3.2. Indoor Positioning

This study establishes a positioning area measuring approximately 3 m in length and width. The design ensures a relatively uniform signal reception gradient within the designated area, thereby enhancing the accuracy of the positioning system. Under this configuration, the proposed positioning system is expected to deliver stable and precise performance within the defined space.

3.2.1. Indoor Space Transmitter Placement Topology

The experimental area is located in the BEE0101 research lab on the first floor of the Electrical Engineering Building at National Formosa University. Taking into account the arrangement of desks and chairs in the space, the reference points for the positioning transmitters are laid out over an area of 3 m × 2.8 m, as illustrated in Figure 5.

3.2.2. First-Stage Positioning

The first-stage positioning utilizes the fingerprinting method combined with the KNN algorithm to obtain a coarse estimate of the user’s location. This initial positioning identifies the user’s approximate region, serving as an accurate starting point for the more detailed second-stage positioning.

In the first stage of localization, the environment is divided into four regions: Region 1, Region 2, Region 3, and Region 4. A database of received signal strength (RSS) values is then constructed for each region; this database underpins both the fingerprinting-based localization and the K-Nearest Neighbors (KNN) algorithm. The total dataset consists of 1600 samples, suggesting that K should not exceed 400. The overall accuracy declines rapidly as K increases. The best performance is achieved at K = 3, with an accuracy of 96.37%, as illustrated in Figure 6.
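The fingerprint lookup described above can be sketched in a few lines. This is a minimal illustration rather than the study's implementation: the number of transmitters, the RSS values, and the function name `knn_predict` are all assumptions made for the example.

```python
import math
from collections import Counter

def knn_predict(fingerprints, query, k=3):
    """Return the majority region label among the k nearest fingerprints.

    fingerprints: list of (rss_vector, region_label) pairs.
    query: RSS vector measured at the unknown location.
    """
    # Rank stored fingerprints by Euclidean distance in RSS space.
    ranked = sorted(fingerprints, key=lambda fp: math.dist(fp[0], query))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]

# Hypothetical RSS readings (dBm) from four transmitters, two per region.
database = [
    ((-45, -60, -62, -70), 1), ((-47, -61, -63, -69), 1),
    ((-60, -44, -71, -63), 2), ((-62, -46, -70, -61), 2),
    ((-61, -70, -45, -60), 3), ((-63, -69, -47, -62), 3),
    ((-70, -62, -60, -44), 4), ((-69, -63, -61, -46), 4),
]

region = knn_predict(database, (-46, -59, -64, -71), k=3)
```

With K = 3, two of the three nearest fingerprints in this toy database belong to Region 1, so the query is assigned to that region.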

To ensure that the generator consistently produces high-quality samples, this experiment examines the relationship between the generator’s input variables, z1 and z2, and the discriminator. The x-axis and y-axis represent the input variables z1 and z2, while the z-axis, D(G), denotes the discriminator’s evaluation score of the generated data. As shown in Figure 6, not all input variable ranges result in high-quality generated samples. A higher D(G) indicates that the discriminator considers the generated data to be more similar to real data, whereas a lower D(G) suggests a greater divergence from real data. This experiment therefore generates samples only from the region where D(G) is greater than 0.7, so that the generated samples are those the discriminator rates as highly similar to real data, thereby enhancing their authenticity and reliability, as illustrated in Figure 7.
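The selection rule D(G) > 0.7 amounts to rejection sampling over the input variables. The sketch below illustrates only that idea: `generator` and `discriminator` are placeholder functions invented for the example (the trained networks are not reproduced here), and the uniform sampling range for z1 and z2 is likewise an assumption.

```python
import math
import random

def generator(z1, z2):
    # Placeholder generator: maps the latent pair to a fake two-value sample.
    return (math.tanh(z1), math.tanh(z2))

def discriminator(sample):
    # Placeholder discriminator: scores samples near the origin as more "real".
    a, b = sample
    return math.exp(-(a * a + b * b))

def sample_accepted(n, threshold=0.7, seed=0):
    """Draw latent pairs until n generated samples score above the threshold."""
    rng = random.Random(seed)
    accepted = []
    while len(accepted) < n:
        z1, z2 = rng.uniform(-1, 1), rng.uniform(-1, 1)
        x = generator(z1, z2)
        if discriminator(x) > threshold:
            accepted.append(((z1, z2), x))
    return accepted

kept = sample_accepted(5)
```

Every retained pair (z1, z2) lies in the part of the input space that the discriminator scores above the threshold; pairs that fall outside it are simply discarded.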

Generating within the input variable space where the discriminator score D(G) > 0.7 enables the generated results to capture the key features of each region, as illustrated in Figure 8.

To validate the reliability of the generated data, this experiment tests them with the KNN model, using real data (1600 samples) as the training set and generated data (800 samples) as the test set. The generated data perform well in the model test, showing a high degree of similarity to the real data. This indicates that the generated data effectively capture the characteristics of the real-world environment and can enhance the performance of the positioning model, as illustrated in Figure 9.

The accuracy of the KNN model was 96.37% before data augmentation. After augmentation, the accuracy of the KNN model with the same parameters increased to 97.04%, an improvement of 0.67 percentage points. Although this improvement may appear modest, it can have a significant impact on the performance and reliability of the positioning system in practical applications, as detailed in Table 3.

3.2.3. Second-Stage Positioning

In the second stage of positioning, this experiment employs a polynomial regression model to fit the relationship between signal reception strength and distance, followed by testing in a real-world environment. As observed in Figure 9, the polynomial regression model is considerably more stable than the traditional signal reception strength channel model. However, a challenge remains in this two-stage positioning process: the presence of multiple intersection clusters rather than just one. Due to the complexity of the real-world environment, multiple intersection clusters may appear when distances are calculated from multiple transmitters. The steps that follow explain how these intersection clusters are handled to obtain the correct coordinate point, as illustrated in Figure 10.
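As a rough illustration of fitting signal strength to distance with a polynomial, the sketch below performs a least-squares fit using only the standard library. The polynomial degree, the calibration values, and the helper names `polyfit` and `predict` are assumptions for the example; the degree and data used in the study are not given in this section.

```python
def polyfit(xs, ys, degree):
    """Least-squares polynomial fit; returns c[0] + c[1]*x + c[2]*x**2 + ..."""
    n = degree + 1
    # Normal equations A c = b built from sums of powers of x.
    a = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for row in range(col + 1, n):
            factor = a[row][col] / a[col][col]
            for k in range(col, n):
                a[row][k] -= factor * a[col][k]
            b[row] -= factor * b[col]
    # Back substitution.
    coeffs = [0.0] * n
    for i in reversed(range(n)):
        s = sum(a[i][j] * coeffs[j] for j in range(i + 1, n))
        coeffs[i] = (b[i] - s) / a[i][i]
    return coeffs

def predict(coeffs, x):
    """Evaluate the fitted polynomial at x."""
    return sum(c * x ** i for i, c in enumerate(coeffs))

# Hypothetical calibration pairs: RSS in dBm, distance in metres.
rss = [-40.0, -50.0, -60.0, -70.0, -80.0]
dist = [0.0005 * r * r + 0.01 * r + 0.1 for r in rss]

coeffs = polyfit(rss, dist, degree=2)
estimate = predict(coeffs, -65.0)  # distance estimate for a new RSS reading
```

In practice the calibration pairs would come from measurements at known distances, and centring or scaling the RSS values before fitting would improve numerical conditioning for higher degrees.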

To address the issue of multiple intersection clusters, this experiment first establishes an intersection matrix:

In the matrix, the rows represent the pairwise combinations of transmitters, while the columns represent the intersection points of the circles formed by the signal strength-to-distance conversion between each pair of transmitters. This method establishes an intersection matrix for all transmitters in the environment. Next, the method calculates the differences in the x and y coordinates for each intersection point and then computes the average values:

Using this method, the centroid of the coordinate differences can be determined. Subsequently, the Euclidean distance between each intersection point’s coordinate difference and the centroid is calculated sequentially for each row in the intersection matrix:

After calculating the distances for each row, the coordinate points closest to the centroid are selected for retention:

The resulting intersection matrix after the calculations will be as follows:

Finally, the correct intersection points are plotted on the coordinate graph (right panel). The centroid distance calculation and retention method effectively preserves the correct intersection clusters, as shown in Figure 11.
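The retention idea can be sketched as follows. This is a simplified reading of the procedure, not a reproduction of the paper's equations: it takes the centroid of the intersection points themselves rather than of coordinate differences, and the four-transmitter layout at the corners of the 3 m × 2.8 m area, the test position, and all function names are assumptions for illustration.

```python
import math
from itertools import combinations

def circle_intersections(c0, r0, c1, r1):
    """Return the two intersection points of two circles, or [] if none."""
    (x0, y0), (x1, y1) = c0, c1
    d = math.hypot(x1 - x0, y1 - y0)
    if d == 0 or d > r0 + r1 or d < abs(r0 - r1):
        return []
    a = (r0 * r0 - r1 * r1 + d * d) / (2 * d)   # distance from c0 to the chord
    h = math.sqrt(max(r0 * r0 - a * a, 0.0))    # half-length of the chord
    mx, my = x0 + a * (x1 - x0) / d, y0 + a * (y1 - y0) / d
    ox, oy = h * (y1 - y0) / d, h * (x1 - x0) / d
    return [(mx + ox, my - oy), (mx - ox, my + oy)]

def retain_nearest_to_centroid(transmitters, distances):
    """Keep, for each transmitter pair, the intersection closest to the centroid."""
    matrix = []  # one row per transmitter pair, two candidate points per row
    for i, j in combinations(range(len(transmitters)), 2):
        pts = circle_intersections(transmitters[i], distances[i],
                                   transmitters[j], distances[j])
        if pts:
            matrix.append(pts)
    all_pts = [p for row in matrix for p in row]
    cx = sum(p[0] for p in all_pts) / len(all_pts)
    cy = sum(p[1] for p in all_pts) / len(all_pts)
    return [min(row, key=lambda p: math.hypot(p[0] - cx, p[1] - cy))
            for row in matrix]

# Hypothetical layout: one transmitter at each corner of the 3 m x 2.8 m area.
transmitters = [(0.0, 0.0), (3.0, 0.0), (0.0, 2.8), (3.0, 2.8)]
true_position = (1.0, 2.0)
distances = [math.dist(t, true_position) for t in transmitters]

retained = retain_nearest_to_centroid(transmitters, distances)
```

With noise-free distances, each pair of circles meets at the true position and at a mirror image of it; the centroid is pulled toward the repeated true position, so the retained point in every row is the true one.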

This experiment uses the Grey Wolf Optimizer (GWO) algorithm to converge the retained intersection points to a single position estimate. The primary reason for choosing this algorithm is that it incorporates the concept of weighting into the convergence process. Traditional multi-transmitter triangulation methods typically select the three points with the strongest signal reception as reference points. This study instead aims for all reference points to contribute, with their weights determined by distance, so the Grey Wolf Optimizer aligns well with the core objectives of this positioning experiment. Initially, the Grey Wolf Optimizer estimates the target position. In this experiment, the centroid of all grey wolf coordinate points (intersection points) is determined.

In Equation (9), N represents the total number of intersection clusters, while wi(x) and wi(y) denote the x and y coordinates of the ith intersection point, respectively. After determining the target point, the calculation is further refined to converge toward the optimal point. In each iteration, the target point is updated in the same manner, similar to how a pack of wolves tracks and closes in on its prey as it moves. This updating process continues until the maximum number of iterations is reached. When the number of epochs reaches the maximum iteration count of 299, all wolves have converged to the target point. The actual test results after incorporating the Grey Wolf Optimizer algorithm are shown in Figure 12.
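The centroid target and its repeated update can be illustrated with a deliberately simplified sketch. It is not the full Grey Wolf Optimizer (there are no alpha, beta, or delta leaders and no random coefficients), and it omits the distance-based weighting; the step size and sample points are assumptions. It only shows points being pulled toward a centroid that is recomputed in each of the 299 iterations.

```python
import math

def centroid(points):
    """Arithmetic mean of the x and y coordinates of the points."""
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)

def converge(points, iterations=299, step=0.05):
    """Move every point a fraction of the way to the current centroid each iteration."""
    wolves = list(points)
    for _ in range(iterations):
        tx, ty = centroid(wolves)  # the target is recomputed every iteration
        wolves = [(x + step * (tx - x), y + step * (ty - y)) for x, y in wolves]
    return centroid(wolves), wolves

# Hypothetical retained intersection points (metres).
start = [(1.0, 1.0), (1.2, 0.9), (0.8, 1.1), (1.1, 1.2)]
estimate, wolves = converge(start)
spread = max(math.dist(w, estimate) for w in wolves)
```

In this unweighted form the centroid does not move, so the final estimate equals the initial centroid and the iterations only shrink the spread; a weighted variant would shift the target toward the more trusted points.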

After integrating the Grey Wolf Optimizer algorithm, the actual test results reveal a certain degree of positioning error, approximately 50 cm. This error primarily arises due to interference sources surrounding the receiver, which affect the accuracy of the positioning results.

To mitigate these interferences, this experiment employs fuzzy theory to establish a fuzzy inference system. This system adjusts the estimated signal reception strength based on the location and intensity of interference sources near the receiver, as well as their impact on signal reception. By doing so, the influence of interference sources on positioning results can be eliminated or reduced, thereby improving positioning accuracy, as detailed in Table 4.

To determine whether the positioning coordinate points are affected by interference, this experiment analyzed the update fluctuations of the positioning coordinates. If the fluctuations exceeded a certain threshold, they were classified as interference. To address this issue, this study applied the concept of fuzzy control and utilized fuzzy linguistic variables to model the coordinate fluctuations caused by interference, as illustrated in Figure 13.
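The threshold test on coordinate updates can be sketched as below. Only the crisp thresholding step is shown, not the fuzzy linguistic model; the threshold value, the sample track, and the function name are assumptions for illustration.

```python
import math

def flag_interference(track, threshold):
    """Mark each coordinate update whose jump from the previous fix exceeds the threshold."""
    flags = [False]  # the first fix has no predecessor to compare against
    for (x0, y0), (x1, y1) in zip(track, track[1:]):
        flags.append(math.hypot(x1 - x0, y1 - y0) > threshold)
    return flags

# Hypothetical sequence of position fixes (metres) with one outlier.
track = [(1.00, 1.00), (1.02, 1.01), (1.60, 1.40), (1.05, 1.00)]
flags = flag_interference(track, threshold=0.3)
```

Both the jump to the outlier and the jump back from it exceed the threshold, so both updates are flagged.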

After incorporating fuzzy control, the positioning error was reduced from 50 cm to 15 cm. However, some positioning errors remain noticeable. It is hypothesized that these errors are primarily caused by signal fluctuations, as detailed in Table 5.

This experiment employs machine learning methods to determine whether a signal exhibits fluctuations. Two datasets are collected: 6000 samples of fluctuating signals and 6000 samples of non-fluctuating signals. These datasets are used to train a machine learning model to identify signal fluctuations, as illustrated in Figure 14.

After fluctuation processing, the average error between the actual coordinates and the positioning result coordinates over 15 initial positioning tests is approximately 4 cm, as detailed in Table 6.

3.3. EEG-Based Attention Level

The attention level sampling in this study was conducted using the BrainLink Lite EEG device, a lightweight model developed by Macrotellect. This device is capable of detecting the subject’s attention level, as well as Low Gamma and Middle Gamma EEG signals.

This EEG dataset focuses on attention level as the sampling target, with each data sequence covering one minute. The sampling rate is one sample per second, giving 60 sampling points per sequence. This approach is intended to capture variations in attention level over each minute.

Next, we applied the same technique used for positioning database augmentation, utilizing Generative Adversarial Networks (GANs) to generate data. However, during the generation training process, we observed anomalies in the generated attention level samples, including negative attention values or values exceeding 100. Additionally, the amplitude exhibited significant fluctuations, as shown in Figure 15.

To address this issue, this experiment adjusted the Loss function in the generator. The original Loss function was:

In the original Loss function, we introduced a weighting mechanism for attention level values. When the individually generated attention level values within a single data sequence exceed 100 or fall below 0, the weight is defined as:

In addition to the attention level value weight, we also introduced a standard deviation weight. This weight is designed to prevent the generated samples from exhibiting excessive amplitude. The formula for calculating the population standard deviation is as follows:

Once the calculation method for the standard deviation is established, a threshold for the standard deviation can be defined. Analysis of the original real attention level data revealed an average standard deviation of approximately 16.9768. Consequently, the threshold was set at 20 in the weight design. This approach is intended to mitigate the issue of excessive amplitude in the generated samples.

After completing the series of weight definitions described above, these defined weights can be incorporated into the Loss function of the generator as follows:

To ensure that the generator consistently produces high-quality samples, we conducted a detailed analysis of the generative variable space to understand the impact of input variables z1 and z2 on the discriminator. Our experimental results indicate that within certain input variable ranges, the value of D(G) is higher, suggesting that the generated data in these regions more closely resemble real data. To guarantee high-quality sample generation, we selectively generate samples in regions where D(G) exceeds 0.7.
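The two penalty terms added to the generator loss (out-of-range attention values and excessive standard deviation) can be sketched as follows. This illustrates the idea only and does not reproduce the paper's formulas: the penalty shapes, the weights `w_range` and `w_std`, and the function names are assumptions. The threshold of 20 and the valid range of 0 to 100 come from the text, and `statistics.pstdev` computes the population standard deviation.

```python
import statistics

def range_penalty(sequence, low=0.0, high=100.0):
    """Mean amount by which values overshoot the valid attention range."""
    overshoot = sum(max(0.0, v - high) + max(0.0, low - v) for v in sequence)
    return overshoot / len(sequence)

def std_penalty(sequence, threshold=20.0):
    """Amount by which the population standard deviation exceeds the threshold."""
    return max(0.0, statistics.pstdev(sequence) - threshold)

def penalized_generator_loss(base_loss, sequence, w_range=1.0, w_std=1.0):
    """Base generator loss plus the two weighted penalty terms."""
    return (base_loss
            + w_range * range_penalty(sequence)
            + w_std * std_penalty(sequence))

calm = [50.0, 55.0, 60.0, 52.0]     # in range, small amplitude
wild = [-10.0, 120.0, 50.0, 30.0]   # out of range, large amplitude

loss_calm = penalized_generator_loss(0.7, calm)
loss_wild = penalized_generator_loss(0.7, wild)
```

A well-behaved sequence leaves the base loss unchanged, while a sequence with out-of-range values or large swings is penalized. In an actual training loop these terms would be written with the tensor operations of the deep learning framework in use so that gradients can flow through them.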

Figure 16 presents generated data sequences randomly sampled using the two different loss functions. The red curve represents the generated data sequence, while the blue curve represents the standard deviation of the generated data sequence at different training stages. Compared to the results generated using the original Loss function (right image), the previously large fluctuations in the generated data have been effectively suppressed by the standard deviation weight. This adjustment also substantially reduces the occurrence of generated attention level values exceeding 100 or falling below 0.

Compared to the model trained with the original dataset, the model trained with the augmented dataset achieved a 1.17% increase in accuracy, as detailed in Table 7.

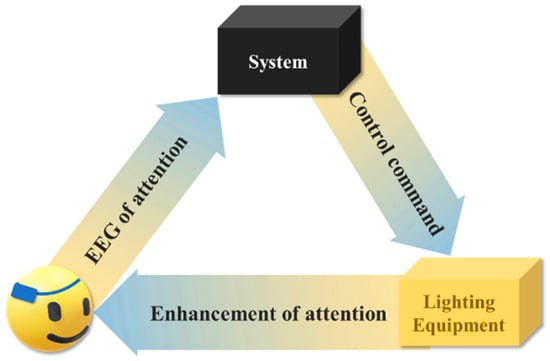

3.4. The Lighting Control System

This system aims to utilize lighting illuminance and color temperature to help users quickly enter a high-attention mode and maintain prolonged focus. To optimize the lighting control system for enhancing user attention, additional improvements were incorporated, as shown in Figure 17.

To realize the intelligent lighting control system, we draw on results reported in the literature [28, 29]. At a color temperature of 6200 K and an illuminance of 1000 lux, concentration rises fastest but is maintained for a relatively short time. At a color temperature of 4100 K and an illuminance of 500 lux, concentration rises slowly, but high concentration is maintained for the longest time. Concentration is lowest at a color temperature of 2900 K. Based on these characteristics, we design an automated lighting control system. One input of the control system is concentration, which we divide into two categories: high-level concentration (Label 0), in which the concentration level is trending upward, and low-level concentration (Label 1), in which the concentration curve is trending downward. The labels are defined in Figure 18.
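One possible way to turn a one-minute attention sequence into the two trend labels is to look at the sign of its least-squares slope. This is an assumption made for illustration; the labeling rule actually used is the one defined in Figure 18, and the function name `trend_label` is hypothetical.

```python
def trend_label(window):
    """Return 0 if the attention window trends upward, otherwise 1."""
    n = len(window)
    mean_t = (n - 1) / 2
    mean_v = sum(window) / n
    # Numerator of the least-squares slope; its sign is the sign of the trend.
    slope_num = sum((t - mean_t) * (v - mean_v) for t, v in enumerate(window))
    return 0 if slope_num > 0 else 1

rising = list(range(40, 100))    # 60 samples, one per second, increasing
falling = rising[::-1]

labels = (trend_label(rising), trend_label(falling))
```

A flat or falling window is assigned Label 1 under this rule, which is one of several reasonable conventions.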

In the design of the fuzzy lighting control system, a fuzzy controller with three inputs and two outputs was established. The three input variables are the one-minute EEG attention level trend, real-time EEG attention level, and current ambient illuminance. The outputs are the lighting color temperature and illuminance.

Figure 19 illustrates the test results. Since this controller is a three-input, two-output system, the characteristics were analyzed by fixing the EEG attention level trend to 0 (Left 1, Right 2) and 1 (Left 2, Right 1) during testing. In the figure, the x- and y-axes represent attention levels (0–100%) and illuminance (0–100%), respectively, while the z-axis represents the output values for color temperature (Left 1, 2) and illuminance (Right 1, 2).

In practical applications, an issue was identified with the fuzzy lighting control system. Since the EEG device samples once per second, changes in EEG attention levels within each second cannot be captured. This limitation introduces a potential time lag in the proposed fuzzy controller. During significant fluctuations in EEG attention levels, the delay causes abrupt changes in color temperature and illuminance, and such abrupt transitions may cause user discomfort, as shown in Figure 20.

With PD control, the output changes sharply at first and then slows as it approaches the target value. The design goal of this lighting control system, however, is for the rate of change to start slowly, gradually accelerate, and then slow again near the target value, reducing the discomfort caused by an excessive rate of change. To achieve this, we propose a Fuzzy-PD control method integrated into the original fuzzy controller, as shown in Figure 21. A Fuzzy-PD controller is added to the light output of the original fuzzy controller. The Kp value of the Fuzzy-PD controller is adjusted according to the gap between the set value and the target value, and this Kp parameter is used to control the PD controller. The PD controller then outputs the light color temperature and luminance setting values based on the adjusted parameters, thereby realizing the intended lighting change pattern.

Figure 22 shows the simulation output of the fuzzy controller combined with the PD controller. In the figure, the green line represents the original control method, which suffers from the sampling time lag. For both color temperature control (left) and luminance control (right), it switches directly to the target set value from the initial state. The blue line represents pure PD control, whose control curve changes rapidly at first, then slows down, and gradually reaches the target value. The red line is the control curve of the Fuzzy-PD controller. Unlike the blue line, the red line changes slowly at first, then accelerates, and slows again when approaching the set value. This matches the design concept for light control stated earlier, which is to reduce the discomfort caused by abrupt changes. The simulated behavior of the Fuzzy-PD controller is consistent with expectations, which should help to control the light more accurately and improve user comfort.
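The difference between the fixed-gain and gap-dependent-gain curves can be reproduced qualitatively with a small simulation. This is a sketch under strong simplifications: the derivative term is omitted, a linear gain schedule stands in for the fuzzy inference that sets Kp, and the gain values, step count, and function names are all assumptions.

```python
def simulate(gain_fn, start, target, steps=40):
    """Iterate y <- y + gain(gap) * error, where gap is the normalized remaining error."""
    span = abs(target - start) or 1.0
    y, path = start, [start]
    for _ in range(steps):
        error = target - y
        y += gain_fn(abs(error) / span) * error
        path.append(y)
    return path

def fixed_gain(_gap):
    # Plain proportional action: the largest step comes first.
    return 0.3

def scheduled_gain(gap, k_min=0.05, k_max=0.6):
    # Gain grows as the normalized gap (1 = far away, 0 = at target) shrinks.
    return k_min + (k_max - k_min) * (1.0 - gap)

# Hypothetical color temperature change from 4100 K to 6200 K.
pd_path = simulate(fixed_gain, 4100.0, 6200.0)
fuzzy_path = simulate(scheduled_gain, 4100.0, 6200.0)
```

With the fixed gain, the first step is the largest and every later step is smaller. With the scheduled gain, the step size starts small, peaks partway through the transition, and shrinks again near the target, which is the slow, fast, slow profile described for the red curve.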

Next, to ensure that the lighting system accurately illuminates the user’s area based on EEG attention levels, the lighting control positioning unit is designed to divide each region of the desktop into three equal parts, representing the left, center, and right sections of the desk. After performing distance calculations between the receiver and the smart lighting fixture, the results are fed into the fuzzy controller along with the coordinates for inference, yielding the optimal control parameters for the illumination angle, as shown in Figure 23 and Figure 24.
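The three-way division of the desktop can be expressed as a simple lookup on the x-coordinate. The desk width and the function name below are assumptions for illustration; the mapping from section and distance to a servo angle is performed by the fuzzy controller and is not reproduced here.

```python
def desk_section(x, desk_width):
    """Classify an x-coordinate on the desk as the left, center, or right third."""
    if not 0 <= x <= desk_width:
        raise ValueError("x lies outside the desk")
    third = desk_width / 3
    if x < third:
        return "left"
    if x < 2 * third:
        return "center"
    return "right"

# Hypothetical desk 1.2 m wide, with three sample receiver positions.
sections = [desk_section(x, 1.2) for x in (0.1, 0.6, 1.1)]
```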

Figure 25 shows a 3D plot of the simulated characteristics of the fuzzy controller. The x-axis and y-axis represent the positioning x-coordinate and the distance between the lighting fixture and the receiver, respectively, while the z-axis corresponds to the servo motor set angle. The red high point represents the result when the lighting fixture is positioned in the center of the desktop area. The blue area indicates the output angle when the lighting fixture is positioned on the left side of the desk, while the green area shows the output angle when the fixture is on the right side. This 3D plot shows the input-output characteristics of the entire fuzzy controller and allows the reasonableness of the design to be assessed.