In this section, we present the main result of this work: an operational interpretation of partial information decomposition (PID).

4.1. PID via Sato’s Outer Bound

Researchers in the information theory community have made numerous efforts to identify a computable characterization of the capacity region of general broadcast channels (see the textbook [33] for a historical summary); yet, a complete solution remains elusive. Nevertheless, significant progress has been made toward this goal. In particular, Sato [34] provided an outer bound for the capacity region of the broadcast channel $P_{XY|T}$, which can be specialized to yield an upper bound on the sum-rate capacity of a general broadcast channel as follows:

$$C_{\mathrm{sum}} \le \min_{\tilde{P}_{XY|T}\in\mathcal{P}}\ \max_{P_T}\ I(T;X,Y), \tag{5}$$

where the set $\mathcal{P}$ is defined as

$$\mathcal{P} = \left\{\tilde{P}_{XY|T} :\ \tilde{P}_{X|T} = P_{X|T},\ \tilde{P}_{Y|T} = P_{Y|T}\right\},$$

i.e., the set of conditional distributions for which the marginal conditional distributions $P_{X|T}$ and $P_{Y|T}$ are preserved. This definition already bears a certain similarity to (4). The inner maximization is over the marginal distribution $P_T$ of the random variable $T$ on the alphabet $\mathcal{T}$, with $I(T;X,Y)$ evaluated under $P_T\tilde{P}_{XY|T}$. For channels on general alphabets (i.e., when the optimization is not necessarily over a compact set), the maximization should be replaced by the supremum and the minimization by the infimum. Because of the minimax form, the meaning of (5) is not yet clear, but the max–min inequality (weak duality) implies that

$$\min_{\tilde{P}_{XY|T}\in\mathcal{P}}\ \max_{P_T}\ I(T;X,Y) \;\ge\; \max_{P_T}\ \min_{\tilde{P}_{XY|T}\in\mathcal{P}}\ I(T;X,Y) \;=\; \max_{P_T}\ \min_{Q\in\Delta_P}\ I_Q(T;X,Y),$$

where the equality follows from the definition of $\mathcal{P}$, and the set $\Delta_P$ is exactly the one defined in (4) with $P_{TX} = P_T P_{X|T}$ and $P_{TY} = P_T P_{Y|T}$. The inner minimization in this form is exactly the second term in (3). Although the max–min form does not yield a true upper bound on the sum-rate capacity, in the PID setting we consider, $P_T$ is always fixed; therefore, the max–min and min–max forms coincide in this setting. This equivalence of mathematical forms does not fully explain the significance of the connection, so we need to examine Sato’s bound more carefully. Let us define the following quantity for notational simplicity:

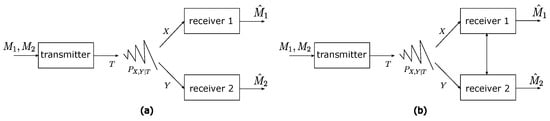
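The max–min inequality invoked above is standard; for completeness, a short derivation is sketched below. Here $\mathcal{P}$ denotes the set of channels $\tilde{P}_{XY|T}$ that preserve $P_{X|T}$ and $P_{Y|T}$, and $I(P_T,\tilde{P}_{XY|T})$ is shorthand introduced only for this sketch, meaning $I(T;X,Y)$ evaluated under $P_T\tilde{P}_{XY|T}$.

```latex
% For every input distribution P'_T and every channel \tilde{P}_{XY|T} \in \mathcal{P}:
\max_{P_T} I(P_T,\tilde{P}_{XY|T})
  \;\ge\; I(P'_T,\tilde{P}_{XY|T})
  \;\ge\; \min_{\tilde{P}'_{XY|T}\in\mathcal{P}} I(P'_T,\tilde{P}'_{XY|T}).
% The left side does not depend on P'_T and the right side does not depend on
% \tilde{P}_{XY|T}; minimizing the left over \tilde{P}_{XY|T} and maximizing the
% right over P'_T gives
\min_{\tilde{P}_{XY|T}\in\mathcal{P}} \max_{P_T} I(P_T,\tilde{P}_{XY|T})
  \;\ge\; \max_{P'_T} \min_{\tilde{P}'_{XY|T}\in\mathcal{P}} I(P'_T,\tilde{P}'_{XY|T}).
```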

Sato’s outer bound was derived using the following argument. For a channel $P_{Y|T}$ with a single input signal $T$ and a single output signal $Y$, Shannon’s channel coding theorem [28] states that the channel capacity is given by $\max_{P_T} I(T;Y)$. Moreover, for any fixed distribution $P_T$, the rate $I(T;Y)$ is achievable, where the probability distribution $P_T$ represents the statistical signaling pattern of the underlying codes. Turning our attention back to the broadcast channel with transition probability $P_{XY|T}$, if the receivers are allowed to cooperate fully and share the two output signals $X$ and $Y$ (i.e., they become a single virtual receiver; see Figure 1b), then clearly the maximum achievable rate would be $\max_{P_T} I(T;X,Y)$. However, Sato further observed that the error probability of any code remains the same on every broadcast channel $\tilde{P}_{XY|T}$ whose conditional marginals coincide with $P_{X|T}$ and $P_{Y|T}$, even though $\tilde{P}_{XY|T}$ may differ from the true broadcast channel transition probability $P_{XY|T}$. This is because each receiver’s output depends on the input only through its own marginal transition probability, $P_{X|T}$ or $P_{Y|T}$, and each decoder uses only its own channel output. Consequently, any sum rate achievable without cooperation on the true channel is also achievable on every such $\tilde{P}_{XY|T}$, and hence cannot exceed the full-cooperation rate $\max_{P_T} I(T;X,Y)$ evaluated under $\tilde{P}_{XY|T}$. Therefore, we can obtain an upper bound by choosing the worst channel configuration $\tilde{P}_{XY|T}$, i.e., the outer minimization in (5).
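A small numerical sketch of (5) may help make the min–max concrete. It assumes binary $T$, $X$, $Y$ and performs both the outer minimization over channels that preserve $P_{X|T}$ and $P_{Y|T}$ and the inner maximization over $P_T$ by plain grid search; this brute-force approach is our illustrative choice, not Sato’s derivation, and all function names are hypothetical. For binary $X$ and $Y$, each conditional table $\tilde{P}(x,y\mid t)$ with fixed marginals has a single free entry, which is what `couplings` enumerates. In general a grid only approximates the true min–max value.

```python
from math import log2

# Sketch only: brute-force grid search over binary alphabets (not the method of [34]).


def mutual_info_t_xy(p_t, cond):
    """Return I(T; X,Y) in bits for binary T, X, Y.

    p_t:  (P(T=0), P(T=1))
    cond: cond[t][x][y] = P~(X=x, Y=y | T=t)
    """
    # Output distribution P(x, y) = sum_t P(t) P~(x, y | t).
    p_xy = [[sum(p_t[t] * cond[t][x][y] for t in range(2)) for y in range(2)]
            for x in range(2)]
    total = 0.0
    for t in range(2):
        for x in range(2):
            for y in range(2):
                joint = p_t[t] * cond[t][x][y]
                if joint > 0.0:  # zero-probability terms contribute nothing
                    total += joint * log2(cond[t][x][y] / p_xy[x][y])
    return total


def couplings(px0, py0, steps):
    """Yield grid points of P~(x, y | t) with P~(X=0|t) = px0 and P~(Y=0|t) = py0.

    For binary X, Y the 2x2 table has one free entry a = P~(0, 0 | t).
    """
    lo = max(0.0, px0 + py0 - 1.0)
    hi = min(px0, py0)
    for k in range(steps + 1):
        a = lo + (hi - lo) * k / steps
        # max(0.0, ...) guards against tiny negative round-off at the endpoints.
        yield [[a, max(0.0, px0 - a)],
               [max(0.0, py0 - a), max(0.0, 1.0 - px0 - py0 + a)]]


def sato_bound(px0_given_t, py0_given_t, steps=40, input_steps=20):
    """Grid approximation of min over admissible channels of max over P_T of I(T; X,Y).

    px0_given_t = (P(X=0|T=0), P(X=0|T=1)); py0_given_t likewise for Y.
    """
    grid0 = list(couplings(px0_given_t[0], py0_given_t[0], steps))
    grid1 = list(couplings(px0_given_t[1], py0_given_t[1], steps))
    inputs = [(k / input_steps, 1.0 - k / input_steps) for k in range(input_steps + 1)]
    best = float("inf")
    for q0 in grid0:          # outer minimization: coupling given T = 0 ...
        for q1 in grid1:      # ... and coupling given T = 1
            cond = (q0, q1)
            inner = max(mutual_info_t_xy(p_t, cond) for p_t in inputs)
            best = min(best, inner)
    return best
```

For example, `sato_bound((0.9, 0.1), (0.8, 0.2))` models two binary symmetric channels with crossover probabilities 0.1 and 0.2. Because the degraded coupling (under which $T \to X \to Y$ is a Markov chain) and the uniform input both lie on the default grids, it returns $1 - h(0.1) \approx 0.531$ bits, the capacity of the stronger receiver, up to round-off.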

With this interpretation of Sato’s upper bound, it becomes clear that $\min_{Q\in\Delta_P} I_Q(T;X,Y)$ is essentially an upper bound on the sum rate of the broadcast channel when the receivers are not allowed to cooperate and the input signaling pattern is fixed to $P_T$. On the other hand, $I(T;X,Y)$ is the rate that can be achieved when the two receivers fully cooperate, again with $P_T$ as the input signaling pattern. In this sense, the synergistic information defined in (3) is a lower bound on the difference between the sum rate with full cooperation and that without any cooperation, when the input signaling pattern follows $P_T$.
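The chain of relations behind this statement can be written out explicitly. In the sketch below, $R_{\mathrm{coop}}(P_T)$ and $R_{\mathrm{sum}}(P_T)$ are notation introduced only for illustration, denoting the rate with full receiver cooperation and the sum rate without cooperation, both under input signaling pattern $P_T$; it also assumes, as stated above, that (3) is $I(T;X,Y)$ minus the minimization over $\Delta_P$.

```latex
% Full cooperation with signaling pattern P_T achieves
R_{\mathrm{coop}}(P_T) = I(T;X,Y),
% while Sato's argument bounds the non-cooperative sum rate by
R_{\mathrm{sum}}(P_T) \le \min_{Q\in\Delta_P} I_Q(T;X,Y).
% Subtracting the second relation from the first:
R_{\mathrm{coop}}(P_T) - R_{\mathrm{sum}}(P_T)
  \;\ge\; I(T;X,Y) - \min_{Q\in\Delta_P} I_Q(T;X,Y),
% and the right-hand side is the synergistic information in (3).
```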

This connection provides an operational interpretation of PID for general distributions. Essentially, synergistic information can be viewed as a surrogate for the cooperative gain. When Sato’s bound is achievable, this lower bound is tight, and the synergistic information is exactly the cooperative gain. In the corresponding learning setting, it is the difference between what can be inferred about $T$ by using $X$ and $Y$ jointly and what can be inferred by using them in a non-cooperative manner. This indeed matches our expectations for synergistic information. In the next subsection, we consider a special case in which Sato’s bound is achievable, so that the lower bound above becomes exact.
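To make the surrogate concrete, the sketch below computes the synergistic information for binary $T$, $X$, $Y$ by brute force. It assumes that (3) takes the form $I(T;X,Y) - \min_{Q\in\Delta_P} I_Q(T;X,Y)$, with $\Delta_P$ the set of joint distributions sharing $P_{TX}$ and $P_{TY}$, and that both values of $T$ have positive probability. Every $Q\in\Delta_P$ keeps $P_T$, $P_{X|T}$, and $P_{Y|T}$, so only the coupling of $X$ and $Y$ given each $t$ is searched, on a grid of our choosing; the function names are hypothetical.

```python
from math import log2

# Sketch only: brute-force grid search, assuming binary T, X, Y with P(T=t) > 0.


def mutual_info_t_xy(p_t, cond):
    """I(T; X,Y) in bits; cond[t][x][y] = Q(X=x, Y=y | T=t)."""
    p_xy = [[sum(p_t[t] * cond[t][x][y] for t in range(2)) for y in range(2)]
            for x in range(2)]
    total = 0.0
    for t in range(2):
        for x in range(2):
            for y in range(2):
                joint = p_t[t] * cond[t][x][y]
                if joint > 0.0:
                    total += joint * log2(cond[t][x][y] / p_xy[x][y])
    return total


def couplings(px0, py0, steps):
    """Grid of 2x2 tables Q(x, y | t) with Q(X=0|t) = px0 and Q(Y=0|t) = py0."""
    lo = max(0.0, px0 + py0 - 1.0)
    hi = min(px0, py0)
    for k in range(steps + 1):
        a = lo + (hi - lo) * k / steps
        yield [[a, max(0.0, px0 - a)],
               [max(0.0, py0 - a), max(0.0, 1.0 - px0 - py0 + a)]]


def synergy_binary(joint, steps=100):
    """Grid approximation of I(T; X,Y) - min_{Q in Delta_P} I_Q(T; X,Y).

    joint[t][x][y] = P(T=t, X=x, Y=y).
    """
    p_t = tuple(sum(joint[t][x][y] for x in range(2) for y in range(2)) for t in range(2))
    cond = tuple([[joint[t][x][y] / p_t[t] for y in range(2)] for x in range(2)]
                 for t in range(2))
    i_joint = mutual_info_t_xy(p_t, cond)   # rate with full cooperation
    grids = []
    for t in range(2):
        px0 = cond[t][0][0] + cond[t][0][1]   # P(X=0 | T=t)
        py0 = cond[t][0][0] + cond[t][1][0]   # P(Y=0 | T=t)
        grids.append(list(couplings(px0, py0, steps)))
    # Worst-case coupling: the minimization over Delta_P.
    i_min = min(mutual_info_t_xy(p_t, (q0, q1)) for q0 in grids[0] for q1 in grids[1])
    return i_joint - i_min
```

With $X$, $Y$ independent and uniform, $T = X \oplus Y$ gives 1 bit (the minimizing $Q$ makes $T$ independent of $(X,Y)$), and $T = X \wedge Y$ gives 0.5 bit; in both cases the minimizer lies on the default grid.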

In one sense, this operational interpretation is quite intuitive, as explained above; in another, it is also quite surprising. For example, in broadcast channels, a more general setup allows the transmitter to also send a common message to both receivers [35,36], in addition to the two individual messages to the respective receivers. One might expect this generalized setting to be more closely connected to the PID setting, with the common message related to the common information, yet this turns out not to be the case here. Moreover, a dual communication problem studied in information theory is the multiple access channel (see, e.g., [28]), where two transmitters wish to communicate with the same receiver simultaneously. Readers may also wonder whether an operational meaning should be sought on this channel instead of on the broadcast channel. However, note that in the PID setting we infer $T$ from $X$ and $Y$, which is similar to the decoding process in the broadcast channel rather than in the multiple access channel. In addition, in the multiple access channel, the two transmitters’ inputs are always independent when the transmitters cannot cooperate, which does not match the PID setting under consideration. Another seemingly related problem studied in the information theory literature is the common information between two random variables [37,38]; however, for the PID defined in [13], this approach also does not yield a meaningful interpretation.

4.2. Gaussian MIMO Broadcast Channel and Gaussian PID

One setting where a full capacity region characterization is indeed known is the Gaussian multiple-input multiple-output (MIMO) broadcast channel [32,39]. In the two-user Gaussian MIMO broadcast channel, the channel transition probabilities $P_{X|T}$ and $P_{Y|T}$ are given, with $T$ being the transmitter input variable and $X$ and $Y$ the channel outputs at the two individual receivers. The channel is usually defined as follows:

$$X = H_X T + N_X, \qquad Y = H_Y T + N_Y,$$

where $H_X$ and $H_Y$ are two channel matrices, the additive noise vector $N_X$ is independent of $T$, and similarly, $N_Y$ is independent of $T$. For a fixed input signaling distribution $P_T$, the pairwise marginal distributions $P_{TX}$ and $P_{TY}$ are well specified. Conversely, for any joint distribution $P_{TXY}$ whose pairwise marginals $P_{TX}$ and $P_{TY}$ are each jointly Gaussian, we can represent these relations in the form above via Gram–Schmidt orthogonalization. Note that the joint distribution of $(T,X,Y)$ is not fully specified here, since the noise vectors $N_X$ and $N_Y$ are not necessarily jointly Gaussian and can be dependent in a more sophisticated manner. The standard Gaussian MIMO broadcast problem usually specifies the noises to be zero-mean Gaussian with certain fixed covariances, and there is also a covariance constraint on the transmitter’s signaling $T$. The problem can be further simplified using certain linear transformations, as discussed below.
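A minimal sketch of the Gram–Schmidt step, assuming zero means and a scalar pair $(T,X)$ for readability; in the vector case the coefficient $h$ becomes $\Sigma_{XT}\Sigma_T^{-1}$ and the noise covariance becomes $\Sigma_X - \Sigma_{XT}\Sigma_T^{-1}\Sigma_{TX}$. The residual $N = X - hT$ is uncorrelated with $T$, and for jointly Gaussian $(T,X)$ this means independent. The function names are hypothetical, and the simulation only checks that the representation reproduces the given second-order statistics.

```python
import math
import random

# Sketch under assumptions: scalar, zero-mean (T, X); helper names are hypothetical.


def gram_schmidt_scalar(var_t, cov_tx, var_x):
    """Return (h, noise_var) such that X = h*T + N with Cov(T, N) = 0."""
    h = cov_tx / var_t                # projection coefficient of X onto T
    noise_var = var_x - h * cov_tx    # equals var_x - cov_tx**2 / var_t
    if noise_var < 0.0:
        raise ValueError("(var_t, cov_tx, var_x) is not a valid covariance")
    return h, noise_var


def simulate_pair(h, var_t, noise_var, n=100_000, seed=0):
    """Draw (T, X) from X = h*T + N with independent Gaussian T, N.

    Returns the sample (Cov(T, X), Var(X)).
    """
    rng = random.Random(seed)
    ts = [rng.gauss(0.0, math.sqrt(var_t)) for _ in range(n)]
    xs = [h * t + rng.gauss(0.0, math.sqrt(noise_var)) for t in ts]
    mean_t = sum(ts) / n
    mean_x = sum(xs) / n
    cov = sum((t - mean_t) * (x - mean_x) for t, x in zip(ts, xs)) / (n - 1)
    var = sum((x - mean_x) ** 2 for x in xs) / (n - 1)
    return cov, var
```

For instance, `gram_schmidt_scalar(2.0, 1.0, 3.0)` returns `(0.5, 2.5)`, and simulating with these values gives a sample covariance near 1.0 and a sample variance of $X$ near 3.0.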

Let us assume for now that the noise covariance matrices $\Sigma_{N_X}$ and $\Sigma_{N_Y}$ are full rank (when they are not, a limiting argument can be invoked to show that the same conclusion holds). In this case, it is without loss of generality to assume that they are identity matrices, since otherwise we can apply receiver-side linear transforms to make them so, i.e., transformations based on the eigenvalue decompositions of $\Sigma_{N_X}$ and $\Sigma_{N_Y}$, respectively. For the same reason, we can assume that the covariance matrix $\Sigma_T$ of the input $T$ is an identity matrix, through a linear transformation at the transmitter. These reductions to independent noise and independent channel input are often referred to as the transmitter precoding transformation and the receiver precoding transformations in the communication literature; see, e.g., [39].
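A minimal sketch of the receiver-side whitening step, assuming a $2\times 2$ positive definite noise covariance for concreteness; all helper names are hypothetical. With $\Sigma = U\Lambda U^{\top}$, the transform $W = \Lambda^{-1/2}U^{\top}$ satisfies $W\Sigma W^{\top} = I$, so a receiver that applies $W$ to its output sees identity-covariance noise and the channel matrix $WH$ in place of $H$.

```python
from math import hypot, sqrt

# Sketch under assumptions: 2x2 symmetric positive definite noise covariance;
# all helper names are hypothetical.


def eig_sym_2x2(a, b, c):
    """Eigen-decomposition of [[a, b], [b, c]]: returns (eigenvalues, unit eigenvectors)."""
    mean = (a + c) / 2.0
    rad = hypot((a - c) / 2.0, b)
    lams = (mean + rad, mean - rad)
    if b == 0.0:
        # Already diagonal: eigenvectors are the coordinate axes, matched to lams.
        vecs = ((1.0, 0.0), (0.0, 1.0)) if a >= c else ((0.0, 1.0), (1.0, 0.0))
        return lams, vecs
    vecs = []
    for lam in lams:
        v = (lam - c, b)            # satisfies (A - lam*I) v = 0
        norm = hypot(v[0], v[1])
        vecs.append((v[0] / norm, v[1] / norm))
    return lams, tuple(vecs)


def whitening_matrix(a, b, c):
    """Return W = Lambda^{-1/2} U^T, so that W Sigma W^T = I for Sigma = U Lambda U^T."""
    lams, vecs = eig_sym_2x2(a, b, c)
    if min(lams) <= 0.0:
        raise ValueError("noise covariance must be full rank (positive definite)")
    # Row i of W is the i-th unit eigenvector scaled by 1/sqrt(eigenvalue).
    return [[v[0] / sqrt(lam), v[1] / sqrt(lam)] for lam, v in zip(lams, vecs)]


def matmul(p, q):
    """Plain-list matrix product p @ q."""
    return [[sum(p[i][k] * q[k][j] for k in range(len(q))) for j in range(len(q[0]))]
            for i in range(len(p))]


def transpose(p):
    return [list(row) for row in zip(*p)]
```

For example, with `w = whitening_matrix(2.0, 0.5, 1.0)`, the product `matmul(matmul(w, sigma), transpose(w))` for `sigma = [[2.0, 0.5], [0.5, 1.0]]` is the identity up to round-off, and `matmul(w, H)` gives the transformed channel matrix for a hypothetical `H`.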

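For the two-user Gaussian broadcast channel, the worst channel configuration problem discussed in the general setting is essentially the least favorable noise problem considered by Yu and Cioffi [39] under the simplification above, where the dependence between the noise vectors $N_X$ and $N_Y$ must be chosen to make the channel hardest to communicate over. It was shown in [39] that the least favorable noise problem can be recast as the optimization problem

$$\min_{\Sigma_N}\ \frac{1}{2}\log\frac{\det\left(HH^{\top}+\Sigma_N\right)}{\det\Sigma_N} \quad \text{s.t.}\quad \Sigma_N = \begin{bmatrix} I & \Phi\\ \Phi^{\top} & I\end{bmatrix}\succeq 0,$$

where $H = [H_X^{\top}, H_Y^{\top}]^{\top}$ and $\Sigma_N$ is the covariance matrix of the stacked noise vector $(N_X, N_Y)$, when $\Sigma_N$ is nonsingular. It can be shown that this problem is convex.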
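A minimal sketch of the least favorable noise problem in the simplest case, assuming scalar channel gains $h_X$, $h_Y$, input $T\sim\mathcal{N}(0,1)$, and unit-variance Gaussian noises whose correlation $\rho$ plays the role of $\Phi$; $\Sigma_N$ is nonsingular exactly when $|\rho|<1$. Ternary search is our substitute for a general convex solver (the scalar objective is unimodal in $\rho$), and the function names are hypothetical.

```python
from math import log2

# Sketch under assumptions: scalar gains hx, hy, input T ~ N(0, 1), unit-variance
# Gaussian noises with correlation rho (the scalar Phi); helper names are hypothetical.


def coop_rate(hx, hy, rho):
    """I(T; X,Y) in bits, i.e. 0.5*log2(det(H H^T + Sigma_N) / det(Sigma_N)), for |rho| < 1."""
    # With a single input, det(H H^T + Sigma_N) / det(Sigma_N) = 1 + H^T Sigma_N^{-1} H.
    snr = (hx * hx + hy * hy - 2.0 * rho * hx * hy) / (1.0 - rho * rho)
    return 0.5 * log2(1.0 + snr)


def least_favorable_rho(hx, hy, eps=1e-9, iters=200):
    """Ternary search for the noise correlation that minimizes coop_rate.

    eps keeps Sigma_N nonsingular by staying inside (-1, 1).
    """
    lo, hi = -1.0 + eps, 1.0 - eps
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if coop_rate(hx, hy, m1) <= coop_rate(hx, hy, m2):
            hi = m2   # for a unimodal objective the minimizer is not in (m2, hi]
        else:
            lo = m1   # ... nor in [lo, m1)
    rho = (lo + hi) / 2.0
    return rho, coop_rate(hx, hy, rho)
```

For example, `least_favorable_rho(1.0, 0.5)` returns a correlation close to 0.5 and a rate close to 0.5 bit, which is the stronger receiver’s single-user rate $\tfrac{1}{2}\log_2(1+1)$; subtracting it from `coop_rate(1.0, 0.5, 0.0)` gives the cooperative gain when the true noises are independent.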

Yu and Cioffi also showed that in this setting, Sato’s upper bound is achievable, i.e., it equals the sum-rate capacity. Moreover, for any Gaussian-distributed input signaling $P_T$, the corresponding value $\min_{Q\in\Delta_P} I_Q(T;X,Y)$ can be achieved as a sum rate on this broadcast channel through a more sophisticated scheme known as dirty-paper coding [40]. Therefore, when the pairwise marginals $P_{TX}$ and $P_{TY}$ are each jointly Gaussian, the synergistic information is exactly the cooperative gain of the corresponding Gaussian broadcast channel using this specific input signaling pattern $P_T$. The connection in the Gaussian setting is of particular interest, given the practical importance of the Gaussian PID, which was thoroughly explored in [41].
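As a worked illustration, consider simplifying assumptions not made in the text: scalar gains $h_X$, $h_Y$, input $T\sim\mathcal{N}(0,1)$, unit-variance noises that are independent in the true channel, and the noise correlation $\rho$ as the only free parameter in the least favorable noise problem above. Under these assumptions, the synergistic information, and hence the cooperative gain, has a closed form:

```latex
% True channel (independent noises, \rho = 0):
I(T;X,Y) = \tfrac{1}{2}\log\left(1 + h_X^2 + h_Y^2\right)
% Least favorable correlation (|\rho| < 1):
\min_{\rho}\ \tfrac{1}{2}\log\left(1 + \frac{h_X^2 + h_Y^2 - 2\rho h_X h_Y}{1-\rho^2}\right)
  = \tfrac{1}{2}\log\left(1 + \max(h_X^2, h_Y^2)\right),
% attained at \rho = h_Y / h_X when |h_Y| < |h_X| (symmetrically otherwise),
% and approached as \rho \to \mathrm{sgn}(h_X h_Y) when |h_X| = |h_Y|.
% Hence the synergistic information (the cooperative gain) is
\tfrac{1}{2}\log\frac{1 + h_X^2 + h_Y^2}{1 + \max(h_X^2, h_Y^2)},
% e.g. h_X = h_Y = 1 gives \tfrac{1}{2}\log_2(3/2) \approx 0.29 bits.
```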