The use of Rigel in the creation of such pipelines, along with the integration of cloud simulations in the testing pipeline, should make this pipeline a powerful tool for ATOM’s developers. In addition, though the proposed pipeline is specific to ATOM, it could also serve as a blueprint for similar pipelines that test other robotic applications.

The proposed CI pipeline benefits the research work on calibration, as it allows several researchers (at the time of writing, ATOM has around 15 contributors) to work in parallel on their research lines, while the periodic tests that are carried out automatically help keep the core of the calibration framework free of bugs. Having the calibration of a robotic system systematically tested as part of a series of verifications has the potential to make the ATOM framework more stable and easier to maintain.

State of the Art

The benefits of using cloud resources in robotics were studied by Guizzo [5] in 2011. Chief among these is the fact that, by using cloud resources, fewer computational resources are needed and, as such, lighter robotic systems can be used. One possible application for these resources lies in, for example, the training of neural networks. The significant computational cost required to train certain networks makes the use of cloud resources appealing [6].

The introduction of CI/CD pipelines in software development brings several benefits. Among these are better communication between developers and clients, since feedback is faster due to the CD process, and a general improvement in the quality of the development, due to automatic testing. Furthermore, the CI process helps in the detection of errors early in development [7,8]. Tools exist that facilitate the application of CI/CD in ROS applications, such as the ROS Build Farm (http://wiki.ros.org/buildfarm (accessed on 4 March 2025)) and ROS Industrial CI (https://github.com/ros-industrial/industrial_ci (accessed on 4 March 2025)) [9].

A framework that includes CI processes for the testing of racing software was presented by Jiang et al. [9]. However, even though Amazon Web Services (AWS) are used in this pipeline and the racing software, named AutOps, is containerized, RoboMaker is not used and no simulations are run on the cloud. Containerization is a necessary step for using RoboMaker and is explained further in this paper.

As mentioned earlier, Teixeira et al. [3] proposed a solution for the integration of RoboMaker simulations in a CI/CD pipeline. The process is triggered after a change is made to the source code. Afterwards, ROS Industrial CI is used to run unit and integration tests. Following that, a bundle called the robot application is created. Generally, the robot application includes everything that is supposed to be tested by the cloud simulations. This bundle is paired with another, called the simulation application, and both are uploaded to the Amazon Web Services Simple Storage Service (AWS S3) to then be used by RoboMaker. It should be noted that, at the time of writing, RoboMaker no longer uses bundles stored in AWS S3 and instead uses container images stored in the Amazon Web Services Elastic Container Registry (AWS ECR). After the cloud simulations are finished, the results are analyzed. If the simulation proves successful, the CD process is triggered and a container image is deployed.
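The gating logic described above (deployment happens only when every earlier stage succeeds) can be illustrated with a minimal Python sketch. The function `run_pipeline` and the stage names are hypothetical placeholders introduced here for illustration; they do not correspond to the implementation of Teixeira et al. [3] or to any RoboMaker API.

```python
def run_pipeline(stages, deploy):
    """Run each (name, check) stage in order and deploy only if all succeed.

    Returns the name of the first failing stage, or None after deployment.
    """
    for name, check in stages:
        if not check():
            return name  # stop early: later stages and deployment are skipped
    deploy()
    return None


# Hypothetical stand-ins for the real steps; each returns True on success.
deployed = []
stages = [
    ("unit and integration tests", lambda: True),
    ("build and upload images", lambda: True),
    ("cloud simulation", lambda: True),
]
failed_stage = run_pipeline(stages, deploy=lambda: deployed.append("image"))
```

In this sketch, a failing stage short-circuits the loop, so a broken build never reaches the simulation or deployment steps.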

Containers have been mentioned several times as they are integral parts of this process and are, in fact, currently needed to run simulations in RoboMaker. A container is a unit of software that combines code and dependencies. The use of containers in software development brings several benefits. For example, since a container includes the software’s dependencies, the software can be run regardless of the system it is run on [10]. Furthermore, this can ease the sharing of code and lead to increased modularity [11]. One of the typical tools used to containerize software is Docker.

A container is created by running a container image, and an image is built from what is known as a Dockerfile. When it comes to orchestrating or launching multiple containers, a YAML file is required as input to a tool named Docker Compose (https://docs.docker.com/compose/ (accessed on 4 March 2025)). A pipeline was proposed by Melo et al. [12] that generates these YAML files automatically. Furthermore, this pipeline also creates the Dockerfiles necessary to containerize ROS workspaces based on another YAML file that is given as input to the pipeline.

In their later work, Melo et al. [4] proposed an improved solution: Rigel. Rigel can create container images from entire ROS workspaces and orchestrate several containers in order to test the robotic software. Rigel can also deploy container images to remote container registries, like Docker Hub (https://hub.docker.com/ (accessed on 4 March 2025)) or AWS ECR. Rigel is a plugin-based tool, where each plugin performs a specific task, and it is configured via what is known as a Rigelfile.

Containerization is present in a robotic software development paradigm proposed by Lumpp et al. [11]. In this methodology, the software is containerized and then simulations are run on the robot’s hardware. Hence, though the benefits of containerization are taken advantage of, the same cannot be said for cloud simulations.

One of the components to be integrated in our proposed approach is static code analysis. This is the process of verifying that code is compliant with certain rules or guidelines without running it [13]. This process helps in the detection of problems in the earlier development stages [13,14]. Tools like Codacy (https://www.codacy.com/ (accessed on 4 March 2025)) exist that perform static analysis on code repositories. Furthermore, Santos et al. [15] have created a tool that performs static code analysis, intended to be used in ROS workspaces, named the High Assurance ROS Framework (HAROS) (https://github.com/git-afsantos/haros (accessed on 4 March 2025)). While most static analysis tools only analyze individual ROS nodes, HAROS takes into account complete ROS applications.

Yet another component to be included in this proposed pipeline is unit testing. A unit test tests a single unit of code [16,17]. Typically, this means running a unit of code, such as a method or function, and comparing its output with the expected result. Niedermayr et al. [16] differentiate these tests from system tests, which test the entire system at once instead of individual code units. A tool for running unit tests for Python source code, which will be used in our pipeline, is the built-in unittest (https://docs.python.org/3/library/unittest.html (accessed on 4 March 2025)) module.
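As a minimal illustration of a unit test written with the unittest module, consider the sketch below. The function `mean_error` and its tests are hypothetical examples introduced here; they are not taken from the ATOM code base.

```python
import unittest


def mean_error(errors):
    """Return the arithmetic mean of a non-empty list of error values."""
    if not errors:
        raise ValueError("errors must not be empty")
    return sum(errors) / len(errors)


class TestMeanError(unittest.TestCase):
    def test_known_values(self):
        # Run the unit and compare its output with the expected result.
        self.assertAlmostEqual(mean_error([1.0, 2.0, 3.0]), 2.0)

    def test_empty_input_raises(self):
        # Invalid input should be rejected explicitly.
        with self.assertRaises(ValueError):
            mean_error([])


def run_tests():
    """Load and run the test case, returning the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestMeanError)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Calling `run_tests()` executes both tests; in a CI pipeline, the same test case would more commonly be discovered and run with `python -m unittest`.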

Code coverage is a way of measuring how much of a module’s code is exercised by unit tests [18]. Several metrics may be used for code coverage. One of these is line coverage, or the ratio of lines covered [18]; an alternative is the ratio of functions or methods that are run when running the unit tests [16]. Codacy can also display the results of a code coverage report, uploaded as part of a CI pipeline.
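The two metrics can be made concrete with a short sketch. It assumes that the set of executable lines, the set of lines executed by the tests and the lines belonging to each function are already known (in practice, a coverage tool records them); the function names and the sample data are hypothetical.

```python
def line_coverage(executable_lines, executed_lines):
    """Ratio of executable lines that were run at least once."""
    executable = set(executable_lines)
    if not executable:
        raise ValueError("coverage is undefined without executable lines")
    return len(executable & set(executed_lines)) / len(executable)


def function_coverage(function_lines, executed_lines):
    """Ratio of functions in which at least one line was run."""
    if not function_lines:
        raise ValueError("coverage is undefined without functions")
    executed = set(executed_lines)
    covered = [name for name, lines in function_lines.items()
               if set(lines) & executed]
    return len(covered) / len(function_lines)


# Hypothetical module: 'classify' spans lines 2-4, 'unused' spans lines 7-8.
functions = {"classify": {2, 3, 4}, "unused": {7, 8}}
executable = {2, 3, 4, 7, 8}
executed = {2, 4}  # lines hit while running the tests

line_ratio = line_coverage(executable, executed)      # 2 of 5 lines
function_ratio = function_coverage(functions, executed)  # 1 of 2 functions
```

The example shows that the two metrics can disagree for the same test run, which is why a coverage report should state which metric it uses.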

The value of unit testing and code coverage reports was studied by Niedermayr et al. [16]. They concluded that code coverage, using method coverage as a metric, is indeed useful for measuring the extent of unit tests, but not of system tests. In the case of system tests, the authors warn of the possibility of pseudo-tested methods. This occurs when, for example, a test is poorly written so that it does not effectively test a function but still counts towards coverage. The authors state that the occurrence of these pseudo-tested methods is higher in the case of system tests than in the case of unit tests.
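The idea of a pseudo-tested method can be illustrated with the following sketch, which is a simplified example and not the detection procedure used by Niedermayr et al. [16]. All names are hypothetical. A check that executes a function without asserting anything still passes when the function’s logic is replaced by a stub, whereas a check that compares the output with the expected value fails.

```python
def normalize(values):
    """Scale the values so that they sum to one."""
    total = sum(values)
    return [v / total for v in values]


def normalize_stub(values):
    """Stand-in for 'normalize' with its logic removed."""
    return []


def weak_check(impl):
    # Executes the code (so it counts towards coverage) but asserts nothing.
    impl([1.0, 3.0])


def strong_check(impl):
    # Compares the output with the expected result.
    assert impl([1.0, 3.0]) == [0.25, 0.75]


def passes(check, impl):
    """Return True if the check completes without an assertion failure."""
    try:
        check(impl)
    except AssertionError:
        return False
    return True


weak_detects_stub = not passes(weak_check, normalize_stub)
strong_detects_stub = not passes(strong_check, normalize_stub)
```

Under `weak_check` alone, `normalize` would be reported as covered even though no test would notice if its body were removed.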

A study was conducted at Google in 2019 by Ivanković et al. [18], with the purpose of understanding how useful developers considered code coverage tools to be. They concluded that these tools are indeed used by most developers when readily available. The authors also give recommendations for their integration in development, namely that the tools should operate automatically and as effortlessly as possible.

The target for testing by the pipeline proposed in this paper is ATOM, as mentioned. ATOM is a calibration software for generalized robotic systems. It is capable of handling several types of calibration:

sensor to sensor,

sensor in motion and

sensor to frame [

2]. This system is based on the idea of indivisible transformations. Generally, a solution to a calibration problem involves simplifying the topological tree of transformations, given a specific situation and system. However, ATOM does the opposite, preserving the complexity of a system’s transformation tree to achieve generalization. Examples of systems calibrated using ATOM (

https://github.com/lardemua/atom/tree/noetic-devel/atom_examples (accessed on 4 March 2025)) can be found in

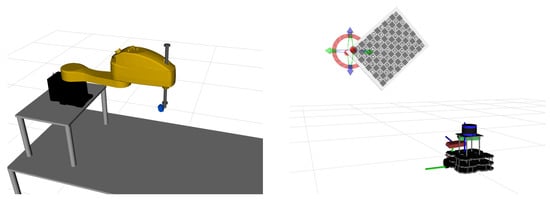

Figure 1.

The ATOM calibration process comprises multiple steps. First, the calibration is configured via a YAML configuration file. An optional step where an estimate for the sensors’ positioning can be given follows. The next step is not optional, however, and that is the data collection and labeling step. This process outputs a dataset, in the form of a JavaScript Object Notation (JSON) file. This dataset is then used as input to the actual calibration procedure, which outputs another dataset and robot description files, both calibrated. This calibrated dataset is used afterwards in a calibration evaluation process that compares the results to the ground truth and outputs the errors in the calibration.
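The final evaluation step, which compares calibrated results with the ground truth, can be sketched as follows. The JSON layout used here (a "sensors" mapping with a "translation" entry per sensor) and the function `translation_errors` are assumptions made purely for illustration; they do not reproduce ATOM’s actual dataset format or evaluation scripts.

```python
import json
import math


def translation_errors(calibrated, ground_truth):
    """Euclidean distance between estimated and true sensor positions."""
    errors = {}
    for name, truth in ground_truth["sensors"].items():
        estimate = calibrated["sensors"][name]
        errors[name] = math.dist(estimate["translation"], truth["translation"])
    return errors


# Hypothetical datasets, written inline instead of being read from files.
calibrated = json.loads(
    '{"sensors": {"camera": {"translation": [0.0, 0.0, 0.0]}}}'
)
ground_truth = json.loads(
    '{"sensors": {"camera": {"translation": [3.0, 4.0, 0.0]}}}'
)

errors = translation_errors(calibrated, ground_truth)
```

In a CI pipeline, errors of this kind could be compared against a threshold to decide whether a calibration test passes.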

We believe that the proposed pipeline would prove to be an effective and useful tool for ATOM’s development team. Furthermore, given the tools and concepts explored thus far, it seems that all of the assets necessary to create such a pipeline already exist. Most notable of these is Rigel, as its inclusion would simplify the creation of this pipeline considerably.