1. Introduction

Babies cry as an innate reflex to express needs such as hunger, discomfort, and the need to sleep. Interpreting these cries accurately and quickly is critical to a baby’s healthy development and happiness [1]. However, inexperienced parents, new caregivers, and even some healthcare professionals may have difficulty understanding why a baby is crying. This can lead to prolonged crying, increased stress, and negative effects on cognitive development [2].

Studies on the automatic classification of baby crying sounds have contributed significantly to this field. Such research builds on the analysis and classification of cry recordings with sound processing methods and machine learning algorithms. This literature review examines important studies on the classification and detection of baby crying sounds and discusses in detail their methodologies, datasets, feature extraction methods, and classification algorithms.

Studies on baby cry classification have developed a range of approaches that combine sound processing and machine learning algorithms. Sharma [3] classified conditions such as hunger, need to burp, abdominal pain, discomfort, fatigue, loneliness, feeling cold or hot, and fear on the Donate a Cry dataset, achieving 81.27% accuracy with k-means, hierarchical clustering, and Gaussian mixture models using features such as mean frequency, median frequency, spectral entropy, and skewness.

Maghfira [4] achieved a 94.97% success rate in classifying hunger, discomfort, need to pass gas, and abdominal pain on the Dunstan Baby dataset with a CNN-RNN. Similarly, Franti [5] achieved 89% accuracy with a CNN on the same dataset. Liu [6] achieved a 76.4% success rate with the nearest neighbor algorithm and artificial neural networks using LPC, LPCC, MFCC, and BFCC features on sounds recorded in a NICU environment. Turan [7] achieved 86.1% accuracy using capsule networks on the CRIED dataset.

Osmani [8] performed classification on the Dunstan Baby dataset with SVM, bagged decision tree, and boosted tree algorithms, using parameters such as spectrum, tone, zero-crossing rate, root mean square, energy, and statistical features. Chang [9] used a CNN with spectrogram-based features to classify hunger, pain, and sleep categories on a dataset collected at National Taiwan University Hospital, reaching an accuracy of 78.5%. Bano [10] achieved 86% accuracy with KNN using features such as MFCC and short-term energy on self-recorded data. Orlandi [11] used the median, mean, standard deviation, minimum, and maximum values of the F0 and F123 parameters to distinguish full-term from preterm babies and achieved an 87% success rate with logistic regression, multilayer perceptron, SVM, and RF algorithms. Bhagatpatil [12] achieved 91.58% accuracy with k-means clustering and KNN using MFCC and LFCC features on self-recorded data. Rosales-Pérez [13] achieved 97.96% accuracy in classifying hunger and pain states on the Baby Chillanto dataset, using MFCC and LPC features with a fuzzy model algorithm. Yamamoto [14] achieved 62.1% accuracy with the nearest neighbor algorithm using FFT features on self-recorded data covering discomfort, hunger, and sleep categories. Finally, Bashiri and colleagues [15] achieved 99.9% accuracy on the Baby Chillanto dataset using MFCC features and an artificial neural network (ANN).

Studies on baby cry detection have likewise developed different sound processing and machine learning approaches to overcome the challenges of this task. For example, Chang [16] performed baby cry detection on a self-recorded dataset that included background sounds such as television and speech, achieving 99.83% accuracy with a CNN on spectrogram-based features. Manikanta [17] recorded baby cries together with air conditioner and fan noise in a home environment, extracted MFCC features, and achieved 86% accuracy with 1D-CNN, FFNN, and SVM algorithms. Dewi [18] achieved 90% accuracy with the KNN algorithm using LFCC features on a self-recorded dataset of crying and non-crying sounds. Gu [19] used LPC features on a self-recorded dataset containing background sounds such as laughter and dog barking and achieved a 97.1% success rate with dynamic time warping (DTW). Ferretti [20] worked on two datasets: real recordings from a hospital’s Neonatal Intensive Care Unit (NICU) and a dataset containing synthetic sounds such as speech and “beep” sounds. Using a CNN with log-Mel coefficient features, they achieved 86.58% accuracy on the real dataset and 92.92% on the synthetic dataset. Feier [21] worked on the TUT Rare Sound Events 2017 dataset, which includes sounds such as glass breaking, gunshots, and baby cries; using log-amplitude mel-spectrogram features, a CRNN achieved an 85% success rate for baby cry detection and 87% across all targets. Torres [22] worked on a dataset collected from online sources that contains background sounds such as adult crying and vacuum cleaners. Features such as MFCC, a voiced–unvoiced counter, and harmonic ratio accumulation with consecutive F0 were extracted from the audio, and an AUC of 92% was achieved with Support Vector Data Description (SVDD) and CNN algorithms. Lavner [23] worked on a home-environment dataset containing background sounds such as talking and door opening and achieved 95% accuracy with a CNN using features such as MFCC, pitch, and formants.

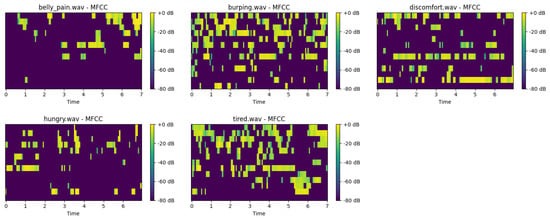

This study aims to develop a system that automatically classifies the needs or distress underlying babies’ cries. The system will extract meaningful features using sound processing techniques, such as Mel-Frequency Cepstral Coefficients (MFCCs), and classify them with machine learning algorithms, allowing parents and caregivers to respond to babies’ needs accurately and quickly. Such a system can support healthy development by reducing babies’ stress levels, and it can help build a healthier and happier relationship by reducing the stress of parents and caregivers. The study will also contribute to the academic literature on sound processing and machine learning and can guide parents as a practical training and support tool.
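For reference, MFCCs are commonly computed by splitting the signal into short frames, taking the power spectrum of each frame, applying a bank of J triangular filters spaced on the mel scale, taking the logarithm of each filter energy E_j, and applying a discrete cosine transform. The two formulas below are the common textbook mel mapping (f in Hz) and DCT-II step; they are a sketch of the general technique, and the filter count J, frame settings, and number of coefficients N used in this study are not assumed here.

$$
m(f) = 2595 \log_{10}\!\left(1 + \frac{f}{700}\right)
$$

$$
c_n = \sum_{j=1}^{J} \log(E_j)\,\cos\!\left[\frac{\pi n}{J}\left(j - \frac{1}{2}\right)\right], \qquad n = 0, 1, \ldots, N-1
$$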

The main contributions of the article are as follows:

The study provides a more effective solution than existing approaches in this field by developing an ANN-based integrated model for classifying baby sounds.

The artificial neural network model used in the study was improved through hyperparameter optimization with the grid search algorithm, which significantly increased its accuracy.

To the best of our knowledge, the proposed model, with an average accuracy of 90%, outperforms the existing work [24], which reported 85.93% accuracy on the Donate a Cry test data.

The rest of this paper is organized as follows. Section 2 presents the materials and methods. Section 3 describes the experimental studies, including the experimental setup and results. Finally, Section 4 summarizes the conclusions and future work.

3. Experimental Results

This paper used VGG16 (a 16-layer pre-trained CNN that is frequently used in image classification tasks and is an important milestone in deep learning), KNN, CNN, random forest-based methods (M1, M2, M3, M4, M5, and M6), and the proposed ANN-based method to classify baby sounds from audio data. These methods were chosen to reproduce studies from the literature that reported high accuracy. In the testing phase, all models were evaluated and compared on the Donate a Cry dataset. In the simulations, the dataset was divided into three parts: 75% for training, 10% for validation, and 15% for testing (see the split in [24] for comparison).
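The 75/10/15 split described above can be sketched as a seeded random shuffle of sample indices. This is a minimal illustration, assuming a plain (non-stratified) random split and a hypothetical dataset of 1000 samples; the function name `split_indices` is hypothetical, and given the class imbalance discussed below, a stratified split may be a reasonable alternative.

```python
import random

def split_indices(n_samples, train_frac=0.75, val_frac=0.10, seed=42):
    """Shuffle sample indices and cut them into train/validation/test parts.

    The test part receives whatever remains (15% with the defaults).
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # seeded so the split is reproducible
    n_train = int(round(train_frac * n_samples))
    n_val = int(round(val_frac * n_samples))
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]
    return train, val, test

# Hypothetical dataset size, for illustration only.
train_idx, val_idx, test_idx = split_indices(1000)
print(len(train_idx), len(val_idx), len(test_idx))  # → 750 100 150
```

Fixing the seed keeps the three parts disjoint and identical across runs, which matters when several models are compared on the same test data.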

In the proposed method, hyperparameter optimization was performed with the grid search (GS) algorithm to tune the parameters of the ANN. The near-optimal hyperparameters selected by GS are shown in Table 5.

The grid search optimized not only the model’s hyperparameters but also the choice of training optimizer. Three optimizer options were provided to the grid search: Adam, RMSProp, and SGD (stochastic gradient descent). Determining the most appropriate combination of optimizer and hyperparameters thereby increased the model’s performance.
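A grid search that treats the optimizer as one more hyperparameter can be sketched as an exhaustive loop over the Cartesian product of candidate values. The grid below is hypothetical (the values actually selected are those in Table 5), and `toy_evaluate` is a stand-in scorer; in a real run it would train the ANN with the given parameters and return its validation accuracy.

```python
import itertools

# Hypothetical search space; only the optimizer options come from the text.
PARAM_GRID = {
    "optimizer": ["adam", "rmsprop", "sgd"],
    "learning_rate": [1e-3, 1e-4],
    "hidden_units": [64, 128],
    "dropout": [0.2, 0.4],
}

def grid_search(param_grid, evaluate):
    """Evaluate every parameter combination and keep the best-scoring one.

    `evaluate` maps a parameter dict to a validation score (higher is better).
    """
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

def toy_evaluate(params):
    """Hypothetical scorer for illustration only (no training happens here)."""
    score = 0.5
    score += 0.2 if params["optimizer"] == "adam" else 0.0
    score += 0.1 if params["learning_rate"] == 1e-3 else 0.0
    score += 0.05 if params["hidden_units"] == 128 else 0.0
    score -= params["dropout"] / 10
    return score

best, score = grid_search(PARAM_GRID, toy_evaluate)
print(best)  # → {'optimizer': 'adam', 'learning_rate': 0.001, 'hidden_units': 128, 'dropout': 0.2}
```

The cost grows multiplicatively with each added dimension (3 × 2 × 2 × 2 = 24 training runs here), which is why grid search is usually limited to a small number of candidate values per hyperparameter.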

Table 6 compares the performance of different models for classifying baby sounds. The models were supported by DA (data augmentation) and by MFCC (Mel-Frequency Cepstral Coefficients) and SF (spectral flatness) sound features. Precision, recall, and F1 score were examined as performance measures.
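Spectral flatness (SF) is the ratio of the geometric mean to the arithmetic mean of a frame’s power spectrum: it is close to 0 for tonal sounds and larger for noise-like sounds. The sketch below computes it for a single frame with a direct DFT in pure Python; the frame length, the small `eps` floor, and the test signals are assumptions for illustration, and libraries such as librosa offer `librosa.feature.spectral_flatness` for frame-wise computation on real recordings.

```python
import cmath
import math
import random

def power_spectrum(frame):
    """Power spectrum |X[k]|^2 for k = 0..N/2 using a direct O(N^2) DFT."""
    n = len(frame)
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        spectrum.append(abs(acc) ** 2)
    return spectrum

def spectral_flatness(frame, eps=1e-12):
    """Geometric mean / arithmetic mean of the power spectrum (between 0 and 1)."""
    power = [p + eps for p in power_spectrum(frame)]  # eps avoids log(0)
    log_mean = sum(math.log(p) for p in power) / len(power)
    return math.exp(log_mean) / (sum(power) / len(power))

# A pure tone (exactly 8 cycles in 256 samples) versus Gaussian white noise.
tone = [math.sin(2 * math.pi * 8 * t / 256) for t in range(256)]
rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(256)]
print(f"tone: {spectral_flatness(tone):.4f}, noise: {spectral_flatness(noise):.4f}")
```

The tone concentrates its energy in one bin and scores near zero, whereas the noise spreads energy across all bins and scores much higher.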

The poor performance of the other methods on minority classes is likely due to class imbalance in the dataset. Traditional models such as random forest (RF), k-nearest neighbors (KNN), and One-vs.-All tend to favor majority classes, and the handcrafted features they rely on might not sufficiently capture the subtle differences between cry categories. When the dataset is imbalanced, these models often learn to optimize for the dominant class, which leads to a significant drop in performance on underrepresented classes.

Furthermore, traditional machine learning methods struggle with the complex and high-dimensional nature of baby cry sounds, which exhibit intricate variations in frequency, amplitude, and temporal patterns. These models typically depend on predefined statistical features, such as MFCCs or spectral features, which may not be expressive enough to distinguish minority classes effectively.

On the other hand, deep learning models, particularly artificial neural networks (ANNs), have the advantage of learning hierarchical and abstract feature representations directly from the data. By leveraging advanced optimization techniques and neural architectures, these models are able to capture nuanced differences between cry types, even in cases where certain classes have fewer training samples.

Additionally, our proposed method incorporates grid search (GS) for hyperparameter tuning, data augmentation (DA) to mitigate class imbalance, and MFCC-based feature extraction, all of which contribute to its superior performance. The ANN’s ability to generalize well across different cry types, despite data imbalance, further highlights its effectiveness in this classification task. Future improvements could involve employing more sophisticated augmentation techniques or adaptive loss functions to further enhance performance across all classes.

The highest accuracy (90%) and F1 score (90%) were achieved by the proposed method, the tuned artificial neural network (ANN), which showed higher classification success than the other methods. Therefore, the proposed model was selected as the most appropriate solution for classifying baby sounds.

The 90% accuracy obtained was compared with the best-known result in the literature. According to the results in Table 7, the proposed method outperformed the most successful previous result under the same data split.

The similarities and differences between the proposed method for classifying baby sounds and existing studies that use the same feature extraction process are shown in Table 8.

In this study, the performance of the proposed method was evaluated in detail using a confusion matrix, learning curves, and ROC curves. The confusion matrix for the test data is given in Figure 5, the learning curves across epochs in Figure 6, and the ROC curves in Figure 7.

The confusion matrix was used to evaluate the model’s class-wise accuracy and error rates on the test data. Most samples were correctly classified, with particularly high counts of correct classifications for belly pain, burping, and discomfort (31, 16, and 49, respectively). Significant confusion was observed between hungry and tired: hungry was misclassified as tired in 5 examples, and tired was predicted as hungry in 7 examples. This suggests that the features of these two classes can be quite similar.
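Per-class precision, recall, and F1 score, the measures reported in Table 6, follow directly from a confusion matrix: precision divides the diagonal entry by its column sum, and recall divides it by its row sum. The sketch below uses a hypothetical 3-class matrix with made-up counts, not the values from Figure 5, and assumes rows are true classes and columns are predicted classes.

```python
def per_class_metrics(matrix, labels):
    """Return {label: (precision, recall, f1)} from a square confusion matrix.

    Rows are true classes, columns are predicted classes.
    """
    n = len(labels)
    results = {}
    for i, label in enumerate(labels):
        tp = matrix[i][i]
        predicted = sum(matrix[r][i] for r in range(n))  # column sum
        actual = sum(matrix[i])                           # row sum
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        results[label] = (precision, recall, f1)
    return results

# Hypothetical counts for illustration only.
labels = ["class_a", "class_b", "class_c"]
matrix = [
    [8, 2, 0],
    [1, 9, 0],
    [0, 0, 10],
]
for label, (p, r, f) in per_class_metrics(matrix, labels).items():
    print(f"{label}: precision={p:.2f} recall={r:.2f} f1={f:.2f}")
```

Off-diagonal pairs such as the 2 and 1 between `class_a` and `class_b` play the same role as the hungry/tired confusions described above: they lower both classes’ scores at once.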

The learning curves in Figure 6 show the training and validation accuracy of the model across epochs. On the training data, the model learned rapidly and reached an accuracy of approximately 90%. The validation accuracy was initially low (30%) but increased over time to approximately 60%. The large gap between training and validation accuracy indicates that the model is prone to overfitting and generalizes poorly to the validation data.

The ROC curves in Figure 7 were used to evaluate the model’s ability to discriminate between classes; they were computed with the One-vs-Rest (OvR) approach. The AUC values are high for all classes (0.99 for belly pain, 1.00 for burping, 0.99 for discomfort, 0.95 for hungry, and 0.98 for tired), which indicates good discrimination overall.

The slightly lower AUC values for hungry and tired (0.95 and 0.98, respectively) are consistent with the confusion between these classes. Feature engineering and/or dataset balancing are recommended to separate these classes better.
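The One-vs-Rest AUC treats each class in turn as positive and all other classes as negative. Each per-class AUC equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one (the Mann–Whitney formulation, with ties counted as one half). The sketch below implements this in pure Python on hypothetical predicted probabilities for three classes; it is not the evaluation code used for Figure 7.

```python
def binary_auc(scores, positives):
    """ROC AUC via pairwise comparisons (Mann-Whitney), ties counted as 1/2."""
    pos = [s for s, y in zip(scores, positives) if y]
    neg = [s for s, y in zip(scores, positives) if not y]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))

def one_vs_rest_auc(probabilities, true_labels, n_classes):
    """Per-class AUC: class c is positive, every other class is negative."""
    return [
        binary_auc([row[c] for row in probabilities],
                   [label == c for label in true_labels])
        for c in range(n_classes)
    ]

# Hypothetical model outputs (one row of class probabilities per sample).
probs = [
    [0.7, 0.2, 0.1],
    [0.3, 0.6, 0.1],
    [0.2, 0.3, 0.5],
    [0.5, 0.4, 0.1],
    [0.1, 0.2, 0.7],
    [0.4, 0.35, 0.25],
]
labels = [0, 1, 2, 1, 2, 0]
print(one_vs_rest_auc(probs, labels, 3))  # → [0.875, 1.0, 1.0]
```

Class 0 loses one of its eight positive/negative comparisons (0.4 against 0.5), which is the kind of overlap that pulls an AUC slightly below 1.0, as with hungry and tired above.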

To analyze the robustness of the proposed method, 10-fold cross-validation was also applied. The dataset was divided into 10 subsets (folds), and the model was trained and tested on each of them in turn. After training in each fold, predictions were made on the held-out data and the predicted class labels were stored. Finally, the model’s overall performance was calculated by combining the results of all folds.

These results show the model’s performance across classes and measure its classification quality. Model accuracy is reported as the average accuracy obtained from cross-validation: 10-fold cross-validation yielded an accuracy of 87%.
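The cross-validation procedure described above, in which each sample is held out exactly once and the stored out-of-fold predictions are combined into one score, can be sketched as follows. `fit` and `predict` are hypothetical placeholders for training and applying any classifier; the toy nearest-centroid model and the one-dimensional data are assumptions made only so the example runs.

```python
import random

def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train, held_out) index lists; every sample is held out exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, folds[i]

def cross_validated_accuracy(features, labels, fit, predict, k=10):
    """Store out-of-fold predictions for every sample, then score them once."""
    pooled = [None] * len(labels)
    for train, held_out in k_fold_indices(len(labels), k):
        model = fit([features[i] for i in train], [labels[i] for i in train])
        for i in held_out:
            pooled[i] = predict(model, features[i])
    return sum(p == y for p, y in zip(pooled, labels)) / len(labels)

# Hypothetical toy classifier: assign each point to the nearest class mean.
def fit_centroids(xs, ys):
    sums, counts = {}, {}
    for x, y in zip(xs, ys):
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}

def predict_centroid(centroids, x):
    return min(centroids, key=lambda y: abs(x - centroids[y]))

xs = [i / 100 for i in range(100)]
ys = [int(x >= 0.5) for x in xs]
print(cross_validated_accuracy(xs, ys, fit_centroids, predict_centroid))
```

Pooling predictions before scoring gives the same result as averaging per-fold accuracies when the folds are equal in size, as they are here with 100 samples and 10 folds.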

4. Conclusions

In this paper, different artificial intelligence models were applied and tested to classify baby sounds. The performance of pre-trained VGG16, KNN, CNN, random forest, and artificial neural network (ANN) models was evaluated on the Donate a Cry dataset. Hyperparameter optimization with the grid search algorithm increased the models’ success rates. The proposed method, a five-layer artificial neural network (ANN), reached a test accuracy of 90%. This result demonstrates the effectiveness of deep learning methods in correctly classifying baby crying sounds.

The important outcomes of the study concern the effectiveness of artificial intelligence models, data processing techniques, and augmentation methods. The proposed method achieved high accuracy in classifying baby sounds. In addition, Mel-Frequency Cepstral Coefficients (MFCCs) together with time-domain and spectral-domain features significantly increased classification performance. Data augmentation techniques such as time shifting, pitch shifting, and white noise addition enriched the dataset and strengthened the model’s generalization ability.
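Two of the augmentation techniques named above can be sketched on a raw waveform stored as a list of samples. This is a minimal illustration under stated assumptions: the time shift is circular (wrapping samples around rather than zero-padding), the noise level is set by a target signal-to-noise ratio in dB, and the 16 kHz sample rate and 440 Hz test tone are hypothetical. Pitch shifting that preserves duration needs a phase vocoder or similar method (for example `librosa.effects.pitch_shift`), so it is omitted here.

```python
import math
import random

def time_shift(signal, shift):
    """Circularly shift the waveform by `shift` samples (positive = delay)."""
    if not signal:
        return []
    shift %= len(signal)
    return signal[-shift:] + signal[:-shift] if shift else list(signal)

def add_white_noise(signal, snr_db, seed=None):
    """Add Gaussian white noise scaled to a target signal-to-noise ratio in dB."""
    if not signal:
        return []
    rng = random.Random(seed)
    signal_power = sum(x * x for x in signal) / len(signal)
    noise_power = signal_power / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_power)
    return [x + rng.gauss(0.0, sigma) for x in signal]

# Hypothetical 1-second, 440 Hz tone at an assumed 16 kHz sample rate.
clean = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(16000)]
shifted = time_shift(clean, 1600)                  # delay by 0.1 s
noisy = add_white_noise(clean, snr_db=20, seed=0)  # noise power ≈ 1% of signal power
```

Both transforms keep the class label unchanged, which is what allows augmented copies to enlarge under-represented classes.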

The results of this study have potential applications in health services and infant care. In particular, they can contribute to the development of real-time systems that can analyze baby crying sounds to provide fast and accurate information to parents or healthcare professionals.

Future work can focus on testing models using larger datasets and developing systems optimized for real-time applications. Furthermore, using different acoustic features and more advanced deep learning architectures can further improve classification performance.