Quantitative Evaluation: Table 1 summarizes the PSNR, SSIM, and FID results across six complexity levels. These metrics evaluate reconstruction accuracy, structural similarity, and perceptual quality, respectively. The dataset is categorized into very low, low, medium, high, very high, and extreme complexity levels based on keypoint density, texture variance, and edge density.

In very low complexity scenarios, where distortions are minimal, the proposed method (DCAN) achieves a PSNR of 24.64 dB, exceeding the next-best performer, QueryCDR, by 1.32 dB. The SSIM of 0.9199 is a 3.03% improvement over QueryCDR, and the FID of 27.7 is lower than the 28.3 recorded by QueryCDR, indicating better perceptual quality. At the low complexity level, DCAN achieves a PSNR of 24.58 dB and an SSIM of 0.9159, improvements of 1.62 dB over QueryCDR and 5.71% over PCN, respectively. The FID of 27.8 further highlights the perceptual advantage, with QueryCDR recording a higher value of 28.9. In medium complexity scenarios, DCAN achieves a PSNR of 24.43 dB, an SSIM of 0.9191, and an FID of 28.1. Compared with SimFIR, which records a PSNR of 21.84 dB and an SSIM of 0.8411, these are improvements of 11.85% and 9.27%, respectively. The 6.03% reduction in FID compared with QueryCDR further demonstrates robustness in handling moderate distortions.

At the high complexity level, the proposed method achieves a PSNR of 24.47 dB and an SSIM of 0.9195, both outperforming QueryCDR, which achieves 22.79 dB and 0.7983. The FID of 28.0, lower than the 30.1 achieved by QueryCDR, shows that the proposed method preserves perceptual fidelity in challenging conditions. At the very high complexity level, DCAN obtains a PSNR of 24.52 dB, an SSIM of 0.9189, and an FID of 28.2, maintaining a consistent advantage over competing approaches. Compared with SimFIR, the proposed method achieves an SSIM improvement of more than 10.37%, demonstrating its effectiveness in preserving structural details even under significant distortion. At the extreme complexity level, the proposed method achieves a PSNR of 24.41 dB, an SSIM of 0.9186, and an FID of 28.6. These results exceed the corresponding values of QueryCDR, which records a PSNR of 22.86 dB, an SSIM of 0.7895, and an FID of 30.9, further validating the adaptability and robustness of the proposed method.

Across all complexity levels, the proposed method attains an average PSNR of 24.51 dB, a 6.75% improvement over the 22.96 dB average of QueryCDR. Similarly, the average SSIM is 0.9187, an enhancement of 6.98% compared with PCN, which achieves an average SSIM of 0.8587. The average FID of 28.1 is also better than the 29.9 recorded by QueryCDR, a perceptual improvement of 6.02%. These results highlight the effectiveness of the feature fusion mechanisms and structural preservation strategies employed by DCAN. Unlike fixed-pattern approaches such as QueryCDR, DCAN dynamically adjusts to varying levels of distortion, which yields higher reconstruction accuracy and perceptual quality. This adaptability makes the proposed method well suited for real-world applications in diverse and challenging scenarios.
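
The averages and relative improvements quoted above can be rechecked from the per-level figures given in the text. The following is a minimal sketch of that arithmetic, not part of the authors' evaluation code: the helper names (`mean`, `relative_gain`, `agrees`) are illustrative, and the tolerances are assumptions chosen to absorb the rounding of the published values. For FID, where lower is better, the gain is taken as the reduction relative to the baseline.

```python
# Per-level DCAN scores quoted in the text, ordered from very low to extreme complexity.
DCAN_PSNR = [24.64, 24.58, 24.43, 24.47, 24.52, 24.41]
DCAN_SSIM = [0.9199, 0.9159, 0.9191, 0.9195, 0.9189, 0.9186]
DCAN_FID = [27.7, 27.8, 28.1, 28.0, 28.2, 28.6]


def mean(values):
    """Arithmetic mean of a non-empty sequence."""
    return sum(values) / len(values)


def relative_gain(ours, baseline, higher_is_better=True):
    """Improvement of `ours` over `baseline`, in percent of the baseline."""
    diff = ours - baseline if higher_is_better else baseline - ours
    return 100.0 * diff / baseline


def agrees(computed, reported, tol):
    """True if a recomputed value matches the reported one within `tol`."""
    return abs(computed - reported) <= tol


# (label, recomputed value, value reported in the text, assumed tolerance)
checks = [
    ("average PSNR (dB)", mean(DCAN_PSNR), 24.51, 0.005),
    ("average SSIM", mean(DCAN_SSIM), 0.9187, 0.0001),
    ("average FID", mean(DCAN_FID), 28.1, 0.05),
    ("avg PSNR gain vs QueryCDR (%)", relative_gain(24.51, 22.96), 6.75, 0.02),
    ("avg SSIM gain vs PCN (%)", relative_gain(0.9187, 0.8587), 6.98, 0.02),
    ("avg FID gain vs QueryCDR (%)",
     relative_gain(28.1, 29.9, higher_is_better=False), 6.02, 0.02),
    ("medium PSNR gain vs SimFIR (%)", relative_gain(24.43, 21.84), 11.85, 0.02),
    ("medium SSIM gain vs SimFIR (%)", relative_gain(0.9191, 0.8411), 9.27, 0.02),
]

for label, computed, reported, tol in checks:
    status = "ok" if agrees(computed, reported, tol) else "MISMATCH"
    print(f"{label}: computed {computed:.4f}, reported {reported} -> {status}")
```

Comparing within a tolerance rather than on rounded strings avoids false mismatches where a recomputed value sits on a rounding boundary, as the average SSIM does here.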

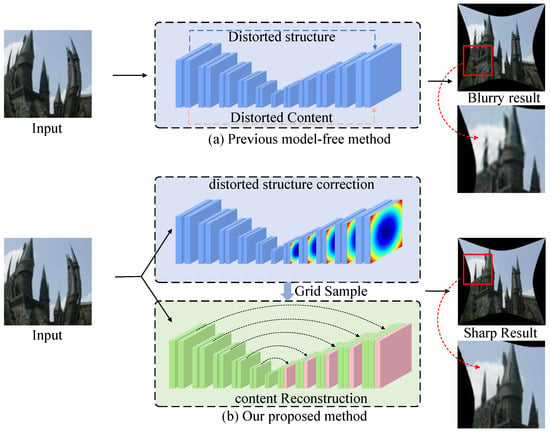

Qualitative Evaluation: In this section, we visualize the images corrected by the various algorithms on our synthetic dataset to provide a visual comparison. As Figure 4 illustrates, the learning-based methods SimFIR, DeepCalib, DR-GAN, PCN, and QueryCDR achieve improved visual correction thanks to the global semantic features supplied by neural networks. However, these approaches have difficulty recovering appropriate distributions from severely distorted images because their learning strategies are simple and inadequate. SimFIR corrects the distorted parts of the image, although physical objects often appear smaller than in the ground truth image and some edge information is lost as a result. DeepCalib [28] corrects the inner areas of the image well but degrades in the boundary regions. DR-GAN is limited by the inherent properties of its network, and the images it generates show blurring. PCN frequently yields overcorrected results and struggles to adjust flexibly to varying degrees of distortion.

Additionally, Figures 5, 6, and 7 provide a comprehensive comparison with SimFIR, PCN, and QueryCDR. The advantages of DCAN over SimFIR and PCN are clearly visible in these comparisons. To further highlight the differences between DCAN and QueryCDR, we add bounding boxes to the corrected images: green boxes mark areas with high-quality correction results, while red boxes indicate regions where correction performance is less satisfactory. These visual comparisons illustrate the strengths of DCAN in recovering fine details and achieving consistent correction across the entire image. The results show that DCAN achieves better correction quality than the other methods, retaining fine details and avoiding excessive smoothing. This indicates that DCAN not only prevents information loss and blurring but also recovers a high level of detail in the corrected images. Moreover, in the qualitative evaluations, DCAN outperforms most of the compared approaches and closely approximates the ground truth.

To comprehensively evaluate the performance of the different fisheye correction methods, we use both objective metrics and subjective assessments. Figure 9a presents the average PSNR, SSIM, and FID of the various methods, where higher PSNR and SSIM values indicate better image fidelity and lower FID scores reflect more realistic and visually consistent results. For the subjective evaluation, a voting experiment is conducted with ten volunteers, all of whom have a strong background in image processing and are familiar with the image content, which helps ensure reliable feedback. A total of 200 fisheye images are randomly selected from diverse scenes, including campus, street, and indoor environments, and are rectified using the different methods. To ensure fairness, the images are divided into ten groups of 20 images each, with their order randomized to avoid bias. Each group is evaluated by a single volunteer, who rates the rectified images on a scale of 0 (worst) to 5 (best). As shown in Figure 9b, DCAN achieves the highest subjective ratings among all approaches.
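
The grouping step of the voting experiment can be sketched as follows. This is a minimal illustration under stated assumptions rather than the authors' protocol code: the function names `assign_groups` and `mean_rating`, the integer image identifiers, and the fixed random seed are all hypothetical, and only the group sizes (200 images, ten groups of 20) and the 0 to 5 rating scale come from the text.

```python
import random


def assign_groups(image_ids, n_groups, seed=0):
    """Shuffle the image IDs and split them into equally sized groups.

    The seed is an assumption added for reproducibility.
    """
    ids = list(image_ids)
    if n_groups <= 0 or len(ids) % n_groups != 0:
        raise ValueError("images must divide evenly into the groups")
    rng = random.Random(seed)
    rng.shuffle(ids)  # randomized order, to avoid presentation bias
    size = len(ids) // n_groups
    return [ids[i * size:(i + 1) * size] for i in range(n_groups)]


def mean_rating(ratings, low=0, high=5):
    """Average rating for one method, rejecting scores outside the scale."""
    if not ratings:
        raise ValueError("no ratings given")
    if any(r < low or r > high for r in ratings):
        raise ValueError(f"ratings must lie in [{low}, {high}]")
    return sum(ratings) / len(ratings)


# 200 images, ten groups of 20, one group per volunteer (figures from the text).
groups = assign_groups(range(200), n_groups=10)
print(len(groups), [len(g) for g in groups])
```

Each image lands in exactly one group, so every volunteer sees a disjoint, randomly ordered set; the per-method score is then the mean of the ratings collected for that method.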


Jianhua Zhang www.mdpi.com