1. Introduction

New digital technologies have enabled extraordinary advances in various scientific and technological fields over the last two decades [1,2,3,4,5]. In particular, the exponential growth in the volume of data requires enormous computational resources, and machine learning techniques are widely employed to process these data [6]. In this scenario, data are generated and processed continuously, often by artificial intelligence algorithms [7]. Indeed, classical artificial intelligence models (those operating in the electronic domain) have attracted strong academic interest in recent years, with applications in healthcare [8], autonomous vehicles [9], agriculture [10], machine fault diagnosis [11], intrusion detection systems [12], the Internet of Things [13], fundamental physics studies [14], education [15], battery management systems [16], speech recognition [17], and optical communications [18], among other fields.

In optical signal equalization, the focus of this review, classical machine learning techniques have been widely employed over the last two decades, since artificial intelligence algorithms can learn an approximate inverse of the channel propagation function and thus mitigate the distortions imposed on the transmitted signals. Such investigations include complex-valued minimal radial basis function neural networks [19], feedforward neural networks [20], reservoir computing [21,22], multi-layer perceptron networks [23], long short-term memory recurrent neural networks [24], recurrent neural networks [25], bidirectional long short-term memory neural networks [26], and adaptive Bayesian neural networks [27], among several other neural network architectures [28,29,30]. Specifically, multi-layer perceptron equalizers generally perform better than decision feedback equalizers. However, their training can become trapped in local minima [31,32] and converges slowly. On the other hand, complex-valued and Chebyshev neural networks have proven efficient in equalizing quadrature amplitude modulation signals, with faster convergence [33,34]. Moreover, recurrent neural networks have been reported to outperform feedforward neural networks in this task [28]. From this perspective, end-to-end artificial intelligence architectures have also been investigated [35]. Despite these advances, the major drawback of classical artificial intelligence models employed in signal equalization is their high computational complexity and the extensive training they require [30,36].

In this context, the impact of the complexity of neural networks employed to equalize optical communication signals has also been investigated, showing that performance worsens when the complexity of the neural network is reduced [26,37,38]. In recent years, compression strategies that tune the hyperparameters of classical artificial intelligence algorithms to minimize their complexity have been studied extensively. These compression approaches include pruning, which removes parameters (neurons, layers, and other parts of the neural network structure) that contribute little to the neural network’s performance [39,40,41,42,43,44,45,46,47]; weight clustering, which reduces the number of distinct weight values [48,49,50]; and quantization, which reduces the bit width of the numbers used in arithmetic operations [51,52,53,54,55]. In particular, Ron et al. experimentally demonstrated a multi-layer perceptron equalizer that applies pruning and quantization to reduce memory and complexity [56]. Despite these efforts over the last few years to reduce the computational complexity of classical neural networks, the performance–complexity trade-off remains an open problem, since processing large amounts of data with classical artificial intelligence models requires high computational resources [30,57].

To overcome the current limitations of classical neural networks operating in the electronic domain, integrated photonic neural networks have received much attention in recent years as a potentially groundbreaking platform for artificial intelligence [58,59,60,61,62,63,64,65]. In particular, photonic neural networks operate in the optical domain and are energy-efficient, noise-resilient, and fast [66,67,68], opening possibilities for real-time applications, even in the most critical scenarios [69]. At the same time, their integration on a chip paves the way for cost-effective manufacturing [60]. In terms of applications, photonic neural networks have recently been applied to image classification [70], pattern recognition [71], and image detection [72].

Photonic neural networks have also been successfully employed in optical communications to compensate for distortions in signals transmitted through optical fiber links. These investigations cover signals with different modulation formats, with data rates ranging from 10 Gb/s to 40 Gb/s over transmission distances ranging from 15 km to 125 km [73,74,75,76,77,78,79,80,81]. The main neural network architectures employed to date for equalizing optical communication signals are reservoir computing and the perceptron. The main difference between perceptron and reservoir photonic neural networks lies in their architectural design, which directly affects their ability to handle specific signal equalization tasks. In the perceptron, all weights of the neural network structure are adjusted during the training phase. In photonic reservoir computing, on the other hand, only the output layer weights are adjusted, which can be achieved through a simple linear regression, making training fast and simple. As a result, the perceptron architecture is more controllable than reservoir computing architectures, since all of its weights are learned for the specific task. Regarding training, ridge regression, the particle swarm optimizer, and the Adam optimizer are the most widely used training algorithms for photonic neural networks that equalize optical communication signals, delivering bit error rates (BERs) ranging from 1 × … to 1 × … [73,74,75,76,77,78,79,80,81]. A detailed discussion of these works is given in Section 5 of this review.

In this scenario, this paper discusses the emergence of photonic neural networks as a promising alternative for equalizing optical communication signals distorted by the complex dynamics of the transmission channel. The remainder of this paper is organized as follows:

Section 2 introduces the principles and main architectures of the photonic neural networks employed as equalizers.

Section 3 addresses the training algorithms of the photonic neural networks for equalizing optical communication signals.

Section 4 explains the main building blocks of photonic neural networks.

Section 5 presents the photonic neural networks recently built for equalizing optical signals and summarizes their main features for this application.

Section 6 presents the concluding remarks.

2. Fundamentals of Photonic Neural Networks Working as Equalizers

Photonic neural networks belong to a research field that explores the use of photonics to build artificial neural networks on integrated circuits [82]. Unlike classical neural networks, which use conventional electronics for data processing, photonic neural networks employ optical components, such as lasers, optical fibers, waveguides, and photonic integrated circuits, to perform computations and transmit information [83]. Classical artificial neural networks operating in the electronic domain were inspired by biological neural connections and are learning models employed primarily to build a predictive model from a large amount of input data [84]. Specifically, each neuron receives input information from other neurons or external stimuli, processes this information, and provides a transformed output signal to other neurons or to external outputs [85]. Thus, classical neural networks can efficiently learn the patterns that relate input data sets to their target values [85]. After the training stage, these neural networks can be applied to new, independent input data to predict the system dynamics with high accuracy [86]. Photonic neural networks aim to perform these same steps using light.

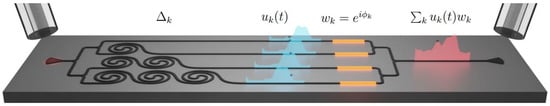

The most basic photonic neural network structure recently employed as an equalizer is the perceptron, a photonic neural network with only one layer in which information flows in a single direction, from input to output, without any feedback connection. An integrated photonic perceptron composed of delay lines and phase modulators was realized in 2022 by Mancinelli et al. [76] and is schematically illustrated in Figure 1.

In the device depicted in Figure 1, an optical input signal is split into four copies, which propagate in four waveguides that are spiral-wound to act as delay lines, so that each copy of the input signal is delayed. Thermal heaters (yellow lines) placed above the waveguides heat them and thus impart a specific phase to each delayed copy (phase shifters). In this way, the weights of the network are encoded in these phases and assigned to the delayed copies. Finally, the delayed copies are weighted and summed coherently by a combiner, and the output signal is collected by a fiber and detected by a photodetector. The output of the photonic neural network is therefore the coherent, phase-weighted sum of the copies of the input signal, each delayed by the time delay of its delay line, as described mathematically in [76].

Photonic neural networks can also be used as equalizers within a framework called reservoir computing, which is a simplification of a classical recurrent neural network [79]. Recurrent neural networks are distinguished by their memory capability, since their current output depends not only on the current input but also on information from previous inputs [87]. Specifically, photonic reservoir computing comprises three parts: the input layer, the reservoir (hidden layer), and the output layer. The weights of the input layer and the reservoir are randomly selected and kept fixed, and the connections in the reservoir are recurrent, as illustrated in Figure 2. Only the weights of the output layer are adjusted, which can be achieved through a simple linear regression, making the training process fast and simple. The output of photonic reservoir computing is then a linear combination of the reservoir states weighted by the output layer weights, where the reservoir state at time instant t is determined by the input signal, a constant b, and the matrices containing the parameters of the input layer, the reservoir, and the feedback connections [88].
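The delay-line perceptron of Figure 1 behaves like an optical finite-impulse-response filter with phase-only weights. The sketch below assumes an output field $E_{\text{out}}(t) = \frac{1}{N}\sum_{k=0}^{N-1} e^{i\varphi_k} E_{\text{in}}(t - k\Delta t)$ detected as $|E_{\text{out}}|^2$, with ideal equal splitting and a uniform delay step $\Delta t$. These are illustrative assumptions rather than the exact expression of [76], and the function name `delay_line_perceptron` is hypothetical.

```python
import numpy as np

def delay_line_perceptron(e_in, phases, delay_samples):
    """Phase-weighted coherent sum of delayed copies, then square-law detection.

    e_in: complex optical field samples at the chip input.
    phases: one phase (rad) per delay line; the weights are w_k = exp(1j*phi_k).
    delay_samples: delay step between consecutive lines, in samples.
    Ideal 1:N splitting and N:1 combining give an overall 1/N field factor (assumption).
    """
    e_in = np.asarray(e_in, dtype=complex)
    n_lines = len(phases)
    e_out = np.zeros_like(e_in)
    for k, phi in enumerate(phases):
        shift = k * delay_samples
        if shift >= len(e_in):
            continue  # this copy arrives after the observation window
        delayed = np.zeros_like(e_in)
        delayed[shift:] = e_in[:len(e_in) - shift]  # zero-padded delay line
        e_out += np.exp(1j * phi) * delayed / n_lines
    return np.abs(e_out) ** 2  # the photodetector responds to optical power

# Example: 8 bits at 4 samples per bit, delay step equal to one bit period.
bits = np.array([0, 1, 1, 0, 1, 0, 0, 1])
field = np.repeat(bits, 4).astype(complex)
power = delay_line_perceptron(field, phases=[0.0, 0.0, np.pi, np.pi], delay_samples=4)
```

With the delay step equal to one bit period, each output sample depends on the current bit and the preceding N − 1 bits. Because the spiral lengths fix this step at fabrication, such a device is naturally tied to one data rate, while training only adjusts the phases.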

Specifically, Figure 3 shows the architecture of an integrated photonic reservoir computing system for equalizing optical communication signals [73]. First, the direct bit detection process is recast as a pattern recognition problem, and the detected patterns are used to train a linear classifier. This photonic reservoir computing system uses a delay loop divided into intervals that define the virtual nodes of the neural network. The input patterns are multiplied by a random mask and then enter the reservoir [73]. Next, the virtual node values are combined using optimal weights determined by ridge regression. Finally, the prediction output is the sum of the weighted reservoir responses [73].
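The delay-loop reservoir with a random input mask and a ridge-regression readout can be illustrated with a minimal discrete-time model. The node update (fixed ±1 mask, ring coupling between neighbouring virtual nodes, tanh nonlinearity), the toy intersymbol-interference channel, and all helper names are assumptions made for illustration; they are not the device model of [73]. Only `train_readout` learns anything, mirroring the fact that only the output weights are trained.

```python
import numpy as np

def reservoir_states(u, n_nodes=20, input_scale=0.5, feedback=0.8, seed=0):
    """Simplified time-multiplexed reservoir (hypothetical model).

    Each input sample u[n] is multiplied by a fixed random +/-1 mask, one value
    per virtual node; virtual node i also receives the previous state of node
    i-1 (ring coupling along the delay loop) before a tanh nonlinearity.
    """
    rng = np.random.default_rng(seed)
    mask = rng.choice([-1.0, 1.0], size=n_nodes)  # fixed input weights, never trained
    states = np.zeros((len(u), n_nodes))
    prev = np.zeros(n_nodes)
    for n, sample in enumerate(u):
        coupled = np.roll(prev, 1)  # node i sees node i-1 from the previous round trip
        prev = np.tanh(input_scale * mask * sample + feedback * coupled)
        states[n] = prev
    return states

def _with_bias(states):
    return np.hstack([states, np.ones((len(states), 1))])

def train_readout(states, target, ridge=1e-6):
    """Ridge regression for the output weights: (X^T X + ridge*I) w = X^T y."""
    X = _with_bias(states)
    A = X.T @ X + ridge * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ target)

def predict(states, w):
    return _with_bias(states) @ w

# Usage on a toy channel with intersymbol interference (illustrative values).
rng = np.random.default_rng(1)
bits = rng.integers(0, 2, 2000)
received = bits + 0.6 * np.roll(bits, 1) + 0.05 * rng.standard_normal(bits.size)
S = reservoir_states(received)
w = train_readout(S[:1500], bits[:1500])
decisions = (predict(S[1500:], w) > 0.5).astype(int)
ber = np.mean(decisions != bits[1500:])
```

The readout is a single linear solve, which is why reservoir training is fast compared with optimizing every weight of the network.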

3. Training Algorithms of Photonic Neural Networks for Equalizing Communication Signals

One obstacle faced by photonic neural networks is that their training stage must still be performed in the electronic domain [89]. Training is an optimization process in which the neural network’s parameters are adjusted so that the network can afterwards perform a specific task. To date, the particle swarm optimizer (PSO) has been the most widely used training algorithm for photonic neural networks applied to the equalization of optical communication signals [76,78,79,80,81]. The PSO was introduced by Kennedy and Eberhart in 1995 [90], initially motivated by the behavior of bird flocks searching for food. The PSO and its variants have since been successfully applied to real-life problems and engineering design optimization because of their simplicity and versatility [91]. For instance, the PSO has been applied to enhance the efficiency and precision of support vector machine classifiers [92], to predict hydrogen yield from gasification operations [93], for cardiovascular disease prediction [94], for early detection of forest fires [95], and for gas detection [96], among other applications [97,98]. Its fundamental principles are described below. In this optimizer, individuals are called particles and fly through the search space, looking for the position that minimizes (or maximizes) a given fitness function [98]. To find the best solution, the movement of each particle towards its own best previous position ($P_{\text{best},i}$) and towards the global best position of the swarm ($G_{\text{best}}$) must be calculated. For a minimization problem, these positions are updated as [91]

$$P_{\text{best},i}(t+1) = \begin{cases} P_i(t+1), & \text{if } f\big(P_i(t+1)\big) < f\big(P_{\text{best},i}(t)\big) \\ P_{\text{best},i}(t), & \text{otherwise} \end{cases}$$

$$G_{\text{best}}(t+1) = \underset{P_{\text{best},i}(t+1),\; i = 1, \dots, N}{\arg\min} \; f\big(P_{\text{best},i}(t+1)\big)$$

where $i$ is the particle index, $N$ the number of particles, $t$ the current iteration, $f$ the fitness function, and $P$ the position. The velocity $V$ and the position $P$ of each particle are updated, respectively, by [91]

$$V_i(t+1) = \omega V_i(t) + c_1 r_1 \big[P_{\text{best},i}(t) - P_i(t)\big] + c_2 r_2 \big[G_{\text{best}}(t) - P_i(t)\big]$$

$$P_i(t+1) = P_i(t) + V_i(t+1)$$

where $\omega$ is the inertia weight, which balances global exploration and local exploitation, $r_1$ and $r_2$ are random numbers drawn uniformly from [0, 1], and $c_1$ and $c_2$ are positive constants called acceleration coefficients [91]. For photonic neural networks based on reservoir computing, in contrast, only the output layer weights are adjusted, so training can be performed through a simple linear regression, which is fast and simple [75].
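The update equations above translate directly into a few lines of NumPy. The sketch below is a generic global-best PSO for minimization; the constriction-type values ω ≈ 0.7298 and c1 = c2 ≈ 1.49618 are a common choice assumed here, not the settings used in [76,78,79,80,81]. Positions are additionally clipped to box bounds (not part of the equations above), which in a photonic setup could represent the allowed heater currents. The function name `pso_minimize` is hypothetical.

```python
import numpy as np

def pso_minimize(f, bounds, n_particles=30, n_iter=200,
                 omega=0.7298, c1=1.49618, c2=1.49618, seed=0):
    """Global-best particle swarm optimization (minimization).

    f: fitness function mapping a position vector to a scalar.
    bounds: sequence of (low, high) pairs, one per dimension.
    """
    rng = np.random.default_rng(seed)
    lo, hi = np.asarray(bounds, dtype=float).T
    dim = lo.size
    P = rng.uniform(lo, hi, size=(n_particles, dim))  # positions P_i
    V = np.zeros_like(P)                               # velocities V_i
    p_best = P.copy()                                  # personal bests P_best,i
    p_best_val = np.array([f(p) for p in P])
    g_best = p_best[np.argmin(p_best_val)].copy()      # global best G_best
    for _ in range(n_iter):
        r1 = rng.uniform(size=(n_particles, dim))
        r2 = rng.uniform(size=(n_particles, dim))
        V = omega * V + c1 * r1 * (p_best - P) + c2 * r2 * (g_best - P)
        P = np.clip(P + V, lo, hi)                     # stay inside the allowed range
        vals = np.array([f(p) for p in P])
        improved = vals < p_best_val                   # personal-best update rule
        p_best[improved] = P[improved]
        p_best_val[improved] = vals[improved]
        g_best = p_best[np.argmin(p_best_val)].copy()  # global-best update rule
    return g_best, float(p_best_val.min())
```

In a hardware-in-the-loop setting, `f` would wrap a measurement: apply the candidate currents, acquire a trace, and return a loss value. Each evaluation is then slow, which is one reason the number of particles and iterations matters in practice.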

As an example of how the training stage, still performed in the electrical domain, is carried out in practice, consider the work of Marciano et al., in which the PSO was employed to train a photonic neural network for chromatic dispersion precompensation [81]. Training is carried out by a computer that processes the output signal and regulates a current controller according to the PSO algorithm. The typical training time is a few tens of seconds and is mainly limited by the data exchange between the oscilloscope and the computer. Specifically, for each experimental data acquisition, a current generator applies an array of preset, constant-amplitude currents to the photonic neural network to set its weights. A triggering signal is then sent to the oscilloscope to acquire the resulting periodic trace at the end-of-line receiver [81]. The output trace is aligned, using cross-correlation, with the digital target sequence, obtained as a periodic version of the digital input sequence. Each aligned output trace is fed to the loss function that estimates the transmission quality, and the loss value is passed to the PSO algorithm to train the photonic neural network [81]. The PSO iterates these steps until error-free operation is achieved or the maximum number of iterations is reached. Every trace provided to the photonic neural network during training is unique, since each is corrupted by a different realization of noise. After training, the optimal currents found are used in the testing phase, in which new traces are acquired and processed.
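The alignment step can be sketched with an FFT-based circular cross-correlation, which suits periodic traces. The mean-squared-error loss below is an assumed stand-in for the transmission-quality loss of [81], and the function names are hypothetical; in a hardware-in-the-loop setup, `trace_loss` would play the role of the fitness function handed to a PSO routine.

```python
import numpy as np

def align_periodic(trace, target):
    """Circularly shift one period of a measured trace to best match the target.

    Both arrays are assumed to hold exactly one period, sampled identically.
    Returns the aligned trace and the detected lag (in samples).
    """
    trace = np.asarray(trace, dtype=float)
    target = np.asarray(target, dtype=float)
    a = trace - trace.mean()
    b = target - target.mean()
    # Circular cross-correlation: xcorr[k] = sum_m a[m + k] * b[m]
    xcorr = np.fft.ifft(np.fft.fft(a) * np.conj(np.fft.fft(b))).real
    lag = int(np.argmax(xcorr))
    return np.roll(trace, -lag), lag

def trace_loss(trace, target):
    """Assumed quality metric: mean squared error after alignment."""
    aligned, _ = align_periodic(trace, target)
    return float(np.mean((aligned - np.asarray(target, dtype=float)) ** 2))
```

Removing the means before correlating keeps a constant offset in the received power from biasing the detected lag.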

4. Building Blocks of Photonic Neural Networks

To build all-optical photonic neural networks, robust training algorithms still need to be developed in which weight adjustments are performed optically, without electro-optical conversions. This requires implementing the building blocks of photonic neural networks by optically connecting neurons and exploiting optical effects such as diffraction, refraction, interference, and scattering to precisely manipulate the amplitude, phase, and direction of light in integrated photonic circuits. Among the fundamental building blocks of photonic neural networks are activation functions, which introduce nonlinearity into the neural network’s response [89].

All-optical nonlinear activation functions are critical for the expansion of optical computing and optical neural networks, which have the potential to provide significant speed and efficiency advantages over their electronic counterparts. This nonlinearity allows the network to learn and model complex relationships between inputs and outputs [99]. Without activation functions, a neural network reduces to a linear combination of its inputs, no matter how many layers it has, which limits its ability to solve more complex problems. Activation functions are applied to the neurons of a neural network to determine, based on the weighted sum of the received inputs, whether and how strongly each neuron is activated [100]. Recent studies have successfully demonstrated optical activation functions employing silicon electro-optical switches and Ge–Si photodetectors [101], germanium–silicon hybrid asymmetric couplers [102], 2D metal carbide and nitride (MXene) materials [103], the injection-locking effect of distributed feedback laser diodes [104], the free-carrier dispersion effect [105], and semiconductor lasers [106]. For instance, Figure 4 illustrates a fully integrated photonic neural network in which an MXene saturable absorber acts as the optical nonlinearity unit. With this integrated optical structure, classification of the Modified National Institute of Standards and Technology (MNIST) database was performed with nearly 99% accuracy, as illustrated in Figure 5, confirming the effectiveness of saturable absorber materials for building all-optical nonlinear activation functions [103].
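The nonlinearity a saturable absorber provides can be illustrated with the textbook saturable-absorption model, in which the absorption coefficient falls as α0/(1 + I/Isat). The sketch below treats the absorber as optically thin (the intensity inside it is taken as constant), and the parameter values are arbitrary; it is not a model of the MXene device of [103], and the function name is hypothetical.

```python
import numpy as np

def saturable_absorber(i_in, alpha0_l=2.0, i_sat=1.0):
    """Thin saturable absorber: absorption bleaches as the input intensity grows.

    alpha0_l: small-signal optical depth (alpha_0 * L), illustrative value.
    i_sat: saturation intensity, in the same arbitrary units as i_in.
    Returns the transmitted intensity.
    """
    i_in = np.asarray(i_in, dtype=float)
    transmission = np.exp(-alpha0_l / (1.0 + i_in / i_sat))
    return transmission * i_in

x = np.linspace(0.0, 10.0, 6)
y = saturable_absorber(x)  # weak inputs are strongly attenuated, strong ones pass
```

Weak signals see the full small-signal loss exp(−α0L), while strong signals bleach the absorber and pass almost unattenuated, giving a monotonic, ReLU-like intensity response that can serve as an activation function.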

Another fundamental building block of photonic neural networks is the multiplication matrix, an optical structure that performs mathematical operations using light [107]. As illustrated in Figure 6, the main approaches for photonic matrix–vector multiplication (MVM) are plane light conversion (PLC), Mach–Zehnder interferometers (MZIs), and wavelength-division multiplexing (WDM) [107]. PLC-based matrix–vector multiplication exploits the diffraction of light: an input vector X is expanded and replicated via optical components, as illustrated in Figure 6a. At the end of this optical structure, the beams are combined and summed to form the output vector Y, given by the product of the transmission matrix W and the vector X [108]. Figure 6b displays the MZI-based multiplication matrix configuration, built on singular value decomposition and rotation submatrix decomposition methods. The WDM-based multiplication matrix depicted in Figure 6c is generally based on microring resonators [107]. For instance, Giamougiannis et al. demonstrated the tiled matrix multiplication illustrated in Figure 7 for artificial intelligence applications in integrated photonics, capable of executing linear algebra operations and reaching an accuracy of 0.636 in detecting benign and malicious attacks [109].
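The singular value decomposition route of Figure 6b rests on the factorization W = UΣV†: the unitary matrices U and V† can be realized by meshes of MZIs, and the diagonal Σ by per-channel attenuation or gain. The sketch below assumes ideal, lossless 50:50 couplers and one common MZI convention (external phase φ, internal phase θ); it checks the linear algebra only and does not compute the phase settings of a full mesh. The names `mzi` and `svd_mvm` are hypothetical.

```python
import numpy as np

# Ideal, lossless 50:50 directional coupler
BS = np.array([[1, 1j], [1j, 1]]) / np.sqrt(2)

def phase(p):
    """Phase shifter acting on the upper arm only."""
    return np.diag([np.exp(1j * p), 1.0])

def mzi(theta, phi):
    """2x2 MZI: external phase phi, coupler, internal phase theta, coupler."""
    return BS @ phase(theta) @ BS @ phase(phi)

def svd_mvm(W, x):
    """y = W x evaluated as U (Sigma (V^H x)): the order in which light would
    traverse an MZI mesh for V^H, an attenuation/gain stage, and a mesh for U."""
    U, s, Vh = np.linalg.svd(W, full_matrices=False)
    return U @ (s * (Vh @ x))
```

Under this convention, θ = 0 routes all light to the opposite port (cross state) and θ = π keeps it in the same port (bar state), so the internal phase sets the splitting ratio. For non-square W, the reduced factors would in practice be embedded in larger square meshes, and singular values above 1 require gain or a global rescaling of W.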

Other recent investigations that combine multiplication matrices, activation functions, and training stages to process optical signals from the input port to the output port also point towards all-optical photonic neural networks. For example, Basani et al. demonstrated an all-photonic accelerator that uses a single ring resonator to store information encoded in the amplitudes of its frequency modes [110]. Nonlinear optical processes enable interaction among these modes. In this work, matrix multiplication and activation functions are performed coherently on these modes, allowing negative and complex numbers to be represented directly without passing through digital electronics. This architecture thus enables on-chip analog Hamiltonian-echo backpropagation for gradient descent and other self-learning tasks [110].

Chen et al. presented an optical authentication system based on a diffractive deep neural network [111]. A unique and secure image representation at a precise distance is generated by manipulating a light beam with a public and a private key. By leveraging invisible terahertz (THz) light, the certification system offers inherent concealment and enhanced security. Specifically, a diffractive deep neural network with multiple diffraction layers is designed following this scheme, and a THz beam is employed for illumination [111]. The side length of each diffractive neuron, the size of each network layer, and the spacing between adjacent layers are chosen such that each diffraction layer contains 200 × 200 neurons. Every neuron is connected through diffraction to every neuron in the adjacent layer, yielding 6.4 billion neural connections from the input layer to the output layer. The entire certification process proposed by these authors operates solely through manipulation of the light beam, eliminating the need for electronic calculations [111]. In addition, Dehghanpour et al. recently presented a reconfigurable optical activation function based on adding or subtracting the outputs of two saturable absorbers [112]. This approach, which relies on an electrically programmable adder/subtractor design, can provide both bounded and unbounded outputs. Furthermore, by investigating the multiplication noise and the loss function, the authors obtained a vector–matrix multiplication six times more parallel than the conventional optical vector–matrix multiplication method [112].
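As a quick consistency check on the connection count reported for [111], assume that each neuron in one diffraction layer couples to every neuron in the next layer (the full-connectivity reading of the description above):

$$
\begin{aligned}
N_{\text{layer}} &= 200 \times 200 = 4 \times 10^{4}, \\
N_{\text{pair}} &= N_{\text{layer}}^{2} = \left(4 \times 10^{4}\right)^{2} = 1.6 \times 10^{9}, \\
\frac{6.4 \times 10^{9}}{N_{\text{pair}}} &= 4.
\end{aligned}
$$

Under this assumption, the 6.4 billion connections correspond to four fully connected layer-to-layer stages. The number of diffraction layers is not stated here, so this is an inference from the arithmetic rather than a reported figure.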

Integrating on-chip nonlinear activation functions, multiplication operations, and training mechanisms in the optical domain is essential for efficiently processing optical signals from input to output. Implementing activation functions remains particularly challenging because of the linear nature of optical components. However, this challenge can be overcome by employing electro-optical modulators that modulate the intensity of the optical signal based on electrical inputs. Multiplication operations, especially matrix multiplications, can be performed using optical components such as beam splitters and interferometers, in which weights and inputs are optically encoded, allowing the network to be dynamically reprogrammed and trained. Training photonic neural networks, in turn, requires parameter adjustments based on error gradients, which still relies on electrical circuits to perform backpropagation and update the weights based on the computed errors [113]. In this scenario, integrating these components for optical signal processing involves the following steps. First, input data are converted into optical signals by modulators that translate electrical signals into optical ones. Next, the optical signals undergo linear transformations, which can be performed by matrix multiplications. They are then subjected to nonlinear activation functions implemented by electro-optical modulators, which introduce the necessary nonlinearity. Finally, error gradients are calculated to adjust the optical components of the photonic neural network during training, ensuring that the desired task is learned [113].
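The four steps above (encode, multiply, activate, update) can be sketched as a hybrid loop in which the forward pass stands in for the photonic hardware and the gradient computation runs in electronics. Everything here is an illustrative assumption: tanh stands in for an electro-optical activation, the weights are an unconstrained real matrix (a real device would map them onto phases and attenuations), the teacher data are synthetic, and the function names are hypothetical.

```python
import numpy as np

def optical_forward(W, X):
    """Steps 2-3 (simulated photonics): matrix multiplication, then a saturating
    nonlinearity. tanh is a stand-in for an electro-optical activation."""
    return np.tanh(X @ W.T)

def electronic_update(W, X, T, lr=0.5):
    """Step 4 (electronics): backpropagate the squared error and update W.

    Loss = mean over samples of the summed squared output error.
    """
    Y = optical_forward(W, X)
    err = Y - T
    grad_Z = err * (1.0 - Y ** 2)         # chain rule through tanh
    grad_W = 2.0 * grad_Z.T @ X / len(X)  # d(loss)/dW
    loss = float(np.mean(np.sum(err ** 2, axis=1)))
    return W - lr * grad_W, loss

# Step 1: inputs after electro-optical encoding (normalized amplitudes).
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(200, 3))
W_teacher = np.array([[0.5, -0.3, 0.8], [-0.6, 0.2, 0.1]])  # synthetic target task
T = optical_forward(W_teacher, X)

W = np.zeros((2, 3))
losses = []
for _ in range(300):
    W, loss = electronic_update(W, X, T)
    losses.append(loss)
```

Every iteration crosses the optical/electronic boundary twice (measure the outputs, write back the weights), which is the overhead that all-optical training schemes aim to remove.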

The extraordinary advances in photonics have opened a promising new path for the next generation of artificial intelligence technologies, paved by integrated photonic devices. Among the most promising technologies and methods for advancing photonic neural networks in optical communications are microring resonators [114], cascaded-array programmable Mach–Zehnder interferometers [115], spatial light modulators and Fourier lenses [116], phase-change materials [117], microcomb sources [118], and vertical-cavity surface-emitting laser arrays [119]. In particular, phase-change materials change their optical properties in response to external stimuli, enabling reconfigurable photonic devices in which the synapses are built from optical waveguides and weighting is achieved via phase-change material cells [71]. This adaptability is crucial for creating dynamic and efficient photonic neural networks capable of processing communication signals of different formats. The most common phase-change material is the archetypal alloy [120], but other compositions are also being investigated [121]. Vertical-cavity surface-emitting laser arrays are also worth highlighting: they can operate with low energy consumption (<5 aJ per symbol) and rapid programmability, with high-speed weight updates, making them a promising candidate for photonic neural network applications [119].

Optical frequency comb devices have attracted significant attention in photonics [122]. Comb technologies spanning the near-ultraviolet to the mid-infrared spectral range have been investigated in photonic-chip waveguides via supercontinuum generation and in microresonators via Kerr comb generation [122]. Thin-film lithium niobate-on-insulator has emerged as a photonic substrate that enables photonic circuits with dimensions compatible with silicon photonic devices [123] and allows fast electro-optical modulators and efficient nonlinear optical elements to be integrated on the same chip. Specifically, lithium niobate-based modulators can deliver very high modulation frequencies [124] and support data rates up to 210 Gb/s with low on-chip optical loss [125]. In this context, integrated photonic digital-to-analog converters capable of operating without optical–electrical–optical domain crossing have also been investigated [126]. Recently, Masnad et al. demonstrated a photonic digital-to-analog converter fabricated on a silicon-on-insulator platform incorporating microring resonator-based modulators, capable of a 4 GSample/s conversion rate at 2-bit resolution [127]. With this growth and consolidation of photonic technologies, photonic neural network architectures with low heat dissipation, sub-nanosecond latencies, and high parallelism, powered by photonic integrated circuits, are becoming a feasible reality [128,129].

5. Applications of Photonic Neural Networks for Equalizing Optical Communication Signals

Photonic neural networks are in an early stage of development, so there are currently few scientific works on their use in equalizing optical communication signals. In this section, we therefore discuss in more depth the investigations in the scientific literature that address signal equalization using integrated photonic neural network devices. We begin with a 2018 investigation by Argyris et al., who demonstrated a simplified photonic reservoir computing approach for classifying distorted optical signals after propagation over a 45 km optical fiber link [73]. In this work, the direct bit detection process was first recast as a pattern recognition problem. Using the experimental photonic reservoir computing device, the BER of a 2-level pulse-amplitude modulation (PAM2) signal at 25 Gb/s was improved by two orders of magnitude compared with directly classifying the transmitted signal, as depicted in Figure 8 [73].

In 2019, Katumba et al. presented the design and a numerical study of a 16-node photonic reservoir computing system operating in the optical domain [74]. The authors evaluated signals transmitted at different data rates over optical fiber links of different lengths. In particular, for an on–off keying (OOK) signal at 40 Gb/s after 15 km of fiber propagation, a BER of 2 × … was obtained after optical equalization with the proposed photonic reservoir computing system [74]. Moreover, in 2021, Sackesyn et al. presented experimental results in which nonlinear distortions in a 32 Gb/s OOK signal were mitigated below the forward error correction (FEC) threshold of 0.2 × … using photonic reservoir computing [75]. The results were also compared with those of a delay line filter, clearly showing that the developed photonic device performed nonlinear equalization, as shown in Figure 9 [75].

Using an optical perceptron, Mancinelli et al. [76] performed binary pattern recognition of signals at rates ranging from 5 to 16 Gb/s, achieving a BER as low as …; the perceptron performance after the training stage is illustrated in Figure 10. These experimental results show that the best performance was achieved for the 2-bit pattern. For the 3-bit patterns, the best performance was achieved at 10 and 16 Gb/s, the bit rates closest to the 20 Gb/s rate for which the photonic neural network was designed. Thus, the versatility of this neural network is limited by its structure: because its delay lines are fixed at fabrication, it is designed to process optical signals at a specific data rate.

More recently, in 2023, Zuo et al. used photonic reservoir computing to simultaneously improve the BER and the eye diagram of a 25 Gb/s OOK signal transmitted over 25 km of single-mode fiber. By employing the PSO training algorithm, the network weights converged quickly during training [79]. With this training approach, a BER of 9 × … was achieved, three orders of magnitude lower than that obtained before optical equalization [79].

In the same year, Staffoli et al. published results of a small optical perceptron composed of four waveguides to compensate for chromatic dispersion in fiber-optic links [80]. The photonic device was designed, fabricated, and tested experimentally with optical signals at 10 Gb/s after propagation over up to 125 km of optical fiber. During the learning phase, a separation loss function was optimized to push the received levels of 0s and 1s as far apart as possible, which in turn lowers the BER. Tests with this photonic neural network revealed that the excess loss introduced by the device (greater than 6 dB) was compensated by the equalization gain for transmission distances longer than 100 km [80]. Furthermore, the measured data were reproduced by a simulation model that jointly considered the optical transmission medium and the photonic device. This allowed the performance of the photonic neural network to be simulated at data rates higher than 10 Gb/s, where the device showed improvements relative to the state of the art [80]. One of the experimental results of this work is illustrated in Figure 11.
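The exact separation loss of [80] is not reproduced here. As an assumed stand-in, the sketch below uses the classic Q-factor, which measures the gap between the mean "1" and "0" levels in units of their combined standard deviation, together with the Gaussian-noise estimate BER ≈ ½ erfc(Q/√2), to show why widening the gap lowers the BER. The function names are hypothetical.

```python
import math
import numpy as np

def q_factor(samples, bits):
    """Separation of the '1' and '0' levels in units of their combined spread."""
    samples = np.asarray(samples, dtype=float)
    ones_mask = np.asarray(bits).astype(bool)
    ones, zeros = samples[ones_mask], samples[~ones_mask]
    return (ones.mean() - zeros.mean()) / (ones.std() + zeros.std())

def ber_from_q(q):
    """BER estimate for on-off keying with Gaussian noise on both levels."""
    return 0.5 * math.erfc(q / math.sqrt(2.0))

def separation_loss(samples, bits):
    """Hypothetical training loss: lower when the two levels are further apart."""
    return -q_factor(samples, bits)
```

For example, levels at 0 and 1 with a standard deviation of 0.1 each give Q = 5 and an estimated BER below 10⁻⁶. Minimizing such a loss never requires counting errors directly, which is convenient when only short traces are available during training.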

Seeking to extend the applicability of integrated photonic neural networks for equalizing optical communication signals, Staffoli et al. demonstrated a photonic neural network with eight waveguides for chromatic dispersion compensation of a four-level pulse-amplitude modulation (PAM4) signal propagating over 50 km of optical fiber [78]. A greater number of delay lines increases the processing capacity of the photonic neural network, but at the cost of a higher device insertion loss. To date, OOK, PAM2, and PAM4 signals have been equalized by photonic neural networks. To explore the optical correction of other modulation formats and thus broaden the applicability of photonic neural networks, Marciano et al. [81] experimentally demonstrated the recovery of an orthogonal frequency-division multiplexing (OFDM) signal propagating over 125 km of optical fiber using a photonic neural network. Ongoing studies have already shown that this approach can correct the distorted OFDM signal directly in the optical domain, with the photonic device acting as a pre-equalizer, as illustrated in Figure 12.
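One simple way to reason about a delay-line photonic perceptron of this kind is as a complex-valued finite impulse response (FIR) filter acting on the optical field, followed by square-law detection at a photodiode. The sketch below assumes that each waveguide contributes one delay step plus a trainable amplitude and phase; the actual delays, couplers, and losses of the devices in [78,80] are not modeled, and `photonic_fir_intensity` is a hypothetical helper. In this picture, the number of taps sets the memory span of the equalizer, which is one way to read the statement that more delay lines increase processing capacity.

```python
import numpy as np


def photonic_fir_intensity(field, weights):
    """Detected intensity after an N-waveguide delay-line combiner and a photodiode.

    field:   complex optical field samples, one per delay step
    weights: complex tap weights (amplitude * exp(1j * phase)), one per waveguide
    """
    field = np.asarray(field, dtype=complex)
    # Causal FIR on the field: combined[n] = sum_k weights[k] * field[n - k]
    combined = np.convolve(field, np.asarray(weights, dtype=complex))[: field.size]
    return np.abs(combined) ** 2  # square-law detection is the only nonlinearity


# Memory span: an impulse excites exactly as many output samples as there are taps.
impulse = np.zeros(16, dtype=complex)
impulse[0] = 1.0
w8 = 0.5 * np.exp(1j * np.linspace(0, np.pi, 8))
print(np.count_nonzero(photonic_fir_intensity(impulse, w8) > 1e-12))  # -> 8

# Phase matters: in-phase taps add constructively, anti-phase taps cancel.
print(photonic_fir_intensity(np.ones(4), [1, 1]))
print(photonic_fir_intensity(np.ones(4), [1, -1]))
```

Because interference happens in the field before detection, tap phases can undo phase distortions such as chromatic dispersion that an intensity-only equalizer cannot see directly.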

Furthermore, to demonstrate the robust performance of photonic neural networks for equalizing optical signals, Marciano et al. [81] evaluated the constellation diagrams of an OFDM signal after propagation over 125 km of standard single-mode fiber with and without the photonic neural network device. This analysis is presented in Figure 13. Specifically, Figure 13a shows the significant impact of chromatic dispersion on the transmitted signal when no equalization technique is applied. Even in this worst-case scenario, the distorted OFDM signal is adequately restored by the photonic neural network, yielding a well-defined constellation diagram, as illustrated in Figure 13b [81].

Also, Wang et al. demonstrated a multi-wavelength photonic recurrent neural network for channel distortion compensation in a 56 Gbaud WDM PAM4 communication system [77]. Simulations showed that this photonic recurrent neural network can outperform both digital maximum likelihood sequence estimation and a feedforward neural network equalizer in terms of optimum Q-factor and launch power [77].

Table 1 gathers the scientific investigations that have most thoroughly evaluated the performance of photonic neural networks for equalizing optical communication signals. It lists the characteristics of the equalized signals, the structures of the photonic neural networks and their respective training algorithms, and the BER values achieved before and after equalization. Table 1 suggests that perceptron photonic neural networks perform slightly better in terms of BER and equalize signals transmitted over longer distances than reservoir computing photonic neural networks, albeit at lower data rates.

The main difference between perceptron and reservoir photonic neural networks lies in their architecture, which directly affects their ability to handle specific signal equalization tasks. In a perceptron neural network, all weights are adjusted during the training phase. In photonic reservoir computing, by contrast, only the output-layer weights are adjusted, which can be done through simple linear regression, making training fast and simple. The perceptron architecture is therefore more controllable than reservoir computing architectures, with weights learned for the specific task. Because perceptron photonic neural networks are fully trainable, they allow more targeted optimization and can compensate signal distortions more effectively, which translates into better BER performance than reservoir photonic neural networks. This may partially explain why, according to the results in Table 1, the perceptron performs slightly better in terms of BER and equalizes signals transmitted over longer distances than reservoir computing. However, detailed investigations comparing the two approaches are still needed to settle this point.
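To make the training difference concrete, the sketch below uses a software echo state network as a stand-in for a photonic reservoir: the input and recurrent weights are random and stay fixed, and only the linear readout is fitted, in closed form, by ridge (regularized linear) regression. The channel model (OOK symbols with intersymbol interference in the field, followed by square-law detection and additive noise), the reservoir size, the spectral radius, and the regularization constant are illustrative assumptions, not parameters from the works in Table 1. A perceptron-style equalizer would instead update every weight, typically by iterative optimization.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical channel: OOK symbols with ISI in the field, then square-law detection.
# np.roll wraps at the start; those first samples are discarded as warm-up below.
n_sym = 4000
x = rng.integers(0, 2, n_sym).astype(float)
field = x + 0.5 * np.roll(x, 1) - 0.3 * np.roll(x, 2)
r = field**2 + rng.normal(0, 0.05, n_sym)

# Fixed random reservoir: these weights are never trained.
n_res = 100
W_in = rng.uniform(-0.5, 0.5, n_res)
W = rng.normal(0, 1, (n_res, n_res))
W *= 0.9 / np.max(np.abs(np.linalg.eigvals(W)))  # rescale to spectral radius 0.9

states = np.zeros((n_sym, n_res))
s = np.zeros(n_res)
for n in range(n_sym):
    s = np.tanh(W_in * r[n] + W @ s)
    states[n] = s

# Only the readout is trained, by closed-form ridge regression.
S = np.hstack([states, np.ones((n_sym, 1))])      # append a bias column
train, test = slice(100, 3000), slice(3000, n_sym)  # skip warm-up samples
lam = 1e-4
A = S[train].T @ S[train] + lam * np.eye(S.shape[1])
w_out = np.linalg.solve(A, S[train].T @ x[train])

y_hat = S[test] @ w_out
ber = np.mean((y_hat > 0.5) != (x[test] > 0.5))
print(f"test BER after reservoir equalization: {ber:.4f}")
```

The whole "training" step is one linear solve, which is why reservoir readouts train quickly; the trade-off, as discussed above, is that the fixed reservoir cannot be tailored to the channel.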

Moreover, although there is a substantial body of knowledge about equalization techniques in the scientific literature [130,131,132,133,134], investigations have shown that adaptive equalizers dominate receiver power consumption [135]. Therefore, one of the main advantages of photonic neural networks over classical neural networks for equalizing optical communication signals is their high energy efficiency [77].

Table 2 summarizes the advantages and disadvantages of photonic neural networks for optical communication signal equalization. In particular, some efforts have already been directed at overcoming the disadvantages of integrated photonic devices, such as high insertion loss and low flexibility. These include investigations of a photonic-integrated-circuit-based erbium-doped amplifier [136] and of low-loss photonic integrated circuits using lithium niobate [137,138] and silicon nitride [139,140]. Furthermore, reconfigurable photonic devices have been investigated as spatially resolving detectors of amplitude and phase information [141], for solving partial differential equations [142], and for optical pulse processing [143].

Table 3 summarizes the main challenges and their respective solutions for improving the performance of future photonic neural networks.

Comparing classical and photonic neural networks with definitive numbers is challenging because classical neural networks are far more mature, with decades of research and development behind them, whereas photonic neural networks are still at a relatively early stage, with ongoing research and diverse implementations. From this perspective, Table 4 gathers a qualitative comparison highlighting the general trends and potential advantages and disadvantages of classical and photonic neural networks. It is important to emphasize that classical neural networks (operating in the electronic domain) are currently dominant for general-purpose artificial intelligence tasks due to their high accuracy, mature training methodologies, and well-established hardware and software ecosystems. On the other hand, photonic neural networks hold great promise for specific applications where speed and energy efficiency are critical, such as high-bandwidth communication, signal processing, and certain types of pattern recognition. Hybrid electronic–photonic neural network devices that combine the strengths of both domains are another promising direction for future research. However, artificial intelligence is a rapidly evolving field, and future advances in materials, devices, and architectures could significantly shift the balance.

Fiber-optic communication impairments can be linear or nonlinear [144]. Linear impairments include chromatic dispersion, polarization mode dispersion, and symbol timing offset. Nonlinear impairments include laser phase noise, self-phase modulation, cross-phase modulation, four-wave mixing, and nonlinear phase noise. Cross-phase modulation and four-wave mixing do not arise in single-wavelength communication systems. Furthermore, the inelastic nonlinearities of stimulated Brillouin scattering and stimulated Raman scattering can occur above certain power levels but are generally avoided in optical communication links. Additive noise introduced by optical amplifiers, such as erbium-doped fiber amplifiers, must also be considered. Among these impairments, one of the most severe is chromatic dispersion, which occurs because different wavelengths (frequencies) of light travel at different speeds through an optical fiber. It causes pulse broadening, which leads to intersymbol interference [145]. Consequently, chromatic dispersion is the predominant effect addressed when recovering distorted signals with photonic neural networks, which correct phase shifts and delays in the time domain. Nonlinear compensation, on the other hand, requires more complex photonic neural networks because of the higher-order interactions involved in these impairments.
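Because chromatic dispersion is a linear, all-pass effect, it can be written as a quadratic spectral phase, H(ω) = exp(jβ₂Lω²/2), with β₂ = −Dλ²/(2πc). The sketch below assumes a standard single-mode fiber with D = 17 ps/(nm·km) at 1550 nm and an unchirped Gaussian pulse with T₀ = 20 ps over L = 25 km (values chosen for illustration only). It applies H(ω) with the FFT and checks the resulting broadening against the textbook Gaussian-pulse result T₁/T₀ = √(1 + (β₂L/T₀²)²). The sign of the exponent depends on the Fourier convention but does not change the broadening of an unchirped pulse.

```python
import numpy as np

C = 299_792_458.0  # speed of light in vacuum [m/s]


def beta2_from_D(D_ps_nm_km, wavelength_nm):
    """GVD parameter beta2 [ps^2/km] from the dispersion parameter D [ps/(nm km)]."""
    D = D_ps_nm_km * 1e-6                      # ps/(nm km) -> s/m^2
    lam = wavelength_nm * 1e-9                 # nm -> m
    beta2_si = -D * lam**2 / (2 * np.pi * C)   # s^2/m
    return beta2_si * 1e27                     # s^2/m -> ps^2/km


def disperse(field, dt_ps, beta2_ps2_km, length_km):
    """Apply the all-pass dispersion phase exp(j beta2 L w^2 / 2) in the frequency domain."""
    omega = 2 * np.pi * np.fft.fftfreq(field.size, d=dt_ps)  # angular frequency [rad/ps]
    H = np.exp(0.5j * beta2_ps2_km * length_km * omega**2)   # |H| = 1: no power loss
    return np.fft.ifft(np.fft.fft(field) * H)


def rms_width(t, power):
    p = power / power.sum()
    mean = np.sum(t * p)
    return np.sqrt(np.sum((t - mean) ** 2 * p))


dt = 0.5                                 # time step [ps]
t = (np.arange(4096) - 2048) * dt        # time grid [ps]
T0, L = 20.0, 25.0                       # pulse half-width [ps], fiber length [km]
pulse = np.exp(-t**2 / (2 * T0**2))      # unchirped Gaussian field envelope
beta2 = beta2_from_D(17.0, 1550.0)
out = disperse(pulse, dt, beta2, L)

ratio_numeric = rms_width(t, np.abs(out) ** 2) / rms_width(t, pulse**2)
ratio_theory = np.sqrt(1 + (beta2 * L / T0**2) ** 2)
print(f"beta2 = {beta2:.2f} ps^2/km")    # -> beta2 = -21.68 ps^2/km
print(f"broadening: numeric {ratio_numeric:.3f}, theory {ratio_theory:.3f}")  # both ~1.68
```

A pulse that spreads to about 1.7 times its original width starts to overlap its neighbors, which is the intersymbol interference that a delay-line equalizer with suitable tap phases aims to undo.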

Table 4.

Comparative analysis between classical (operating in the electronic domain) and photonic neural networks (operating in the optical domain).


| Feature | Photonic Neural Networks | Classical Neural Networks |
|---|---|---|
| Complexity | Can offer reduced complexity for certain operations (e.g., linear matrix multiplications) due to the inherent parallelism of optics. However, implementing complex nonlinearities can increase system complexity [66]. | Well-established design methodologies and tools for low-complexity architectures. However, increasing complexity leads to higher power consumption and latency [146]. |
| Power consumption | Potentially much lower for certain operations, especially linear computations. However, the power consumption of optical sources, modulators, and detectors must be taken into account [77]. | Can be high, especially for large and complex networks, due to electronic signal transmission and processing. Energy efficiency is a major concern [56]. |
| Speed | Inherently high signal propagation speed, with potential for ultra-fast processing in specific tasks [67]. | Limited by electronic signal propagation speed. Training and evaluating each node in large neural networks can be very time-consuming [30]. |
| Scalability | Scaling to large and complex networks is challenging due to fabrication tolerances, signal losses, and low-flexibility architectures [80]. | Well-established scaling techniques and manufacturing processes [35]. |
| Nonlinearity | Implementing efficient nonlinear activation functions in the optical domain is a major challenge [103]. | Mature techniques for implementing nonlinear activation functions [28]. |
| Training | Training optical neural networks can be more challenging due to backpropagation limitations and integrated device constraints [76]. | Mature training algorithms and software frameworks [25]. |