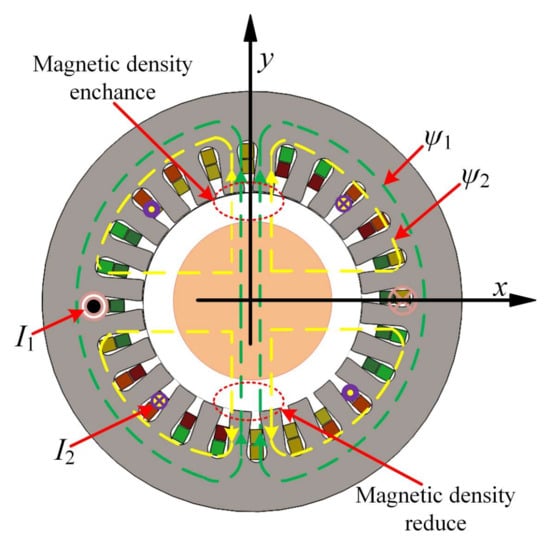

3.1. Reversibility Analysis of CCR-BIM

Based on the inverse system theory, the output variables, state variables, and input variables of the CCR-BIM system are defined as follows:

The output variables are defined as Z = , the state variables are defined as V = , the input variables are defined as U = , where x and y represent the suspension displacement parameters; 1 represents the torque winding parameter, 2 represents the suspension force winding parameter, s represents the stator parameter, r represents the rotor parameter, d represents the d-axis parameter, and q represents the q-axis parameter.

The state equation of the CCR-BIM can be expressed as:

Taking the partial derivatives of Equation (6) with respect to the input variables U gives the Jacobian matrix:

According to Equation (7), it can be deduced that the determinant of the Jacobian matrix A is not equal to zero, and the corresponding relative degree of the system is , which satisfies the condition . Therefore, the system meets the reversibility criteria for an inverse system.

The inverse system representation of the state equation for the CCR-BIM system is given by:

The system model in Equation (7) possesses reversibility, which fulfills the fundamental condition for achieving decoupling control of CCR-BIM. However, in practical control system applications, the complexity of the CCR-BIM state equation is too high for conventional analytical methods to accurately derive its regression model. To realize decoupling control using the inverse system of CCR-BIM, it is necessary to employ SVM to construct a more precise and effective inverse system model for CCR-BIM.

3.2. Establishment of SVM Model

In an n-dimensional space, the geometric margin between a point and a hyperplane is given by:

where i = 1, 2, 3, ⋯, n. Scaling ω and b by a common positive factor does not change the geometric margin, so we can set dmin = 1. The margin maximization problem is thereby transformed into an equivalent minimization problem, which is solved as follows [22,23,24]:
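For orientation, the standard hard-margin formulation is sketched below. The notation is an assumption and may differ from the authors' symbols: $v_i$ denotes a training sample, $y_i \in \{-1, +1\}$ its class label, and dmin = 1 is read as fixing the smallest functional margin to 1.

```latex
\begin{aligned}
d_i &= \frac{y_i\,(\omega^{T} v_i + b)}{\lVert \omega \rVert}, \qquad i = 1, 2, \ldots, n, \\
\min_{\omega,\, b}\ & \frac{1}{2}\lVert \omega \rVert^{2}
\quad \text{s.t.} \quad y_i\,(\omega^{T} v_i + b) \ge 1, \qquad i = 1, 2, \ldots, n.
\end{aligned}
```

Replacing $(\omega, b)$ by $(c\,\omega, c\,b)$ with $c > 0$ scales the numerator and denominator of $d_i$ equally, which is why the normalization is permitted; maximizing $1/\lVert \omega \rVert$ is then equivalent to minimizing $\lVert \omega \rVert^{2}/2$.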

The above process transforms the original problem into a convex quadratic programming problem under linear constraints. To solve this problem, a Lagrange function incorporating the n training samples is constructed:

To ensure that the Lagrange function satisfies the constraint conditions while achieving the maximum value, the multipliers are restricted to αi ≥ 0. The objective function expression at this point is:

Equation (11) has a saddle point that satisfies the stationarity, feasibility, and complementary slackness conditions of the Karush–Kuhn–Tucker (KKT) framework. Consequently, Equation (12) can be equivalently transformed into its dual problem, expressed as:

With the multipliers α fixed, the partial derivatives of Equation (11) with respect to ω and b are set to zero to find its minimum over ω and b. The mathematical expression is:

Substituting Equation (14) into the Lagrange function shown in Equation (11) yields:

The objective function in the original Equation (13) is transformed into:
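The chain from the Lagrange function to the dual objective can be sketched in standard textbook form. The symbols $v_i$ (sample) and $y_i \in \{-1, +1\}$ (class label) are assumptions and may differ from the authors' notation.

```latex
\begin{aligned}
L(\omega, b, \alpha) &= \frac{1}{2}\lVert \omega \rVert^{2}
  - \sum_{i=1}^{n} \alpha_i \left[ y_i\,(\omega^{T} v_i + b) - 1 \right], \qquad \alpha_i \ge 0, \\
\frac{\partial L}{\partial \omega} = 0 \ &\Rightarrow\ \omega = \sum_{i=1}^{n} \alpha_i y_i v_i,
\qquad
\frac{\partial L}{\partial b} = 0 \ \Rightarrow\ \sum_{i=1}^{n} \alpha_i y_i = 0, \\
\max_{\alpha}\ & \sum_{i=1}^{n} \alpha_i
  - \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i \alpha_j y_i y_j\, v_i^{T} v_j
\quad \text{s.t.} \quad \alpha_i \ge 0,\ \ \sum_{i=1}^{n} \alpha_i y_i = 0.
\end{aligned}
```

Substituting the two stationarity conditions into $L$ removes $\omega$ and $b$, so the dual depends on the samples only through the inner products $v_i^{T} v_j$.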

The Lagrange multipliers α are computed iteratively with the Sequential Minimal Optimization (SMO) algorithm, after which ω and b are calculated and the maximum margin hyperplane is determined. The classification function can be expressed as:
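A minimal sketch of how ω, b, and the sign-based classification function follow from the multipliers is given below. It does not implement SMO: it assumes a two-sample training set, for which the dual has the closed-form solution α1 = α2 = 2/‖v1 − v2‖². The function names and the label convention (+1/−1) are illustrative assumptions.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def hyperplane_from_multipliers(samples, labels, alphas):
    """Recover omega = sum_i alpha_i * y_i * v_i and b from given multipliers."""
    dim = len(samples[0])
    omega = [
        sum(a * y * v[k] for a, y, v in zip(alphas, labels, samples))
        for k in range(dim)
    ]
    # Any support vector (alpha_s > 0) satisfies y_s * (omega . v_s + b) = 1,
    # and since y_s is +1 or -1 this gives b = y_s - omega . v_s.
    s = next(i for i, a in enumerate(alphas) if a > 0)
    b = labels[s] - dot(omega, samples[s])
    return omega, b


def classify(v, omega, b):
    """Sign of the decision value omega . v + b."""
    return 1 if dot(omega, v) + b >= 0 else -1


# Two-sample toy problem (assumed data, for illustration only).
samples = [(1.0, 1.0), (-1.0, -1.0)]
labels = [1, -1]
gap_sq = sum((p - q) ** 2 for p, q in zip(*samples))
alphas = [2.0 / gap_sq, 2.0 / gap_sq]  # closed form valid for two samples only

omega, b = hyperplane_from_multipliers(samples, labels, alphas)
```

For larger training sets the multipliers would come from an iterative solver such as SMO; the recovery of ω and b from α is unchanged.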

Figure 8 illustrates the analysis process for nonlinear sample processing. Specifically, when outliers are present as shown in Figure 8a, the sample points become linearly inseparable. Measurement errors or noise in the data introduce outliers, which inevitably affect the margin of the maximum margin hyperplane. This situation can be effectively improved by introducing a loss function, whose expression is as follows:

To ensure that the maximum margin hyperplane meets the constraint conditions at any position, corresponding constraints need to be applied to the deviation. Here, the deviation is squared and then added cumulatively to the objective function. The adjusted optimization objective for the objective function in Equation (10) is:

where γ is the parameter controlling the weight.

Equation (19) calculates the sum of squares of deviations, and after corresponding adjustments, the Lagrange function is expressed as:

The corresponding objective function is represented as follows:

Equation (20) is the optimized Lagrange function, and it can be observed that the original problem has been transformed into solving a system of linear equations after processing.

Figure 8b illustrates a scenario where the two types of samples cannot be separated within the sample space, i.e., the sample points themselves are in a nonlinear state. The solution involves mapping the samples to a higher-dimensional space, as depicted in Figure 8c. The nonlinear sample classification process mainly consists of two stages. First, the samples are mapped to a higher-dimensional feature space, and then a linear learning machine is used for classification in this high-dimensional space.

The specific process is as follows. In a two-dimensional space, the coordinates can be represented as (v1, v2), and the equation of a curve can be written as:

Taking a five-dimensional space as an example, five new coordinates are defined as functions of v1 and v2, so that the curve equation in the two-dimensional space can be expressed as a linear equation in the new coordinates:
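As an illustration of this kind of lifting, the sketch below assumes one common choice of five coordinates, (v1, v1², v2, v2², v1·v2); this choice, the function names, and the unit-circle example are assumptions made for illustration. Under this map the circle v1² + v2² = 1, which no straight line can reproduce in the plane, becomes a hyperplane in the five-dimensional space.

```python
def lift(v1, v2):
    """Map a 2-D point to five coordinates: (v1, v1^2, v2, v2^2, v1*v2)."""
    return (v1, v1 * v1, v2, v2 * v2, v1 * v2)


# The circle v1^2 + v2^2 = 1 is the hyperplane Z2 + Z4 - 1 = 0 after lifting.
weights = (0.0, 1.0, 0.0, 1.0, 0.0)
offset = -1.0


def side(v1, v2):
    """Signed value of the lifted point against the hyperplane.

    Negative inside the unit circle, zero on it, positive outside.
    """
    z = lift(v1, v2)
    return sum(w * zi for w, zi in zip(weights, z)) + offset
```

Points inside and outside the circle fall on opposite sides of a single linear boundary once lifted, which is the property the linear learning machine relies on.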

Therefore, in the newly constructed coordinate system, by introducing a hyperplane equation, the sample points that were originally linearly inseparable in the low-dimensional space can be linearly separated in the high-dimensional space. The classification function at this time can be reformulated as:

When the number of input features for SVM is 2, the hyperplane appears as a straight line. When the number of input features increases to 3, the hyperplane becomes a two-dimensional plane. However, when the number of features exceeds 3, the mapped dimensions will increase significantly, leading to a dimensionality explosion. To avoid this, a kernel function k is introduced. Introducing the kernel function allows the samples to be implicitly mapped to a high-dimensional space without explicitly computing the coordinates of the mapped samples. This approach effectively avoids the dimensionality explosion problem while achieving computation results equivalent to those obtained through explicit high-dimensional mapping.
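The equivalence between a kernel evaluation and an explicit high-dimensional inner product can be checked on a small case. The sketch below uses the degree-2 polynomial kernel k(u, v) = (u·v)² only because its explicit feature map is short enough to write out; it is an illustrative choice and not necessarily the kernel adopted in this work.

```python
import math


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def phi(v):
    """Explicit feature map whose inner product equals (u . v)^2 in 2-D."""
    v1, v2 = v
    return (v1 * v1, math.sqrt(2.0) * v1 * v2, v2 * v2)


def poly_kernel(u, v):
    """Degree-2 homogeneous polynomial kernel, computed in the input space."""
    return dot(u, v) ** 2


u, v = (1.0, 2.0), (3.0, -1.0)
explicit = dot(phi(u), phi(v))   # inner product after explicit mapping
implicit = poly_kernel(u, v)     # same quantity without the mapping
```

The kernel needs one two-dimensional inner product and a square, while the explicit route builds three-dimensional vectors first; the gap widens quickly as the input dimension and polynomial degree grow.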

The specific expression of the kernel function k is as follows:

After introducing the kernel function, the classification function corresponding to Equation (24) can be rewritten as:

From the above analysis, it can be seen that SVM, equipped with a suitable kernel function, can fit nonlinear functions. The following function fitting result can be obtained:

The constraint condition in the above equation is:

where zi is the actual output corresponding to vi.

The optimization objective is transformed to:

Based on Equations (27) and (28), the Lagrange equation is constructed as:

According to the KKT conditions, setting the partial derivatives of the above equation to zero gives:

Solving Equation (31) yields:

where Ω is the diagonal matrix of the kernel function, with diagonal elements as ; , and In is the identity matrix.

By selecting a kernel function, the SVM regression equation is obtained as:

The fitting process of the SVM regression equation in Equation (33) heavily relies on the kernel function of the SVM. Therefore, the selection of the kernel width of the kernel function is crucial, as an appropriate kernel width can effectively improve the prediction accuracy of the model. Thus, the ISA-GA is employed to determine the optimal kernel width.
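To make the role of the kernel width concrete, the following sketch fits a regression model in the standard least-squares SVM form, where training reduces to one linear system in the bias b and the multipliers α: the first row enforces Σαi = 0 and row i enforces b + Σj k(vi, vj)αj + αi/γ = zi. Several points are assumptions: the Gaussian kernel k(u, v) = exp(−‖u − v‖²/(2σ²)) with width σ, the use of the full n × n kernel matrix, the toy sine data, and all function names.

```python
import math


def rbf_kernel(u, v, sigma):
    """Gaussian kernel with width sigma (assumed kernel form)."""
    sq = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.exp(-sq / (2.0 * sigma ** 2))


def solve_linear(A, rhs):
    """Solve A x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    M = [row[:] + [r] for row, r in zip(A, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def lssvm_fit(V, z, gamma, sigma):
    """Build and solve the (n+1) x (n+1) system for the unknowns [b, alpha]."""
    n = len(V)
    A = [[0.0] + [1.0] * n]
    for i in range(n):
        row = [1.0] + [rbf_kernel(V[i], V[j], sigma) for j in range(n)]
        row[i + 1] += 1.0 / gamma  # regularization term on the diagonal
        A.append(row)
    sol = solve_linear(A, [0.0] + list(z))
    return sol[0], sol[1:]


def lssvm_predict(v, V, alpha, b, sigma):
    """Regression output: sum_i alpha_i * k(v, v_i) + b."""
    return sum(a * rbf_kernel(v, vi, sigma) for a, vi in zip(alpha, V)) + b


# Toy data (assumed): samples of sin on [0, 6].
V = [(0.5 * i,) for i in range(13)]
z = [math.sin(v[0]) for v in V]
gamma, sigma = 100.0, 1.0
b, alpha = lssvm_fit(V, z, gamma, sigma)
```

Because σ enters every entry of the kernel matrix, a search procedure only needs to call `lssvm_fit` with candidate values of σ and score the resulting predictions on held-out data.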

3.3. Optimal Design of Kernel Function Based on ISA-GA

By combining the global search capability of genetic algorithms with the local search capability of simulated annealing, the simulated annealing-genetic algorithm (SA-GA) performs robustly on complex optimization problems. The SA-GA employs two fundamental operations, genetic operations and simulated annealing operations, where the genetic operations constitute its global search component. However, genetic algorithms are weak at local search and often converge prematurely to local optima. Traditional genetic algorithms typically use fixed crossover and mutation operators, and even after extensive experimental tuning it remains difficult to ensure that these parameters are optimal in every instance, which to some extent limits the efficiency and accuracy of the search for optimal solutions [25,26]. It is therefore necessary to improve the crossover and mutation operators of genetic algorithms to enhance their global search capability.

The parameter optimization process for the improved simulated annealing-genetic algorithm (ISA-GA) supporting the SVM is as follows:

- (1) Initialization of Population: Initialize the population by randomly generating individuals, each representing a potential solution.

- (2) Genetic Operations: Evolve the population using selection, adaptive crossover, and mutation operations to update the fitness of each individual.

- (3) Simulated Annealing Operations: Apply simulated annealing to each individual to conduct a local search for better solutions.

- (4) Stopping Condition Check: Check whether the preset stopping conditions for iteration have been met; if not, return to step (2).

- (5) Return Optimal Solution: Return the optimal individual as the final result.

The genetic operations are the core of a genetic algorithm and comprise selection, crossover, and mutation. The selection operation chooses the fitter individuals from the old population to form a new population. Of the many available selection methods, this design employs roulette wheel selection, with its specific expression given below:

where fi represents the fitness of individual i, and M denotes the population size.
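Roulette wheel selection is conventionally implemented by giving individual i the probability fi divided by the sum of all M fitness values. The sketch below follows that convention and assumes non-negative fitness values with a positive sum; the function names are illustrative.

```python
import random


def selection_probabilities(fitness):
    """Probability of each individual: f_i divided by the sum of all f_j."""
    total = sum(fitness)
    return [f / total for f in fitness]


def roulette_select(population, fitness, rng):
    """Draw one individual with probability proportional to its fitness."""
    threshold = rng.random() * sum(fitness)
    cumulative = 0.0
    for individual, f in zip(population, fitness):
        cumulative += f
        if threshold < cumulative:
            return individual
    return population[-1]  # guards against floating-point round-off


rng = random.Random(0)  # seeded for reproducibility
new_population = [roulette_select(["a", "b"], [1.0, 3.0], rng) for _ in range(4000)]
```

Individuals with zero fitness are never drawn, and fitter individuals may be drawn several times, which is how selection pressure enters the new population.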

This article introduces an adaptive crossover and mutation strategy that dynamically adjusts the operator values at different stages of population evolution to suit the needs of each generation. A large difference between the current population’s average fitness and its optimal fitness indicates a high degree of dispersion and rich diversity within the population. In that case, the crossover operator’s value should be increased so that crossover is carried out more thoroughly and more competitive individuals are generated. Meanwhile, to protect high-quality individuals, the mutation operator’s value should be moderately decreased to reduce interference with advantageous genes, which accelerates the overall convergence of the algorithm. Conversely, when the difference is small, the crossover operator’s value should be reduced accordingly. The expressions for the adaptive crossover operator and adaptive mutation operator are constructed as follows.

The expression for the adaptive crossover operator with an adaptive coefficient kc is set as:

where the average fitness value and the maximum fitness value of the current population enter through their difference, which is used to measure the current population dispersion.

The expression for the adaptive mutation operator, which has its own adaptive coefficient, is given by:
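The qualitative rule described above (a wide gap between maximum and average fitness raises the crossover value and lowers the mutation value) can be sketched as follows. The linear form, the bounds, the normalization by the maximum fitness, and the names `k_c`, `k_m`, and `adaptive_rates` are all hypothetical choices made for illustration; they are not the authors' expressions.

```python
def adaptive_rates(f_max, f_avg, k_c=1.0, k_m=1.0,
                   pc_bounds=(0.5, 0.9), pm_bounds=(0.01, 0.1)):
    """Hypothetical adaptive crossover and mutation values.

    spread = (f_max - f_avg) / f_max measures population dispersion.
    Crossover grows with spread; mutation shrinks with spread.
    """
    if f_max <= 0 or f_avg > f_max:
        raise ValueError("expects f_max > 0 and f_avg <= f_max")
    spread = (f_max - f_avg) / f_max
    pc_lo, pc_hi = pc_bounds
    pm_lo, pm_hi = pm_bounds
    p_c = pc_lo + (pc_hi - pc_lo) * min(1.0, k_c * spread)
    p_m = pm_hi - (pm_hi - pm_lo) * min(1.0, k_m * spread)
    return p_c, p_m


diverse = adaptive_rates(f_max=10.0, f_avg=2.0)    # dispersed population
converged = adaptive_rates(f_max=10.0, f_avg=9.0)  # nearly uniform population
```

Any monotone mapping with the same direction of change would serve the same purpose; the clamp to fixed bounds simply keeps both values in a usable range.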

Prior to performing the simulated annealing operation, the genetic algorithm has already obtained new individuals through a series of selection, crossover, mutation, and other operations. Subsequently, the simulated annealing algorithm compares the fitness value of the new individual obtained by the genetic algorithm with the fitness value of the old individual to determine whether to accept an inferior solution. The specific calculation formula is shown below:

where the fitness value of the new individual is compared with fit(x), the fitness value of the old individual, and T is the annealing temperature.
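The acceptance step of simulated annealing is commonly implemented with the Metropolis criterion. The sketch below assumes that fitness is maximized, so a new individual with higher fitness is always accepted and an inferior one is accepted with probability exp((fit_new − fit_old)/T); the direction of the comparison and the function names are assumptions.

```python
import math
import random


def acceptance_probability(fit_new, fit_old, temperature):
    """Metropolis rule for a maximized fitness (assumed convention)."""
    if fit_new >= fit_old:
        return 1.0
    return math.exp((fit_new - fit_old) / temperature)


def accept(fit_new, fit_old, temperature, rng):
    """Randomly decide whether the new individual replaces the old one."""
    return rng.random() < acceptance_probability(fit_new, fit_old, temperature)


rng = random.Random(0)  # seeded for reproducibility
hot = acceptance_probability(1.0, 2.0, temperature=1.0)
cold = acceptance_probability(1.0, 2.0, temperature=0.1)
```

At high temperature inferior solutions are accepted fairly often, which helps the search escape local optima; as T decreases the rule approaches a purely greedy comparison.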

The flowchart of the ISA-GA is shown in Figure 9.