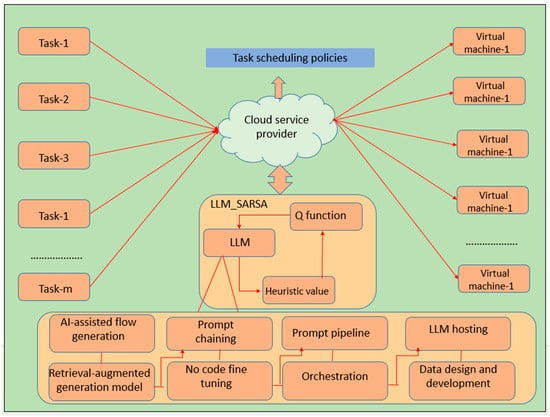

2: Input: the set of tasks

3: Output: task scheduling policies

4: Initialize

5: Initialize LLM heuristic Q buffer

6: For each episode, perform

7: Training phase of LLM_SARSA

8: For every task in the training task set, perform

9: Initialize state S and action A

10: Choose action A from state S using the policy derived from Q

11: For each step of the episode, perform

12: Take action A, observe reward R, and move to the next state S′

13: Choose action A′ from state S′ using the policy derived from Q

14:

15: Update S ← S′, A ← A′

16: Compute the LLM heuristic value

17: Update Q with the LLM heuristic value

18:

19: Employ the L2 loss to approximate the value

20: L2(…)

21:

22: End For (each step of the episode, until S is terminal)

23: End For

24: Testing phase of LLM_SARSA

25: For every task in the testing task set, perform

26: Initialize state S and action A

27: Choose action A from state S using the policy derived from Q

28: For each step of the episode, perform

29: Execute action A from state S using the updated heuristic value and L2 loss value

30:

31: End For (each step of the episode, until S is terminal)

32: End For

33: End For

34: Output the task scheduling policies

35: Stop


Bhargavi Krishnamurthy www.mdpi.com
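The training phase updates Q and then employs an L2 loss. For reference, the textbook SARSA update can be written as one gradient step on a squared (L2) error. The heuristic term $\beta\,H(S,A)$ and its weight $\beta$ are assumptions for illustration, not the paper's formulation. The target $y$ is treated as a constant (a semi-gradient step), and $Q(S',A') = 0$ when $S'$ is terminal.

$$
\begin{aligned}
y &= R + \beta\,H(S,A) + \gamma\,Q(S',A'), \\
\mathcal{L}_2 &= \tfrac{1}{2}\bigl(y - Q(S,A)\bigr)^2, \\
\frac{\partial \mathcal{L}_2}{\partial Q(S,A)} &= -\bigl(y - Q(S,A)\bigr), \\
Q(S,A) &\leftarrow Q(S,A) - \alpha\,\frac{\partial \mathcal{L}_2}{\partial Q(S,A)} = Q(S,A) + \alpha\bigl(y - Q(S,A)\bigr).
\end{aligned}
$$

With $\beta = 0$ this reduces exactly to the standard SARSA rule $Q(S,A) \leftarrow Q(S,A) + \alpha\,[R + \gamma Q(S',A') - Q(S,A)]$.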
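A minimal, runnable sketch of the training and testing phases follows. It is not the author's implementation. The following are all hypothetical:

- the two-machine scheduling problem;
- the `llm_heuristic` stand-in (a fixed rule; no language model is called);
- the additive weight `beta`;
- every helper name.

The sketch uses tabular SARSA with ε-greedy action selection during training. It takes one gradient step on the L2 loss per transition and acts greedily on the learned Q during testing.

```python
import random
from collections import defaultdict

# Hypothetical toy problem: assign tasks, in order, to one of two machines.
# State = (index of the next task, sign of load0 - load1); action = machine id.
ACTIONS = (0, 1)


def load_sign(loads):
    """Return -1, 0 or 1 as machine 0 is less, equally or more loaded than machine 1."""
    diff = loads[0] - loads[1]
    return (diff > 0) - (diff < 0)


def step(tasks, i, loads, action):
    """Assign tasks[i] to machine `action`; reward = negative increase in makespan."""
    new_loads = list(loads)
    new_loads[action] += tasks[i]
    return new_loads, -(max(new_loads) - max(loads))


def llm_heuristic(state, action):
    """Placeholder for the LLM heuristic value: no language model is called.

    Returns 1.0 if the action picks the less-loaded machine (any machine on a tie).
    """
    _, sign = state
    less_loaded = 1 if sign > 0 else 0
    return 1.0 if sign == 0 or action == less_loaded else 0.0


def epsilon_greedy(q, state, epsilon, rng):
    """Choose an action from the policy derived from Q (epsilon-greedy)."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: q[(state, a)])


def l2_update(q_value, target, alpha):
    """One gradient step on L2 = 0.5 * (target - Q)^2; returns (new Q, loss)."""
    error = target - q_value
    return q_value + alpha * error, 0.5 * error ** 2


def train(tasks, episodes=200, alpha=0.1, gamma=1.0, epsilon=0.1, beta=0.5, seed=0):
    """Training phase: tabular SARSA with a heuristic-shaped target (assumption)."""
    rng = random.Random(seed)
    q = defaultdict(float)  # stands in for the "LLM heuristic Q buffer"
    episode_losses = []
    for _ in range(episodes):
        loads = [0, 0]
        state = (0, load_sign(loads))
        action = epsilon_greedy(q, state, epsilon, rng)
        total_loss = 0.0
        for i in range(len(tasks)):
            loads, reward = step(tasks, i, loads, action)
            next_state = (i + 1, load_sign(loads))
            terminal = i + 1 == len(tasks)
            # Assumption: the heuristic enters the target additively, weighted by beta.
            target = reward + beta * llm_heuristic(state, action)
            next_action = None
            if not terminal:
                # On-policy: A' comes from the same epsilon-greedy policy and is taken next.
                next_action = epsilon_greedy(q, next_state, epsilon, rng)
                target += gamma * q[(next_state, next_action)]
            q[(state, action)], loss = l2_update(q[(state, action)], target, alpha)
            total_loss += loss
            state, action = next_state, next_action
        episode_losses.append(total_loss / len(tasks))
    return q, episode_losses


def evaluate(q, tasks):
    """Testing phase: act greedily on the learned Q, no updates; return the makespan."""
    loads = [0, 0]
    for i in range(len(tasks)):
        state = (i, load_sign(loads))
        action = max(ACTIONS, key=lambda a: q[(state, a)])
        loads, _ = step(tasks, i, loads, action)
    return max(loads)
```

For example, run `q, losses = train([5, 4, 3, 3, 2, 1])` followed by `evaluate(q, [5, 4, 3, 3, 2, 1])`. This returns the makespan of the greedy schedule. For these tasks the makespan can never be below 9, which is half the total load.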