1. Introduction

The advancement of society has meant that traditional manual management in agriculture is unable to meet demand. This makes the incorporation of modern and technological agricultural management essential for the sustainability and competitiveness of the sector. However, the high cost and complexity of adapting many of these innovations hinder their adoption. Furthermore, the shortage of professionals specialized in agricultural innovation and technology limits the quality of management and outcomes, highlighting the need for greater participation of engineering to meet the demands of the flower-growing sector.

The main players in the global flower export market had the following performance as of 2023: India, with 80.16% of the market (USD 12.4 billion); Colombia, with 6.78% (USD 1.1 billion); and Vietnam, with 5.50% (USD 854 million). Colombia’s flower portfolio covers a total cultivated area of 77 km². The most cultivated flowers are roses, covering 26 km² (33.8% of the total area), and carnations, covering 9 km² (11.7%), which makes floriculture a significant sector in the country’s trade balance.

The incorporation of technologies in the agro-industrial sector has not been systematic because it is a heterogeneous area. This industry may require technological, process, logistical, or execution solutions that can vary depending on regional conditions and available resources [1]. Therefore, it is necessary to seek specific solutions to problems [2].

An additional problematic factor is that most of the available knowledge about each process comes from information networks that compare and complement traditional practices and the experiences of each worker. Moreover, in the case of Colombia, 85% of this formal knowledge comes from the personal expertise of workers [1]. Improvement opportunities, according to these information networks, do not consider the multiple technological solutions available in each sector because they lack systematic processes that encourage the inclusion of these new technologies.

An integrated perspective on technology and innovation highlights that innovations can vary in scale, from being new to a firm, to new in the market, or even new to the world. Innovation does not have to be global or transformative—small, localized changes are also recognized as innovations. This view rejects the notion that only high-tech or radical innovations matter, recognizing that innovation can arise in any sector. It also moves away from categorizing industries as “innovative” or “non-innovative”, emphasizing that innovation is a continuous process. This perspective fits well with agriculture, where technological advancements emerge from both public and private efforts, and the industry itself is diverse, driven by unique technological and competitive dynamics.

At this point, it is worth noting that the incorporation of a technological contribution does not necessarily mean an immediate improvement in the process. In fact, manual work or a micro-innovation may be more efficient than a technological contribution due to the experience of the workers or the nature of the task. The truth is that many tasks (planting, harvesting, cutting, pest eradication, and classification) depend on the experience of the worker.

Due to the wide variety of flowers in the sector, technological contributions must be adapted to the specific type of flower, as each has unique characteristics and specific cultivation requirements. Moreover, the agro-industrial sector, especially floriculture, faces high occupational risks due to intensive manual labor, exposure to harmful chemicals, and the lack of regulation in the use of machinery. This leads to frequent muscular disorders, especially in the hands, wrists, and lower back. However, solutions for these problems have not been sufficiently studied, particularly in Colombia.

The design of all flower industry machinery needs to be optimized to achieve the lowest costs in the short and long term [3]. Over the years, various optimization methods have been developed, including programmed methods, heuristic algorithms, and metaheuristic algorithms aimed at optimizing the design process. Metaheuristic algorithms show the best performance, as they offer global solutions for design and avoid becoming trapped in local solutions, as is the case with heuristic algorithms [4].

Metaheuristic methods are designed to find the optimal solution to an optimization problem. They simulate behavioral patterns to define constraints and input values for each problem. In the design of mechanisms and machinery, they avoid falling into local solutions through phases of exploration and exploitation. Exploration means expanding the search space to new local areas, while exploitation involves finding the best local solution. The goal is to compare possible solutions and determine the optimal one [5]. These algorithms have three fundamental differences from conventional algorithms: they define a population in the search space, use specific fitness information, and employ probabilistic rules [6]. They can be used to solve non-differentiable or discontinuous optimization problems [7,8]. Due to their advantages, they can be applied in many disciplines within the field of general engineering [9,10], earth sciences [11,12], and, in this case, the flower-growing sector [13,14].

Recent research proposes modifying these methods, suggesting alternatives that improve their performance and replicability [15,16]. For example, the inclusion of tools and methodologies that diversify the possible solutions (target population) has been studied to avoid the premature convergence of the solution, aiming to escape from local optima [17].

The Salp Swarm Algorithm (SSA) is efficient in structural optimizations and has low complexity, but it struggles with cost, weight, and volume optimization, taking a long time to find the best solution [18]. The Multiverse Optimizer (MVO) also faces challenges in geometry, weight, and volume optimization; in addition, it is slow to converge and has high complexity [19]. The Moth-Flame Optimization Algorithm (MFO) offers fast convergence and low complexity, excelling in cost and weight optimization, but performs poorly in volume optimization [20]. Atom Search Optimization (ASO) has high computational complexity, leading to longer development and execution times [21]. Ultrasound-based optimization (EBO) performs well in optimizing weight, geometry, volume, and critical paths but is less effective in cost optimization and is both complex and time-consuming [22]. The Queue Search Algorithm (QSA) excels in optimizing weight, geometry, cost, and volume, delivering quick results with low complexity [23]. The Equilibrium Optimizer (EO) performs well across weight, geometry, cost, and volume problems in short times, though it is highly complex [24].

Florval, a company located in Cundinamarca, Colombia, is grappling with urgent challenges related to the obsolescence of its mini carnation sorting machines. These machines incur high operating and maintenance costs and frequently fail, which significantly hampers productivity. In 2023, Florval processed over 67 million carnation stems annually using these machines. The high-impact movements of the machine’s mechanism resulted in 6% of the stems being damaged, downgrading their quality and disqualifying them from the high-quality export classification. Additionally, the accumulation of broken stems necessitated hourly stops to clear waste buildup, risking total blockages and damage to mechanical components.

The primary objective of this study is to optimize the design of machinery used in the carnation industry, aiming to reduce mechanical damage to the stems, extend the equipment’s lifespan, and minimize maintenance interruptions. Ultimately, this optimization seeks to increase the profitability of the agro-industry. To achieve this goal, three metaheuristic optimization methods are compared: Ant Colony Algorithm, Genetic Algorithm, and Sine Cosine Algorithm. These methods are employed to identify an optimal design configuration that minimizes jerk during the machine’s operation. Additionally, the solutions obtained from these metaheuristic approaches are compared to those derived from an exhaustive search conducted over a reduced design space. This research aims to provide a robust solution that enhances machine performance while analyzing which algorithm demonstrates the best efficiency during the optimization process.

2. Materials and Methods

2.1. Mini Carnation Sorting Machine

Figure 1 displays the machine used for tying and sorting carnations at Florval S.A.S, located in Nemocón, a municipality in the northern part of the Bogotá savanna in Colombia.

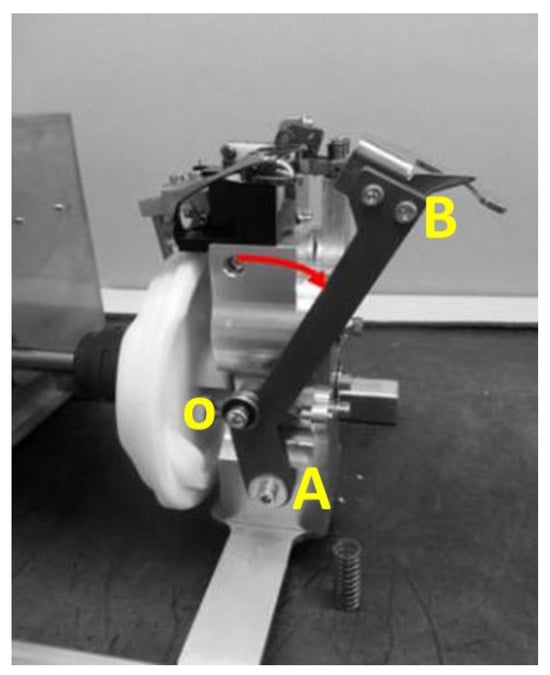

Figure 2 illustrates a simplified representation of the mechanism to be optimized. These figures identify point O, the reference point of rotation; point A, the contact point with the cam of the mechanism; and point B, the tying point. The movement of point B facilitates the tying of carnation stems, and optimizing this movement is essential to reduce mechanical damage to the flowers.

An objective function was formulated specifically to minimize the jerk value for the tying point of the mechanism (point B). The jerk is the third derivative of position with respect to time. To obtain it, the position equations for point B were developed, considering its planar movement relative to the fixed reference point of rotation (point O). The position equations in the X and Y directions model the motion of the mechanism as a function of time and are presented in Equations (1) and (2). These equations were derived from the kinematic analysis of the mechanism, and they reflect the motion of the components involved in the optimization process.

The three design parameters are the variables on which the optimization function depends. They represent physical measurements within the mechanism: the first is the distance between points O and B, the second is the distance between points O and A, and the third is the horizontal distance between the cam’s stabilization section and point O. These variables are constrained to specific intervals due to the spatial limitations of the machine. The three intervals, which also define the search space in Section 2.2, are 130 mm to 146 mm, 315 mm to 380 mm, and 25 mm to 45 mm.

Differentiating Equations (1) and (2) three times with respect to time yields the jerk functions. Because of their length, the resulting expressions are not reproduced here; they are available in the simulation code upon request. Their behavior is instead illustrated with arbitrary values of the three design parameters: the magnitude of the jerk vector is computed for a full turn of the cam and plotted in Figure 3. The curve shows that the mechanism has two points of maximum jerk per turn, and these maxima are the values minimized by the optimization in this document. This computation is exemplified in Equations (3)–(5).
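The evaluation of the maximum jerk over one cam turn can be illustrated with a minimal numerical sketch. It does not use Equations (1) and (2): the trajectory below is a hypothetical circular motion chosen only because its jerk magnitude is known analytically, and the names `third_derivative`, `jerk_magnitude`, and `max_jerk_over_turn` are assumptions introduced for this example. The third derivative is approximated with a central finite difference rather than with the analytical expressions used in the study.

```python
import math


def third_derivative(f, t, h=1e-3):
    """Central finite-difference estimate of the third derivative of f at t."""
    return (f(t + 2 * h) - 2 * f(t + h) + 2 * f(t - h) - f(t - 2 * h)) / (2 * h**3)


def jerk_magnitude(x, y, t, h=1e-3):
    """Magnitude of the planar jerk vector (x'''(t), y'''(t))."""
    return math.hypot(third_derivative(x, t, h), third_derivative(y, t, h))


def max_jerk_over_turn(x, y, period, samples=360):
    """Largest jerk magnitude sampled over one full period (one cam turn)."""
    return max(jerk_magnitude(x, y, k * period / samples) for k in range(samples))


# Hypothetical stand-in for the motion of point B: a circle of radius
# RADIUS (mm) travelled at angular speed OMEGA (rad/s). These values are
# illustrative only and are not taken from the mechanism.
RADIUS, OMEGA = 100.0, 2.0


def x_b(t):
    return RADIUS * math.cos(OMEGA * t)


def y_b(t):
    return RADIUS * math.sin(OMEGA * t)


peak_jerk = max_jerk_over_turn(x_b, y_b, period=2 * math.pi / OMEGA)
```

For uniform circular motion the jerk magnitude is constant and equal to the radius times the cube of the angular speed, which gives a simple check of the numerical scheme before it is applied to a real position function.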

2.2. Brute Force Method

The brute force method for optimization is a straightforward approach that involves evaluating every possible solution within a defined search space to identify the best outcome. This method systematically explores all configurations, calculating the objective function for each one and ensuring that no potential solution is overlooked. While the brute force method guarantees finding the optimal solution, it can be computationally expensive and time-consuming, especially for complex problems with large search spaces. As a result, it is often impractical for real-world applications where efficiency is crucial. Despite its limitations, the brute force method serves as a valuable baseline, providing a clear benchmark against which the performance of more sophisticated optimization techniques can be compared.

The search space for the current optimization consisted of a millimeter-by-millimeter combination of the values of the variables within the specified intervals. For variable L, the range was from 130 mm to 146 mm, providing 17 discrete values. The second variable ranged from 315 mm to 380 mm, resulting in 66 possible values. Lastly, the third variable was defined within the interval of 25 mm to 45 mm, offering 21 values. In total, these parameters created 17 × 66 × 21 = 23,562 combinations to be evaluated.
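The enumeration of this search space can be sketched as follows. The grid limits come from the intervals stated above; the objective `surrogate_max_jerk` is a hypothetical quadratic stand-in for the maximum jerk (the real function is derived from Equations (1) and (2) and is not reproduced here), and the argument names `second` and `third` are placeholders for the two remaining design variables.

```python
from itertools import product

# Millimeter-by-millimeter grids for the three design variables.
L_VALUES = range(130, 147)        # 17 values
SECOND_VALUES = range(315, 381)   # 66 values
THIRD_VALUES = range(25, 46)      # 21 values


def surrogate_max_jerk(l, second, third):
    """Hypothetical objective used only to make the sketch runnable."""
    return (l - 138) ** 2 + (second - 350) ** 2 + (third - 30) ** 2


def brute_force(objective):
    """Evaluate every grid point and return the best one."""
    grid = list(product(L_VALUES, SECOND_VALUES, THIRD_VALUES))
    best = min(grid, key=lambda point: objective(*point))
    return best, objective(*best), len(grid)


best_point, best_value, n_evaluated = brute_force(surrogate_max_jerk)
```

Because every combination is visited, the returned point is the exact grid minimum of whatever objective is supplied, which is what makes the method a suitable baseline.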

2.3. Sine Cosine Algorithm

The Sine Cosine Algorithm (SCA) is a metaheuristic search methodology based on a random sinusoidal scale, from the optimal point found up to that iteration. This search methodology allows for a balance between exploration and exploitation. The algorithm demonstrates great potential for global optimization and shows promising results when modified or hybridized with other algorithms, particularly in solving design problems [25,26,27]. Its simplicity allows for a wide range of applications and is governed by Equations (6)–(9) [28].

The quantities involved in these equations are the attenuation parameter; the random numbers (including r3); the search intensity parameter; the number of iterations; the current iteration; the variables to optimize; and the values of the optimal variables found according to the optimization criterion.

The search intensity parameter and the number of iterations are critical in implementing the method. The search intensity parameter defines the amplitude of the sine function and governs how the search is conducted around the optimal point. It is the maximum value that the attenuation parameter can reach. When it is larger, the search explores a wider region of the optimization space, enhancing exploration. However, this may reduce exploitation, as the optimization steps become larger. To balance exploration and exploitation, it is necessary to correlate the search intensity parameter with the number of iterations.
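The role of these two parameters can be illustrated with a minimal sketch of the standard SCA update, in which the attenuation parameter decays linearly from the search intensity parameter to zero. The symbol names (`a`, `r1`, `r2`, `r3`), the population size of 10 agents, the clamping to the bounds, and the quadratic objective `surrogate_max_jerk` are assumptions made for this example; they are not taken from the study's implementation.

```python
import math
import random


def sca_minimize(objective, bounds, a=0.1, max_iter=1000, n_agents=10, seed=0):
    """Minimal Sine Cosine Algorithm sketch for box-constrained minimization."""
    rng = random.Random(seed)
    agents = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_agents)]
    best = list(min(agents, key=objective))
    best_val = objective(best)
    history = []
    for t in range(max_iter):
        r1 = a - t * a / max_iter  # attenuation parameter, decays from a to 0
        for agent in agents:
            for j, (lo, hi) in enumerate(bounds):
                r2 = rng.uniform(0.0, 2.0 * math.pi)
                r3 = rng.uniform(0.0, 2.0)
                # Switch between the sine and cosine branches with equal probability.
                wave = math.sin(r2) if rng.random() < 0.5 else math.cos(r2)
                step = r1 * wave * abs(r3 * best[j] - agent[j])
                # Keep the candidate inside the allowed interval.
                agent[j] = min(max(agent[j] + step, lo), hi)
            value = objective(agent)
            if value < best_val:
                best, best_val = list(agent), value
        history.append(best_val)
    return best, best_val, history


def surrogate_max_jerk(p):
    """Hypothetical objective used only to make the sketch runnable."""
    return (p[0] - 138.0) ** 2 + (p[1] - 350.0) ** 2 + (p[2] - 30.0) ** 2


BOUNDS = [(130.0, 146.0), (315.0, 380.0), (25.0, 45.0)]
sca_best, sca_value, sca_history = sca_minimize(surrogate_max_jerk, BOUNDS)
```

In this form, a larger `a` produces larger steps in the early iterations, while the linear decay of `r1` shrinks the steps as the iteration count approaches `max_iter`, which is the coupling between the two parameters described above.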

In Figure 4, the behavior of the maximum jerk in relation to the search intensity parameter is shown for a constant number of iterations. The chosen interval for the parameter is [0.01, 0.1], with 100 values evaluated within this range. The number of iterations is set to 1000. The computations were repeated 100 times to calculate the mean and standard deviation for each of the 100 parameter values. The results indicate that as the parameter increases, the optimization tends to stabilize at a constant value. Conversely, when the parameter is too small, the exploration becomes less effective, leading to significant variability in the optimal value, as reflected by a higher standard deviation. This suggests that, for this number of iterations, a parameter value greater than approximately 0.05 tends to achieve a lower maximum jerk value more consistently.

In Figure 5, the behavior of the maximum jerk is depicted as a function of the number of iterations for a constant search intensity parameter. The chosen interval for the number of iterations is [10, 1000], with 100 iteration values sampled within this range. The search intensity parameter is set to 0.1 for this computation. The process was repeated 100 times to calculate the mean and standard deviation for each of the 100 iteration values.

This figure shows that increasing the number of iterations leads to better solutions. For this value of the search intensity parameter, the jerk values tend to stabilize around 500 iterations, with a noticeably lower standard deviation. This indicates that beyond a certain threshold, additional iterations yield diminishing returns in terms of optimization improvement but contribute to consistency.

The parameter selection for the Sine Cosine Algorithm (SCA) in the jerk optimization process was based on the results shown in Figure 4 and Figure 5. The values of the search intensity parameter and of the number of iterations used in the optimization were chosen from these results.

2.4. Particle Swarm Algorithm

Particle Swarm Optimization (PSO), introduced by Eberhart and Kennedy, is inspired by human social behavior and natural swarming phenomena, such as fish schools or bird flocks [29]. In PSO, each solution is represented by a particle, with its position reflecting a potential solution, while the ‘food’ is the objective function being optimized. Throughout the iterations, particles adjust their positions by moving toward both the best local and global solutions, eventually converging on the optimal objective value. The main steps in PSO include initializing parameters, evaluating the objective function, and updating the particles’ velocity and position.

In recent advancements in trajectory planning for high-speed parallel manipulators, Chen et al. [30] presented an innovative technique that optimizes execution time, energy consumption, and jerk, utilizing a quantum-behaved particle swarm optimization (QPSO) algorithm and quintic B-spline interpolation to enhance efficiency, reduce vibration, and minimize energy use. Xiao et al. [31] proposed a jerk-limited heuristic feed rate scheduling method for five degrees-of-freedom (5-DOF) hybrid robots, optimizing machining time and stability using particle swarm optimization (PSO). Their approach addresses the complexity of feed rate scheduling for high-precision machining, improving both computation speed and overall efficiency.

In the realm of product design, Fu et al. [32] proposed an innovative method that integrates Kansei Engineering with Game Theory and Particle Swarm Optimization–Support Vector Regression (PSO-SVR) to enhance the objectivity and precision of designing Ming-style furniture, addressing key emotional requirements from users.

In this document, a swarm-based methodology related to Particle Swarm Optimization (PSO) is applied, specifically the Ant Colony Optimization (ACO) algorithm. The classical ACO requires the definition of several key parameters for effective operation; the values used here are described in the remainder of this section.

In the Ant Colony Optimization approach for solving a continuous function, the problem is addressed by discretizing the variables into intervals. For the current problem, which involves minimizing the jerk, three variables need to be optimized. The different paths represent the combinatorial space formed by the discretization of the continuous intervals, and the reference points along these paths correspond to the potential values of each variable.

To define these paths, it is essential to establish the optimization order for the variables. In this case, as illustrated in Figure 6, the three variables are optimized sequentially, one after another, in a fixed order. Each white point in this figure represents a possible value for one of the variables. The path chosen by an ant is a combination of one white point from each variable circle. The ACO implementation used 10 ants to explore the solution space.

The evaporation coefficient, which determines how quickly undesirable paths are removed from the ants’ preferences, was set to 0.05 in this solution. Additionally, a stopping criterion of five iterations without improvement in the optimal value was employed.
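The scheme described in this section can be sketched as follows, using the stated settings (10 ants, an evaporation coefficient of 0.05, and a stop after five iterations without improvement). The pheromone deposit rule (a unit deposit on the best path of each iteration), the initial pheromone level, and the quadratic objective `surrogate_max_jerk` are assumptions made for illustration, since the source does not specify them.

```python
import random


def aco_minimize(objective, candidates, n_ants=10, evaporation=0.05,
                 patience=5, seed=0):
    """Minimal ACO sketch over discretized variables (one value per variable)."""
    rng = random.Random(seed)
    # One pheromone level per candidate value of each variable.
    pheromone = [[1.0] * len(values) for values in candidates]
    best_path, best_val = None, float("inf")
    stale = 0
    while stale < patience:
        improved = False
        tours = []
        for _ in range(n_ants):
            # Each ant picks the variables in a fixed order, one value each,
            # with probability proportional to the pheromone level.
            idx = [rng.choices(range(len(values)), weights=pheromone[k])[0]
                   for k, values in enumerate(candidates)]
            value = objective(*(candidates[k][i] for k, i in enumerate(idx)))
            tours.append((value, idx))
            if value < best_val:
                best_path, best_val, improved = idx, value, True
        # Evaporation weakens every path, then the iteration-best is reinforced.
        for row in pheromone:
            for i in range(len(row)):
                row[i] *= 1.0 - evaporation
        _, iteration_best = min(tours)
        for k, i in enumerate(iteration_best):
            pheromone[k][i] += 1.0
        stale = 0 if improved else stale + 1
    return [candidates[k][i] for k, i in enumerate(best_path)], best_val


def surrogate_max_jerk(l, second, third):
    """Hypothetical objective used only to make the sketch runnable."""
    return (l - 138) ** 2 + (second - 350) ** 2 + (third - 30) ** 2


CANDIDATES = [list(range(130, 147)), list(range(315, 381)), list(range(25, 46))]
aco_best, aco_value = aco_minimize(surrogate_max_jerk, CANDIDATES)
```

With such a short patience, this sketch is not guaranteed to return the grid optimum; the quality of the result depends on the deposit rule and on the objective, which is why both are flagged as assumptions.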

2.5. Genetic Algorithm

The Genetic Algorithm (GA) was proposed by John Holland [33] and has been widely employed to select optimal features, especially in the development of wrapper feature selection methods [34]. It is considered one of the first and most widely applied metaheuristic algorithms. The design inspiration for GA comes from natural selection theory and genetic principles. The fundamental idea is to simulate the process of biological evolution, evolving individuals within a population over generations through genetic operations. This iterative evolution aims to produce individuals increasingly adapted to the environment, searching for the optimal solution.

Decision variables are considered chromosomes when GA is employed to address optimization problems. These chromosomes are updated according to the genetic principles of genes and undergo operations such as crossover and mutation. Subsequently, based on the values of their objective function, the best chromosomes are preserved through survival of the fittest. After multiple iterations, the chromosome satisfying the termination condition is regarded as the result of model parameter inversion. In their study on air conditioning systems, Zhao et al. [35] proposed an innovative angle control algorithm for air curtains using a GA-optimized quadratic BP neural network, achieving improved airtightness and real-time performance, which is crucial for enhancing energy efficiency and emission reduction. Tegegne and Kim [36] used Genetic Algorithms to develop and evaluate the operation rules of a reservoir reflecting the uncertainty of the reservoir inputs.

The classical Genetic Algorithm implemented in this document uses a crossover mechanism where one offspring inherits a single chromosome from the father and two chromosomes from the mother, or vice versa. The process then applies an elitist selection, choosing the better solution from the two offspring. In this case, each chromosome represents one of the three variable values, or genes, selected for the optimization process. After crossover, a random mutation is applied to one of the chromosomes of the elite offspring by altering neighboring genes, which helps introduce diversity to the population of ten individuals. The stopping criterion is set at 50 generations without improvement in the optimization value.

The discretization of possible genes is achieved by initializing variables and ensuring that mutations can only occur within predefined intervals for each variable. This approach guarantees that any geometrical value accepted as optimal remains within the defined range, and the most suitable values are represented among the genes in the population across generations. This method helps maintain the precision of the optimization process while ensuring that the search space is properly explored within the allowed boundaries.
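A minimal sketch of this scheme is given below, using the stated population of ten individuals, the one-plus-two crossover, the elitist choice between the two offspring, a neighboring-value mutation kept within the intervals, and a stop after 50 generations without improvement. The random parent selection, the replacement of the worst individual, and the quadratic objective `surrogate_max_jerk` are assumptions made for illustration, since the source does not describe them.

```python
import random


def ga_minimize(objective, candidates, pop_size=10, patience=50, seed=0):
    """Minimal GA sketch; each individual stores one index per variable."""
    rng = random.Random(seed)
    n_vars = len(candidates)

    def cost(individual):
        return objective(*(candidates[k][g] for k, g in enumerate(individual)))

    population = [[rng.randrange(len(values)) for values in candidates]
                  for _ in range(pop_size)]
    best = list(min(population, key=cost))
    best_val = cost(best)
    stale = 0
    while stale < patience:
        father, mother = rng.sample(population, 2)
        # Crossover: one offspring takes one value from the father and two
        # from the mother; the other offspring does the opposite.
        k = rng.randrange(n_vars)
        child_a = list(mother)
        child_a[k] = father[k]
        child_b = list(father)
        child_b[k] = mother[k]
        # Elitist choice between the two offspring.
        elite = list(min(child_a, child_b, key=cost))
        # Mutation: move one value to a neighbor, clamped to its interval.
        m = rng.randrange(n_vars)
        shifted = elite[m] + rng.choice((-1, 1))
        elite[m] = min(max(shifted, 0), len(candidates[m]) - 1)
        elite_val = cost(elite)
        # Assumed replacement rule: the offspring displaces the worst individual.
        worst = max(range(pop_size), key=lambda i: cost(population[i]))
        if elite_val < cost(population[worst]):
            population[worst] = elite
        if elite_val < best_val:
            best, best_val, stale = list(elite), elite_val, 0
        else:
            stale += 1
    return [candidates[k][g] for k, g in enumerate(best)], best_val


def surrogate_max_jerk(l, second, third):
    """Hypothetical objective used only to make the sketch runnable."""
    return (l - 138) ** 2 + (second - 350) ** 2 + (third - 30) ** 2


CANDIDATES = [list(range(130, 147)), list(range(315, 381)), list(range(25, 46))]
ga_best, ga_value = ga_minimize(surrogate_max_jerk, CANDIDATES)
```

Storing indices rather than raw values is one simple way to guarantee that crossover and mutation can only produce values inside the predefined intervals.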

3. Results

Using the brute force methodology, the minimum possible jerk value among all maximum jerk values was obtained, as well as the corresponding values of the design variables. The absolute optimal values found in the search space were the following:

;

;

.

Figure 7 shows the value of the maximum jerk for all the values evaluated in the brute force method. A global minimum value of jerk is observed that is expected to be reached by means of the metaheuristic methods.

From the behavior shown in Figure 7, 17 trend peaks are observed as the variable values change, corresponding to the 17 values of the variable L. The jerk values in Figure 7 range between 112,890 N/mm³ and 80,049 N/mm³. This indicates that a 29% reduction in jerk is possible, from the maximum jerk value (112,890) to the optimized jerk value (80,049), as calculated using Equation (10).
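The reported reduction can be checked with a short calculation. The sketch assumes that Equation (10) is the usual relative change with respect to the larger value; the function name `percent_reduction` is introduced here only for illustration.

```python
def percent_reduction(reference, optimized):
    """Relative reduction, in percent, with respect to the reference value."""
    return 100.0 * (reference - optimized) / reference


# Maximum and optimized jerk values reported for the brute force search.
jerk_reduction = percent_reduction(112_890, 80_049)
```

Under this assumption the result is approximately 29.1%, consistent with the 29% stated above.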

Figure 8 shows the graph obtained from the maximum jerk values as a function of iteration for the Ant Colony Algorithm. It is observed that the algorithm reached the optimal value in iteration 4. This shows high efficiency in finding the best solution.

Figure 9 illustrates the graph of maximum jerk values as a function of iteration for the Genetic Algorithm. The optimal value is reached at iteration 52. Due to the inherent nature of Genetic Algorithms, multiple iterations, or generations, are typically required to converge on the best solution. It is noteworthy that while the algorithm quickly achieves an acceptable level of optimization in the early iterations, reaching the true optimal point needs further refinement of the chromosomes across several generations.

Figure 10 shows the results obtained from the maximum jerk values as a function of iteration for the Sine Cosine Algorithm. It is observed that the algorithm reached the optimal value in iteration 491.

The results obtained using both the Genetic Algorithm and the Ant Colony Algorithm matched those from the brute force method but were achieved in significantly shorter times.

Table 1 presents a comparison of the relative and absolute errors between the SCA method and the brute force method. This comparison is essential because SCA uses a continuous search approach, unlike the discrete methodology applied in the brute force method.

It can be observed that the highest error among the design variables was a relative error of 7%, corresponding to an absolute error of 1.75 mm. However, it is important to note that the relative error of the objective value, the maximum jerk, was only 0.465%. This indicates that while this variable has a larger margin of error, it does not significantly influence the optimization of the jerk value. Therefore, the 7% error can be considered acceptable within the context of the overall optimization.

Figure 11 presents a comparison of the execution times for each algorithm, averaged over 10 random runs. On one hand, both the Ant Colony and Genetic Algorithms consistently find the optimal solution in each run. On the other hand, while the Sine Cosine Algorithm yields varying results across runs, the variations are not significant.

The reduction in execution time with respect to the brute force method was computed using Equation (11).

With this equation, a reduction of 94% in execution time was obtained for the Sine Cosine and Genetic Algorithms. Also, a reduction of 98% was obtained for the Ant Colony Algorithm.

4. Discussion

The sinusoidal random search characteristic of the Sine Cosine Algorithm (SCA) typically allows for finding an optimal function value within just a few iterations. However, in this instance, it took 491 iterations to arrive at the result, which is approximately half of the predetermined maximum number of iterations. The stopping criterion for this method was set to the maximum number of iterations to ensure that the algorithm could continue searching for an absolute minimum value around the optimal point that had already been identified. Since the search occurs within a continuous interval rather than a discrete set of possibilities, it is expected that the SCA may not achieve the absolute minimum identified by the brute force method. The constraints on the possible values due to interval restrictions limit the algorithm’s sinusoidal nature. This can negatively impact the algorithm’s random search capability, leading it to converge on a local minimum from which it does not improve, even after completing all iterations. Nonetheless, the absolute and relative errors in the results are small, as shown in Table 1. Notably, the maximum jerk values obtained by the two methods differ by only 0.465%, indicating that the difference is not significant and that SCA could be an effective option in the optimization process.

On the other hand, the Genetic Algorithm and the Ant Colony Algorithm achieved a value equal to the one determined by brute force. This means that accuracy is not a problem for these algorithms when a correct discretization of possible solutions in the search space is implemented.

The difference in processing time among the metaheuristic methods does not exceed 1 s, and in all cases the execution time is less than 6% of the time needed by the brute force method, which is highly desirable.

The Ant Colony Algorithm is the most efficient metaheuristic algorithm for this optimization, as both the processing time and the number of internal iterations of the algorithm are the smallest among all those used.

The 29% reduction in jerk values achieved through the dimensional optimization of the mechanism has a significant positive impact on the daily handling of carnation stems. After implementing physical modifications to the machine based on the optimal values, Florval observed a decrease in mechanical damage to the stems from 6% to 2%, according to the monthly production summary. Furthermore, the interval between maintenance stops was extended from one hour to three hours, minimizing downtime on the production line. This optimization not only enhances operational efficiency but also reduces mechanical wear, contributing to a longer lifespan for the equipment.