4.1. Discussion

The results of this study underscore the importance of integrating multi-modal data with attention mechanisms to improve the accuracy of automated assessment systems in medical training. By combining 2D image data with 3D reconstructed data, the proposed multi-modal fusion model with multi-head self-attention outperformed both single-modal approaches. Specifically, the 2D image-based single-modal model reached an accuracy of 0.5913 and the 3D reconstructed data model reached 0.6573, whereas the multi-modal approach reached 0.7238. This gain in accuracy, together with the other metrics of the fused model, including AUC (0.8343), precision (0.7339), and F1-score (0.7060), indicates that the model differentiates between skill levels more reliably than either single-modal model.
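To make the size of the improvement concrete, the reported accuracies can be converted into absolute gains (percentage points) and relative gains. The short sketch below uses only the three accuracy values quoted above; the dictionary keys and the helper name `gains` are illustrative and do not come from the study.

```python
# Accuracies as reported in the paragraph above.
accuracy = {
    "2D images only": 0.5913,
    "3D reconstruction only": 0.6573,
    "multi-modal fusion": 0.7238,
}


def gains(baseline: float, improved: float) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute_pp = (improved - baseline) * 100
    relative_pct = (improved - baseline) / baseline * 100
    return absolute_pp, relative_pct


fused = accuracy["multi-modal fusion"]
for name, value in accuracy.items():
    if name == "multi-modal fusion":
        continue  # the fused model is the reference, not a baseline
    abs_pp, rel_pct = gains(value, fused)
    print(f"{name}: +{abs_pp:.2f} pp ({rel_pct:.1f}% relative)")
```

Under this reading, fusion adds about 13.25 percentage points over the 2D-only model and about 6.65 percentage points over the 3D-only model.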

Moreover, the use of multi-head self-attention mechanisms further distinguishes our approach from existing methods by enabling the model to focus on relevant aspects of the fused data. This attention mechanism allows the model to better capture nuanced differences in hand movements and task progression, leading to an improved classification of skill levels. This is particularly critical in medical education, where subtle variations in performance can significantly impact clinical outcomes.
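For reference, the standard formulation of multi-head self-attention is reproduced below. This section does not state the exact equations used in the model, so the block assumes the usual scaled dot-product form; the symbols $X$ (the fused feature sequence), $W_i^{Q}$, $W_i^{K}$, $W_i^{V}$, $W^{O}$ (learned projection matrices), $d_k$ (key dimension), and $h$ (number of heads) are notation introduced here for illustration.

```latex
\begin{aligned}
\mathrm{Attention}(Q, K, V) &= \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V, \\
\mathrm{head}_i &= \mathrm{Attention}\!\left(X W_i^{Q},\; X W_i^{K},\; X W_i^{V}\right), \quad i = 1, \dots, h, \\
\mathrm{MultiHead}(X) &= \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\, W^{O}.
\end{aligned}
```

Each head computes its own attention weights over the sequence, which is what lets different heads attend to different aspects of the fused data.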

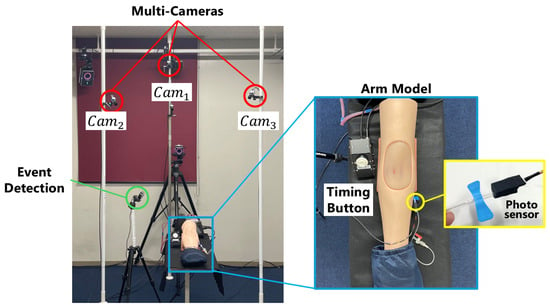

This study contributes to AI-driven solutions for medical education, particularly skill assessment, by proposing a multi-modal fusion network that integrates 3D reconstructed data and 2D images. The network uses an LSTM and a CNN to extract temporal and spatial features, and a self-attention mechanism allows the model to capture critical moments during the injection process. This architecture provides a more comprehensive evaluation of hand movements in medical training, as reflected in its improvement over the single-modal models (0.5913 and 0.6573 accuracy for the CNN and LSTM models, respectively, versus 0.7238 for the fused model). Furthermore, extensive experiments validated the effectiveness of the model, demonstrating higher accuracy than existing methods while enabling objective, quantitative assessment of the injection process.

However, although our model outperforms traditional machine learning methods such as Gradient Boosting Decision Trees (GBDT) and Random Forest, as well as other deep learning models such as CNN- and LSTM-based approaches, there are still areas for improvement. The confusion matrices and ROC curves indicate that the model performs well in identifying high skill levels (Class 5) but struggles to distinguish intermediate skill levels (Class 4) from lower levels (Class 3). This suggests that the model's sensitivity to subtle differences between intermediate and lower skill levels needs further refinement.
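The kind of reading described above can be illustrated with a small sketch that derives per-class recall and the most frequently confused pair of classes from a confusion matrix. The counts below are invented purely for illustration and are not the study's results; the restriction to three classes and the function names are likewise assumptions.

```python
# HYPOTHETICAL confusion matrix (rows = true class, columns = predicted class).
# These counts are invented for illustration and are NOT the study's data.
labels = [3, 4, 5]
confusion = [
    [30, 15, 5],   # true Class 3
    [18, 25, 7],   # true Class 4
    [3, 5, 42],    # true Class 5
]


def per_class_recall(matrix):
    """Fraction of each true class that was predicted correctly."""
    return [row[i] / sum(row) for i, row in enumerate(matrix)]


def most_confused_pair(matrix):
    """Return ((i, j), count) for the class pair with the most mutual errors."""
    best, best_count = None, -1
    n = len(matrix)
    for i in range(n):
        for j in range(i + 1, n):
            count = matrix[i][j] + matrix[j][i]  # errors in both directions
            if count > best_count:
                best, best_count = (i, j), count
    return best, best_count


recalls = per_class_recall(confusion)
pair, count = most_confused_pair(confusion)

for label, recall in zip(labels, recalls):
    print(f"Class {label}: recall = {recall:.2f}")
print(f"Most confused: Class {labels[pair[0]]} vs Class {labels[pair[1]]} "
      f"({count} samples)")
```

With these made-up counts, Class 5 has the highest recall, and Classes 3 and 4 account for most of the mutual misclassifications, which mirrors the pattern described in the text.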

Additionally, our study contributes to the growing body of research advocating for AI-driven solutions in the post-pandemic era of medical education. The disruption caused by COVID-19 has accelerated the need for remote and scalable training tools, and our proposed multi-modal fusion model represents a step toward fulfilling that need. By providing a data-driven, objective method of skill assessment, this model could enhance the quality and consistency of medical education, particularly in settings where traditional face-to-face instruction is not feasible.

4.3. Conclusions

In conclusion, this study introduces a novel multi-modal fusion model with multi-head self-attention for the automated assessment of hand injection training in medical education. By combining 2D image data with 3D reconstructed motion data, the model achieves a notable improvement over single-modal approaches with an accuracy of 0.7238 and an AUC of 0.8343. These findings underscore the importance of integrating multi-modal data to provide a more accurate and comprehensive evaluation of medical skills.

Our model demonstrates strong performance in distinguishing between different skill levels, particularly in predicting high and low skill levels. However, challenges remain in consistently classifying intermediate skill levels, which could be addressed by refining the attention mechanisms or incorporating additional datasets.

This study holds significant implications for the future of medical education, even though it is still in its early stages. The post-pandemic era has underscored the need for innovation, particularly in remote training and automated assessment tools, which are becoming increasingly vital. Our proposed approach contributes to the growing body of AI-based tools that aim to enhance clinical education by providing scalable, reliable, and objective data-driven evaluations. Future work will focus on further enhancing the model’s capabilities by integrating more diverse datasets and exploring the introduction of advanced deep learning techniques, such as 3D-CNN, to improve performance and adaptability in real-world training environments.

Zhe Li www.mdpi.com