1. Introduction

Most Internet transactions today are online purchases, which have become increasingly important because of the COVID-19 pandemic [1]. Customers try to minimize the cost of their purchases by taking into account the discounts offered by online stores, since prices change daily depending on the advertised offers. The Internet shopping problem was formally defined by Błażewicz [2], who also showed that it is NP-hard by constructing a pseudo-polynomial transformation from the Exact Cover by 3-Sets problem (P1).

Metaheuristic algorithms are widely used to solve optimization problems. They are generally used to find optimal or near-optimal solutions to NP-hard optimization problems [3]. A study by Li [4] identifies the main trends and application areas of metaheuristic algorithms, such as logistics, manufacturing, artificial intelligence, and computer science; the main reason for their popularity is their flexibility in addressing NP-hard problems. Valencia-Rivera [5] presents a comprehensive analysis of the trends and impact of the metaheuristic algorithms most frequently used to solve optimization problems today, including particle swarm optimization, genetic algorithms, and ant colony optimization.

Therefore, developing new solution methods and metaheuristics is essential for identifying which stores offer the best discounts for a given shopping list. This problem is known as the Internet shopping problem with sensitive prices (IShOPwD); related works are described below.

Błażewicz [6] designed the first IShOPwD model, considering only the customer's perspective. This model addresses a scenario in which a client aims to buy several products from multiple online stores, minimizing the total cost of all products with the applicable discounts incorporated into that cost. The solution method developed is a branch-and-bound (BB) algorithm.

Musial [7] proposes a set of algorithms (Greedy Algorithm (GA), Forecasting Algorithm (FA), Cellular Processing Algorithm (CPA), MinMin Algorithm, and a branch-and-bound algorithm) to solve the IShOPwD. These algorithms generate solutions at different computation times, aiming to achieve results close to the optimal solution. Their results are compared with the optimal solutions calculated using BB.

In the work of Błażewicz et al. [8], the authors define and study several price-sensitive extensions of the IShOP. These include the IShOP with price-sensitive discounts and a newly defined optimization problem: the IShOP with two discount functions (a shipping cost discount function and a product price discount function). They formulate mathematical programming models, develop several algorithms, including a new forecasting heuristic, and carry out exhaustive feasibility tests.

Another related work is that of Chung and Choi [9], who introduce an optimal bundle search problem (OBSP) that can be integrated with an online recommendation system (Bundle Search Method) to provide an optimized service considering pairwise discounting and delivery costs. The results are integrated into an online recommendation system to support user decision-making.

Mahrundinda [10] and Morales [11] analyze the trends and solution methods applied to the IShOP and find that, to date, no non-deterministic metaheuristics have been used to solve the IShOPwD. We therefore decided to adopt the solution methods proposed by Huacuja [12] and García-Morales [13], metaheuristic algorithms that provide the best results for the IShOP variant with shipping costs.

In this research, we develop two metaheuristic algorithms (a Memetic Algorithm (MAIShOPwD) and a particle swarm optimization algorithm (PSOIShOPwD)) that allow us to solve the Internet shopping problem with sensitive prices.

The main contributions of the proposed MAIShOPwD algorithm are as follows:

A novel method for calculating changes in the objective values of the current solution during local search, with a time complexity of .

Adaptive adjustment of control parameters.

On the other hand, the contributions of the proposed PSOIShOPwD algorithm are as follows:

A revised approach derived from the bandit-based adaptive operator selection technique Fitness-Rate-Rank-Based Multiarmed Bandit (FRRMAB) [14], used to adaptively adjust the control parameters in both proposed algorithms.

A series of feasibility experiments, including the instances used in [12], were carried out to identify the advantages of the proposed algorithms. Non-parametric tests were then applied with a significance level of 5% to draw conclusions.

This research comprises the following sections:

Section 2 formally defines the problem addressed.

Section 3 describes the main components of the proposed algorithms.

Section 4 and Section 5 describe the general structure of the MAIShOPwD and PSOIShOPwD algorithms, respectively.

Section 6 describes the computational experiments carried out to validate the feasibility of the proposed algorithms.

Section 7 presents the results obtained through the Wilcoxon and Friedman non-parametric tests.

Section 8 presents the conclusions and potential areas for future work.

2. Definition of the Problem

The data set includes the products available in each store. Each product $i$ has a cost $c_{ij}$ in store $j$, and each store $j$ has a shipping cost $d_j$. The shipping cost $d_j$ is added if the client purchases one or more items from store $j$.

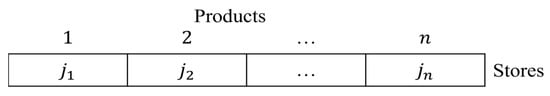

Formally, the problem considers a client who aims to purchase a specified set of products $N = \{1, \dots, n\}$ from a set of online stores $M = \{1, \dots, m\}$ at the lowest total cost, including a discount that is applied in the calculation of the objective function. The objective value of a solution $X$ is calculated using Equation (1):

A candidate solution is represented by a vector $X = (x_1, \dots, x_n)$ of length $n$, where $x_i \in M$ specifies the store from which product $i$ should be purchased. A more detailed description can be found in the work of Błażewicz et al. [6].

Equation (2) gives the criteria used to select the discount applied to the total cost of the shopping list.
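Equations (1) and (2) are not reproduced in this text, so the following is only a minimal sketch of an objective of this kind, under assumptions we state explicitly: the discount is a rate selected by thresholds on the total cost (products plus shipping), and the tier values in the hypothetical `TIERS` table are invented for illustration and are not the paper's values.

```python
from typing import List, Sequence, Tuple

# Hypothetical discount tiers (minimum total cost, discount rate), sorted by
# threshold. They stand in for Equation (2); they are NOT the paper's values.
TIERS: List[Tuple[float, float]] = [(0.0, 0.00), (100.0, 0.05), (200.0, 0.10)]


def discount_rate(total: float, tiers: Sequence[Tuple[float, float]] = TIERS) -> float:
    """Rate of the highest tier whose threshold the total reaches."""
    rate = 0.0
    for threshold, r in tiers:
        if total >= threshold:
            rate = r
    return rate


def objective(x: Sequence[int], c: Sequence[Sequence[float]], d: Sequence[float]) -> float:
    """x[i]: store chosen for product i; c[i][j]: cost of product i in store j;
    d[j]: shipping cost of store j, charged once if any product is bought there."""
    products = sum(c[i][j] for i, j in enumerate(x))
    shipping = sum(d[j] for j in set(x))  # each used store is charged once
    total = products + shipping
    return total - total * discount_rate(total)
```

For example, with `c = [[50, 60], [70, 40]]` and `d = [10, 20]`, the solution `[0, 1]` has a total of 120, reaches the hypothetical 5% tier, and evaluates to 114.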

4. The General Structure of the Memetic Algorithm

Huacuja [12] introduced a Memetic Algorithm to tackle the IShOP with shipping costs. The results obtained by this Memetic Algorithm suggest that it could feasibly be used to solve other IShOP variants. Building on this contribution, we improved its local search to significantly increase the quality and efficiency of the results for the IShOPwD. Additionally, following the contribution of García-Morales [17], we added a mechanism for the adaptive adjustment of some control parameters.

4.1. Heuristics Used

The heuristics used to generate candidate solutions, called best first and best [12], are described below. These heuristics identify, for each product, the store where it can be purchased at the lowest cost, considering the discount associated with the total cost of the shopping list. For more details on how these heuristics operate, see [12].

4.1.1. Heuristic: Best First

The best first heuristic changes the store assigned to each product in the solution vector until it finds the first change that reduces the cost; this process is repeated for all products in the shopping list. This heuristic has a time complexity of

[

12].
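A minimal sketch of one reading of the best first description: for each product, stores are scanned in index order and the first store that lowers the total cost is kept. The `total_cost` helper and the `discount` callable (total cost mapped to a discount amount) are our own illustrative assumptions; the code re-evaluates the full objective for clarity, not efficiency.

```python
from typing import Callable, List, Sequence

Discount = Callable[[float], float]  # maps total cost -> discount amount (assumption)


def total_cost(x: Sequence[int], c, d, discount: Discount) -> float:
    """Product costs + one shipping charge per used store - discount on the total."""
    t = sum(c[i][j] for i, j in enumerate(x)) + sum(d[j] for j in set(x))
    return t - discount(t)


def best_first(x: Sequence[int], c, d, discount: Discount = lambda t: 0.0) -> List[int]:
    """First-improvement pass: for each product, keep the first store that lowers the cost."""
    x = list(x)
    for i in range(len(x)):
        current = total_cost(x, c, d, discount)
        original = x[i]
        for j in range(len(d)):           # scan stores in index order
            if j == original:
                continue
            x[i] = j
            if total_cost(x, c, d, discount) < current:
                break                     # first improvement found: stop scanning
            x[i] = original               # no improvement: undo the change
    return x
```

With `c = [[10, 5, 2], [3, 8, 9]]` and zero shipping costs, starting from `[0, 0]`, product 0 moves to store 1 (the first improving store), even though store 2 would be cheaper.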

4.1.2. Heuristic: The Best

The best heuristic works like the best first heuristic, except that it does not stop at the first improvement in the cost of a product: it continues to search all stores and assigns the one with the lowest cost. The process concludes when all stores have been checked for every product in the solution vector. The best heuristic has a complexity of

[

12].
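Under the same illustrative assumptions as before (a hypothetical `total_cost` helper and a `discount` callable returning a discount amount), the best heuristic can be sketched as a best-improvement pass that examines every store before committing:

```python
from typing import Callable, List, Sequence


def total_cost(x: Sequence[int], c, d, discount: Callable[[float], float]) -> float:
    """Product costs + one shipping charge per used store - discount on the total."""
    t = sum(c[i][j] for i, j in enumerate(x)) + sum(d[j] for j in set(x))
    return t - discount(t)


def best(x: Sequence[int], c, d, discount=lambda t: 0.0) -> List[int]:
    """Best-improvement pass: for each product, move it to the store with the lowest total."""
    x = list(x)
    for i in range(len(x)):
        best_store, best_value = x[i], total_cost(x, c, d, discount)
        for j in range(len(d)):           # examine every store, no early stop
            x[i] = j
            value = total_cost(x, c, d, discount)
            if value < best_value:
                best_store, best_value = j, value
        x[i] = best_store                 # commit the best store found
    return x
```

On the instance `c = [[10, 5, 2], [3, 8, 9]]` with zero shipping costs, starting from `[0, 0]`, this pass moves product 0 directly to store 2, whereas a first-improvement pass would stop at store 1.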

4.2. Binary Tournament

Two individuals are randomly selected from the population at a time, and the fitter of the two advances to the next generation. Each individual participates in two comparisons, and the winners form a new population; this ensures that each individual has at most two copies in the new population. A more extensive description of the binary tournament can be found in [12].
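One common way to guarantee that every individual takes part in exactly two comparisons is to run two rounds, each over a fresh random permutation of the population. The sketch below follows that approach; it assumes an even population size (with an odd size, one individual sits out each round) and that a lower cost is fitter.

```python
import random
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def binary_tournament(pop: Sequence[T], cost: Callable[[T], float], rng: random.Random) -> List[T]:
    """Two shuffled rounds of pairwise duels; the lower-cost individual of each pair wins."""
    winners: List[T] = []
    for _ in range(2):                                # every individual duels once per round
        order = list(range(len(pop)))
        rng.shuffle(order)
        for a, b in zip(order[0::2], order[1::2]):    # pair consecutive shuffled indices
            pa, pb = pop[a], pop[b]
            winners.append(pa if cost(pa) <= cost(pb) else pb)
    return winners
```

Because each individual appears once per round, the best individual always wins both of its duels (two copies) and the worst never survives, which matches the at-most-two-copies property described above.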

4.3. Crossover Mechanism

The crossover mechanism is applied sequentially to a certain percentage of the individuals. This operator selects two solution vectors, called parent 1 and parent 2. Both parent vectors are divided in half to generate two children. The first offspring is created by combining the first half of parent 1 with the second half of parent 2. The second offspring is formed by merging the first half of parent 2 with the second half of parent 1.
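A minimal sketch of this midpoint crossover on store-assignment vectors. How the original handles odd-length vectors is not stated; here we assume the first half is `n // 2` elements long.

```python
from typing import List, Sequence, Tuple


def half_crossover(p1: Sequence[int], p2: Sequence[int]) -> Tuple[List[int], List[int]]:
    """Split both parents at the midpoint and swap their second halves."""
    if len(p1) != len(p2):
        raise ValueError("parents must have the same length")
    h = len(p1) // 2                  # odd lengths: first half is the shorter one (assumption)
    child1 = list(p1[:h]) + list(p2[h:])
    child2 = list(p2[:h]) + list(p1[h:])
    return child1, child2
```

For example, crossing `[0, 0, 0, 0]` with `[1, 1, 1, 1]` yields `[0, 0, 1, 1]` and `[1, 1, 0, 0]`.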

4.4. Mutation Operator

This operator selects a percentage of elite solutions from the population. It then goes through each elite solution and generates a random number; if this number is less than the mutation probability parameter, the heuristic mutation process is applied to that elite solution. Detailed information on the mutation heuristic can be found in [12].
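The probability gate described above can be sketched as follows. The mutation heuristic itself is detailed in [12] and is not reproduced; `random_reassign` is a hypothetical placeholder (move one random product to a random store) used only so that the example runs, and the rounding of the elite count is our own assumption.

```python
import random
from typing import Callable, List, Sequence

Mutation = Callable[[List[int], random.Random], List[int]]


def mutate_elite(pop: Sequence[Sequence[int]], costs: Sequence[float], elite_rate: float,
                 p_mut: float, mutate: Mutation, rng: random.Random) -> List[List[int]]:
    """Select the elite fraction (lowest cost first) and mutate each one with probability p_mut."""
    n_elite = max(1, round(elite_rate * len(pop)))
    elite = sorted(range(len(pop)), key=lambda k: costs[k])[:n_elite]
    result = []
    for k in elite:
        sol = list(pop[k])
        if rng.random() < p_mut:          # random draw below the mutation probability -> mutate
            sol = mutate(sol, rng)
        result.append(sol)
    return result


def random_reassign(m: int) -> Mutation:
    """Hypothetical placeholder mutation: move one random product to a random store."""
    def op(sol: List[int], rng: random.Random) -> List[int]:
        sol = list(sol)
        sol[rng.randrange(len(sol))] = rng.randrange(m)
        return sol
    return op
```

With `p_mut = 0` the elite solutions pass through unchanged; with `p_mut = 1` every elite solution is mutated, since `random.random()` always returns a value below 1.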

4.5. Improved Local Search

The local search algorithm improves a given solution vector by changing the store assigned to each product so as to reduce the solution's total cost. Although this procedure improves a given solution, it is computationally costly, so it was modified to reduce the cost of determining the change in the objective value of the current solution. Let $X$ be the current solution and $X'$ the solution obtained when the store from which product $i$ should be bought changes from $j$ to $k$. The change in the objective value of the current solution is given by $\Delta = F(X') - F(X)$, where $F$ denotes the objective function of Equation (1). If $\Delta$ is computed directly using Equation (1), the whole objective function must be re-evaluated; Theorem 1 shows how to calculate $\Delta$ more efficiently.

Theorem 1. .

Proof. Let $X$ be the current solution and $X'$ the solution obtained when the store from which product $i$ should be bought changes from $j$ to $k$.

Then, using Equation (6):

Therefore, = . □

The local search tries to improve the current solution by changing the stores assigned to the products. For each product, it identifies the store that produces the largest reduction in the objective value. To determine whether changing the store assigned to a product reduces the objective value, Theorem 1 is applied at every step of the search. Because this evaluation is repeated many times, computing it efficiently has a significant impact on local search performance.
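The statement of Theorem 1 is not reproduced in this text, so the following is only a minimal sketch of how such an incremental evaluation can work, derived under our own assumption that the objective equals $T - D(T)$, where $T$ is the product cost plus the shipping cost of the used stores and the discount amount $D$ depends only on $T$. Keeping `count[j]` (products bought from store $j$) and $T$ up to date makes each candidate move cost O(1) apart from one evaluation of the discount. It is not a transcription of the paper's Theorem 1.

```python
from typing import Callable, List, Sequence, Tuple

Discount = Callable[[float], float]  # total cost -> discount amount (assumed form)


def delta_move(i: int, k: int, x: Sequence[int], count: Sequence[int], total: float,
               c, d, discount: Discount) -> Tuple[float, float]:
    """Change in objective (and new pre-discount total) if product i moves to store k."""
    j = x[i]
    if j == k:
        return 0.0, total
    dt = c[i][k] - c[i][j]
    if count[k] == 0:
        dt += d[k]          # store k becomes used: its shipping cost is now paid
    if count[j] == 1:
        dt -= d[j]          # product i was the only item from store j: shipping saved
    new_total = total + dt
    return (new_total - discount(new_total)) - (total - discount(total)), new_total


def local_search(x: Sequence[int], c, d, discount: Discount = lambda t: 0.0) -> Tuple[List[int], float]:
    """For each product, apply the store change with the largest objective reduction."""
    x = list(x)
    count = [0] * len(d)
    for j in x:
        count[j] += 1
    total = sum(c[i][j] for i, j in enumerate(x)) + sum(d[j] for j in range(len(d)) if count[j])
    for i in range(len(x)):
        best_k, best_delta, best_total = x[i], 0.0, total
        for k in range(len(d)):
            delta, new_total = delta_move(i, k, x, count, total, c, d, discount)
            if delta < best_delta:
                best_k, best_delta, best_total = k, delta, new_total
        if best_k != x[i]:                # commit the move and update the bookkeeping
            count[x[i]] -= 1
            count[best_k] += 1
            x[i], total = best_k, best_total
    return x, total - discount(total)
```

A simple way to validate such a formula is to compare every `delta_move` value against a full re-evaluation of the objective on small instances.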

4.6. Proposed Memetic Algorithm

Algorithm 3 shows the structure of the proposed MAIShOPwD algorithm. In steps 1 and 2, the initial parameter values are defined and the instance to be used is loaded. In steps 3 to 7, an initial population is generated, the objective value and discount of every individual are calculated, and the best local and global solutions are identified. In step 9, the binary tournament is applied to the population, and a new population is generated from its results. In step 10, a percentage of elite solutions is moved from the new population to an intermediate population. In step 11, the crossover operator is applied to the new population to generate the missing elements of the intermediate population. In steps 12 and 13, the mutation operator and the improved local search are applied to the intermediate population to obtain a population of children. In step 15, the best local solution is checked to determine whether it is better than the best global solution; if so, the global solution is updated. In steps 16 and 17, the population is reset: the best global solution is inserted, and all other individuals are discarded and replaced by randomly generated solutions. In steps 18, 19 and 20, the sliding window is updated and the rewards of the adaptive control parameter adjustment method are ranked. Step 21 ends each iteration, and finally, in step 22, the best global solution and its cost are returned.

Algorithm 3. MAIShOPwD Algorithm.

Input: maximum number of iterations

Instance: m (stores), n (products), cij (product cost), dj (shipping cost)

Parameters/Variables:

ps: initial population size

crossover probability

mutation probability

elitism rate

pop: initial population

BestGlobalSolution, BestGlobalCost: best overall solution and cost

BestLocalSolution, BestLocalCost: best solution and cost in each generation

Functions:

BestGlobal(pop, BestGlobalSolution, BestGlobalCost): from pop, obtain the BestGlobalSolution and the BestGlobalCost

apply the mutation operator to all the solutions in IntermediatePop and move the offspring to ChildPop

apply the improved local search to all solutions in IntermediatePop and move the offspring to ChildPop

obtain the BestLocalSolution and the BestLocalCost in ChildPop

calculate the objective value

apply the discount to the objective value

obtain the index for executing an action

perform an action based on an index

a sliding window that stores both the frequency of action execution and the decrease in individual costs

enhance the rewards held within the sliding window

Output: BestGlobalSolution (best overall solution) and BestGlobalCost (best overall cost)

1: Define the initial parameter values

2: Load Instance

3–5: Generate pop and calculate the objective value and discount of each solution

6: BestGlobal(pop, BestGlobalSolution, BestGlobalCost)

7: BestLocalSolution = BestGlobalSolution; BestLocalCost = BestGlobalCost;

8: while the maximum number of iterations has not been reached do

9: Apply the binary tournament to pop to generate NewPop

10: Move from NewPop to IntermediatePop the elite solutions

11: Apply the crossover operator to NewPop to generate the missing elements of IntermediatePop

12: Apply the mutation operator to IntermediatePop and move the offspring to ChildPop

13: Apply the improved local search to IntermediatePop and move the offspring to ChildPop

14: Obtain BestLocalSolution and BestLocalCost in ChildPop

15: if BestLocalCost < BestGlobalCost then BestGlobalCost = BestLocalCost; BestGlobalSolution = BestLocalSolution

16: Reset pop and insert BestGlobalSolution

17: Randomly generate ps − 1 solutions and add each solution to pop

18–19: Update the sliding window

20: Rank Rewards

21: end while

22: return BestGlobalSolution, BestGlobalCost
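The sliding window and reward ranking in steps 18 to 20 come from FRRMAB [14]. The paper uses a revised version whose changes are not described here, so the following is a minimal sketch of the standard FRRMAB only: rewards are summed over a sliding window, ranked, decayed by rank with factor `decay`, normalized, and combined with a UCB exploration term scaled by `c`. Mapping each arm to a candidate value of a control parameter (for example, a crossover probability) and using a fitness improvement rate such as (parent cost − child cost) / parent cost are our own assumptions; the default parameter values are illustrative.

```python
import math
from collections import deque
from typing import Deque, List, Tuple


class FRRMAB:
    """Fitness-Rate-Rank-Based Multi-Armed Bandit (standard form, not the paper's revision)."""

    def __init__(self, n_arms: int, window: int = 50, decay: float = 1.0, c: float = 5.0):
        self.n_arms = n_arms
        self.decay = decay            # D: decay factor applied by rank
        self.c = c                    # C: exploration scaling of the UCB term
        self.window: Deque[Tuple[int, float]] = deque(maxlen=window)  # (arm, FIR)

    def record(self, arm: int, fir: float) -> None:
        """Store the fitness improvement rate obtained after applying `arm`."""
        self.window.append((arm, fir))

    def _frr_and_counts(self) -> Tuple[List[float], List[int]]:
        reward = [0.0] * self.n_arms
        count = [0] * self.n_arms
        for arm, fir in self.window:          # only the last `window` records count
            reward[arm] += fir
            count[arm] += 1
        ranked = sorted(range(self.n_arms), key=lambda a: reward[a], reverse=True)
        decayed = [0.0] * self.n_arms
        for rank, arm in enumerate(ranked):   # rank 0 = highest reward
            decayed[arm] = self.decay ** rank * reward[arm]
        total = sum(decayed)
        frr = [v / total if total > 0 else 0.0 for v in decayed]
        return frr, count

    def select(self) -> int:
        """Pick an arm not yet in the window, otherwise the one maximizing FRR + UCB bonus."""
        frr, count = self._frr_and_counts()
        for arm in range(self.n_arms):
            if count[arm] == 0:
                return arm
        n = sum(count)
        return max(range(self.n_arms),
                   key=lambda a: frr[a] + self.c * math.sqrt(2 * math.log(n) / count[a]))
```

Starting ranks at 0 instead of 1 only rescales every decayed reward by the same factor, so the normalized FRR values are unchanged.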

7. Results

Table 3, Table 4 and Table 5 show the outcomes of the computational experiments, to which the non-parametric Wilcoxon test is applied with a significance level of 5%. In each table, the first column corresponds to the evaluated instance. The second and fourth columns show the objective value and the time at which the best solution is found, achieved by the reference algorithm, with the standard deviation as a subindex. Correspondingly, the third and fifth columns show these values for the comparison algorithm. Columns six and seven show the p-values obtained by the Wilcoxon test for the objective value and the shortest time, respectively. The cells shaded in gray mark the lowest objective value or the shortest time at which the best solution is found, according to each column. The symbol ↑ indicates a significant difference in favor of the reference algorithm, the symbol ↓ indicates a significant difference in favor of the comparison algorithm, and the symbol = indicates no significant difference.

Based on the Wilcoxon test results, Table 3 shows that the proposed MAIShOPwD algorithm outperforms the state-of-the-art algorithm in terms of the objective value in six out of nine instances. Regarding the shortest time metric, the MAIShOPwD algorithm performs better in four out of the nine instances evaluated.

The Wilcoxon test results shown in Table 4 indicate that the proposed PSOIShOPwD algorithm outperforms the state-of-the-art algorithm in six out of nine instances when evaluating the objective value. Regarding the shortest time metric, it outperforms the state-of-the-art algorithm in eight out of nine instances.

The Wilcoxon test results shown in Table 5 indicate that the two proposed algorithms perform similarly overall in terms of the objective value: the MAIShOPwD algorithm performs better on medium instances, whereas the PSOIShOPwD algorithm performs better on large instances.

Table 6 contains the results of the Friedman test at a 5% significance level. The first column lists the instances, while the second and third columns show the objective value and the time achieved by the state-of-the-art algorithm. The fourth and fifth columns present the same data for the MAIShOPwD algorithm, and the sixth and seventh columns for the PSOIShOPwD algorithm. The last two columns contain the p-values obtained by the Friedman test for each instance.

The Friedman test results indicate that, on small instances, there are no significant differences in objective value among the three algorithms. On medium-sized instances, MAIShOPwD performs best, and on large instances, PSOIShOPwD performs best. In terms of time, the two proposed algorithms outperform the state-of-the-art algorithm.
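As a minimal sketch of the Friedman statistic for three algorithms, the code below ranks the algorithms within each block (lower value = rank 1), sums the ranks, and computes $Q = \frac{12}{nk(k+1)}\sum_j R_j^2 - 3n(k+1)$. With $k = 3$ the chi-square reference distribution has 2 degrees of freedom, whose survival function is exactly $e^{-Q/2}$. We assume each block is one run of an instance and apply no tie correction, so with tied values the p-value is slightly conservative compared with `scipy.stats.friedmanchisquare`.

```python
import math
from typing import List, Sequence, Tuple


def _average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks within one block; ties receive the average rank."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for t in range(start, end + 1):
            ranks[order[t]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def friedman_three(blocks: Sequence[Sequence[float]]) -> Tuple[float, float, List[float]]:
    """Friedman statistic, p-value (chi-square, 2 df) and mean ranks for 3 algorithms."""
    k = 3
    if not blocks or any(len(row) != k for row in blocks):
        raise ValueError("each block must hold exactly three values")
    n = len(blocks)
    sums = [0.0] * k
    for row in blocks:
        for j, r in enumerate(_average_ranks(row)):
            sums[j] += r
    q = 12.0 / (n * k * (k + 1)) * sum(s * s for s in sums) - 3.0 * n * (k + 1)
    q = max(q, 0.0)                   # guard against tiny negative rounding errors
    p = math.exp(-q / 2.0)            # survival function of chi-square with k - 1 = 2 df
    return q, p, [s / n for s in sums]
```

For instance, if the third algorithm is best and the first is worst in each of four blocks, the rank sums are 12, 8 and 4, giving $Q = 8$ and $p = e^{-4} \approx 0.018$.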

8. Conclusions and Future Work

This research addresses the IShOPwD, a variant of the IShOP that has become very relevant for buyers in the current electronic commerce scenario because online stores offer many benefits to customers who buy their products. This variant identifies the lowest cost of a shopping list, considering a discount associated with the total cost. In this article, two metaheuristic algorithms not previously applied to the IShOPwD are proposed to solve it. The first is the MAIShOPwD algorithm, which incorporates an improved local search that calculates the change in the objective value of the current solution with a time complexity of , thus avoiding excessive computing time, together with an adaptive adjustment of control parameters that tunes the crossover and mutation probabilities during execution. The second is the PSOIShOPwD algorithm, which, unlike the state-of-the-art algorithms, also uses a vector representation of the candidate solutions. It includes neighborhood diversification to avoid local stagnation and incorporates an adaptive adjustment of control parameters that directly benefits the personal and global learning parameters, allowing better positioning of the particles in the search space. The proposed algorithms were validated against the state-of-the-art BB using the non-parametric Wilcoxon and Friedman tests. According to the Wilcoxon test, the MAIShOPwD algorithm achieves better solution quality than BB, although BB is better in terms of efficiency, while the PSOIShOPwD algorithm is better than BB in terms of both quality and efficiency. The same test shows that the two proposed algorithms have similar performance.
The Friedman test indicates that the two proposed algorithms exhibit superior performance in both quality and efficiency compared with the state-of-the-art algorithm.

Finally, future work on the IShOPwD could incorporate all the control parameters used by the algorithms into the adaptive adjustment, allow the purchase of more than one unit of the same product, and consider other types of discounts, such as coupons and lightning offers.