3.1. Debugging of the Robotic Arm

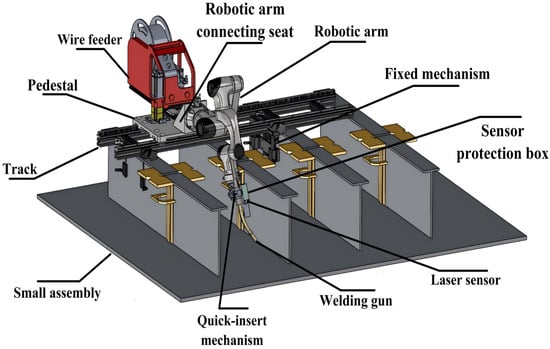

Before commissioning, the movement direction of the rail’s external axis must be defined as the Z direction of the world coordinate system. The robotic arm, wire feeder, tool flange, quick-plug mechanism, welding torch, and laser sensor are then installed on the bearing mechanism. After mechanical installation is completed, TCP calibration and laser calibration of the robotic arm are performed. This study adopts the hand-eye calibration method and recommends fixing a unified reference posture before calibration to prevent precision problems and robotic arm faults such as lock-up. Calibration starts from the flange, which is the end part of the robotic arm. The coordinate system relationships follow the Cartesian right-hand rule, as shown in Figure 17. During calibration, the coordinate system coincides with the earth coordinate system at the base of the robotic arm: its origin is placed at the center of the robotic arm base, and its orientation matches that of the robotic arm’s world coordinate system.

The upper computer processes the hand-eye coordinate system relationship using the calibration algorithm and the intrinsic and extrinsic parameter matrices of the laser sensor. It converts the relationship between the origin of the robotic arm’s Cartesian coordinate system and the robotic arm flange into the spatial relationship between the origin of the robotic arm base’s Cartesian coordinate system and the sensor’s laser scanning point, as shown in Figure 17. The calibration process requires completing the transformations among the four coordinate systems B, T, C, and Cl to obtain the conversion between two-dimensional pixel coordinates and the spatial coordinate system. The visual recognition results are then transferred to the robotic arm’s coordinate system, thereby achieving precise motion control and visual guidance. In Figure 17, B represents the robotic arm base coordinate system, T represents the TCP coordinate system, C represents the laser sensor coordinate system, and Cl represents the calibration object coordinate system.

For the coordinates of the same point p in any two Cartesian coordinate systems M₁ and M₂ in three-dimensional space, the transformation matrix H in Equation (1) is a 4 × 4 matrix that combines a rotation R and a translation T.

In Equation (1), the 3 × 3 submatrix R is the rotation matrix and T is the translation vector. The coordinates of point p in M₁ are written in homogeneous form and multiplied by H, thereby obtaining the coordinates of point p in M₂.
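As a minimal sketch of this idea, the following Python snippet assembles a 4 × 4 homogeneous matrix from a rotation and a translation and applies it to a point. The function names `make_transform` and `apply_transform` and the numeric values are illustrative assumptions, not taken from the paper.

```python
import math

def make_transform(R, t):
    """Assemble a 4x4 homogeneous matrix from a 3x3 rotation R and a translation t."""
    return [list(R[i]) + [t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

def apply_transform(H, p):
    """Map a 3D point p through H using homogeneous coordinates (x, y, z, 1)."""
    ph = [p[0], p[1], p[2], 1.0]
    out = [sum(H[i][k] * ph[k] for k in range(4)) for i in range(4)]
    return out[:3]

# Illustrative values: rotate 90 degrees about Z, then shift by (1, 2, 3).
theta = math.pi / 2
R = [[math.cos(theta), -math.sin(theta), 0.0],
     [math.sin(theta),  math.cos(theta), 0.0],
     [0.0, 0.0, 1.0]]
H = make_transform(R, [1.0, 2.0, 3.0])

# The point (1, 0, 0) rotates onto the Y axis and is then translated.
p2 = apply_transform(H, [1.0, 0.0, 0.0])
```

Packing rotation and translation into one matrix lets a chain of frame changes be written as a plain matrix product, which is what the hand-eye derivation that follows relies on.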

The following coordinate system transformation relationships are expressed as Euclidean transformation matrices: BHCl represents the transformation from the base coordinate system B to the calibration object coordinate system Cl. CHT represents the transformation from the laser sensor coordinate system C to the TCP coordinate system T. This is the “eye-in-hand” relationship; regardless of how many movements occur, the positional relationship between the robotic arm base and the calibration board remains unchanged, and the positional relationship between the laser sensor and the TCP remains unchanged—that is, BHCl and CHT are constants. CHCl represents the transformation from the laser sensor coordinate system C to the calibration object coordinate system Cl, obtained through laser sensor calibration. BHT represents the transformation from the base coordinate system B to the TCP coordinate system T, derived from the robotic arm calibration system. When the robotic arm moves from position 1 to position 2, the following transformation relationships hold:

Based on Equations (3)–(5), we obtain (6):

Similarly, after the robotic arm moves to position 2, we obtain (7):

Combining and rearranging Equations (6) and (7), we derive (8):

If we let , we deduce (9):
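The equations themselves are not reproduced in this text, so the following is only a sketch of the classical eye-in-hand argument, which leads to a relation of the form AX = XB. It assumes that X denotes the fixed sensor-to-TCP transform, that A is built from the two robot poses, and that B is built from the two sensor observations. All numeric values and helper names are invented for the check, and the direction conventions may differ from those of the paper.

```python
import math

def matmul(A, B):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def rigid_inverse(H):
    """Invert a rigid transform: rotation R^T, translation -R^T t."""
    Rt = [[H[j][i] for j in range(3)] for i in range(3)]
    ti = [-sum(Rt[i][k] * H[k][3] for k in range(3)) for i in range(3)]
    return [Rt[i] + [ti[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

def transform(axis, angle, t):
    """Rigid transform with a rotation about 'x' or 'z' and a translation t."""
    c, s = math.cos(angle), math.sin(angle)
    if axis == "z":
        R = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    else:
        R = [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]
    return [R[i] + [t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

# Hypothetical ground truth (values invented for this check).
X = transform("z", 0.3, [10.0, -5.0, 80.0])            # sensor pose in the TCP frame
H_base_cal = transform("x", 0.1, [600.0, 50.0, 0.0])   # calibration object in the base frame
H_base_tcp = [transform("z", 0.5, [400.0, 0.0, 300.0]),
              transform("x", -0.4, [450.0, 60.0, 280.0])]

# Sensor-to-calibration-object transform implied at each robot position,
# from H_base_cal = H_base_tcp[i] * X * H_sensor_cal[i].
H_sensor_cal = [matmul(rigid_inverse(X), matmul(rigid_inverse(Hbt), H_base_cal))
                for Hbt in H_base_tcp]

# Relative motions between position 1 and position 2.
A = matmul(rigid_inverse(H_base_tcp[1]), H_base_tcp[0])
B = matmul(H_sensor_cal[1], rigid_inverse(H_sensor_cal[0]))

# Both sides should agree up to floating-point round-off.
AX, XB = matmul(A, X), matmul(X, B)
max_diff = max(abs(AX[i][j] - XB[i][j]) for i in range(4) for j in range(4))
```

In a real calibration X is the unknown: A and B are measured for several pose pairs and X is solved for, typically in a least-squares sense.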

To solve a problem of the form of Equation (9), the transformation from the base coordinate system to the TCP coordinate system must be completed first. The rotation matrix is calculated from the angles of the robotic arm. This paper adopts the ZYX Euler angle form of the rotation matrix to solve BHT. The rotation matrices for the three angles are given in Equation (10), where a, b, and c correspond to the yaw, pitch, and roll angles of the ZYX Euler angles, respectively.

Following the sequence of ZYX Euler angles to complete the rotations, multiplying the three matrices yields (11), which expands into (12):
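For reference, the textbook ZYX Euler form is written out below. It assumes that a is the yaw about Z, b the pitch about Y, and c the roll about X, with the rotations composed as R = R_z(a) R_y(b) R_x(c). This is the standard convention and may differ in sign or ordering from the paper's own Equations (10)–(12), which are not reproduced in this text.

$$
R_z(a)=\begin{bmatrix}\cos a & -\sin a & 0\\ \sin a & \cos a & 0\\ 0 & 0 & 1\end{bmatrix},\quad
R_y(b)=\begin{bmatrix}\cos b & 0 & \sin b\\ 0 & 1 & 0\\ -\sin b & 0 & \cos b\end{bmatrix},\quad
R_x(c)=\begin{bmatrix}1 & 0 & 0\\ 0 & \cos c & -\sin c\\ 0 & \sin c & \cos c\end{bmatrix}
$$

$$
R = R_z(a)\,R_y(b)\,R_x(c)=
\begin{bmatrix}
\cos a\cos b & \cos a\sin b\sin c-\sin a\cos c & \cos a\sin b\cos c+\sin a\sin c\\
\sin a\cos b & \sin a\sin b\sin c+\cos a\cos c & \sin a\sin b\cos c-\cos a\sin c\\
-\sin b & \cos b\sin c & \cos b\cos c
\end{bmatrix}
$$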

A schematic diagram of the ZYX Euler angle rotation is shown in Figure 18.

The homogeneous matrix is calculated based on :

In Equation (13), () are the origin of the base coordinate system, () are the origin of the TCP, and () record the values of T, denoted as , which will be used for the calculation of the orthogonal vectors of . In the actual calibration, the eight-point calibration method shown in Figure 19 is adopted for the TCP. The eight points are non-collinear and uniformly distributed within the working space of the robotic arm. The calibration postures must be set manually, leaving a margin from the rotational limits so that the robotic arm neither locks up nor enters a singularity. With the robotic arm operated in the world coordinate system, the position and posture of the TCP relative to the calibration object are collected and recorded point by point. This calibration algorithm uses the J5 axis as the main control axis. In Figure 19a, the TCP of the robotic arm must be perpendicular to the calibration object when the point is taken. In Figure 19b–h, A and B denote the rotational directions about the X and Y axes of the robotic arm’s world coordinate system, and each new posture should differ from the previous one as much as possible. After point acquisition is completed, the errors are calculated. Once they are within the allowable range, the robotic arm is operated in the world coordinate system so that its TCP is perpendicular to the calibration object, and it is then rotated about the A, B, and C axes to check the degree of alignment. Upon passing this verification, the TCP calibration is complete. A smaller calibration error yields higher working precision and efficiency, provided that the robotic arm itself is free of errors.
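The step of turning a recorded pose into a homogeneous matrix, as in Equation (13), can be sketched as follows. This is a minimal illustration, assuming the pose is given as a position (x, y, z) plus ZYX Euler angles (a, b, c) in radians and that the rotation is composed as R_z(a) R_y(b) R_x(c). The function names are hypothetical, and many robot controllers report angles in degrees, so a conversion may be needed first.

```python
import math

def rot_zyx(a, b, c):
    """Rotation matrix R = Rz(a) * Ry(b) * Rx(c) for yaw a, pitch b, roll c (radians)."""
    ca, sa = math.cos(a), math.sin(a)
    cb, sb = math.cos(b), math.sin(b)
    cc, sc = math.cos(c), math.sin(c)
    return [
        [ca * cb, ca * sb * sc - sa * cc, ca * sb * cc + sa * sc],
        [sa * cb, sa * sb * sc + ca * cc, sa * sb * cc - ca * sc],
        [-sb,     cb * sc,                cb * cc],
    ]

def pose_to_matrix(x, y, z, a, b, c):
    """Homogeneous base-to-TCP matrix from a recorded pose (x, y, z, a, b, c)."""
    R = rot_zyx(a, b, c)
    t = [x, y, z]
    return [R[i] + [t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

# Illustrative pose: TCP at (100, 0, 50) mm, rotated 90 degrees about Z only.
H = pose_to_matrix(100.0, 0.0, 50.0, math.radians(90.0), 0.0, 0.0)
```

Each of the recorded calibration postures would be converted this way before being passed to the calibration solver.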

In automatic welding technology, visual sensors are an important component driving the development of intelligent welding [19]. As the “eyes” of robotic arms, visual sensors are also a necessary means of assisting operations [20,21]. Next, we will complete the solution and calibration of the transformation relationship of the laser sensor to achieve precise perception of position and orientation, ensuring the accuracy and stability of scanning recognition. First, we introduce the laser sensor model and use its intrinsic and extrinsic parameter matrices for solving. Both map points from the 3D world to the 2D image plane, i.e., conversion between dimensions. The intrinsic parameters , related to the internal characteristics of the laser sensor, can be represented as follows (14):

The extrinsic parameters describe the position and orientation of the laser sensor in the world coordinate system, allowing for the transformation from world coordinates to laser sensor coordinates. That is, the extrinsic parameters are . Based on the derivation of the relationship between intrinsic and extrinsic parameters, after performing homogenization operations on the point from the 2D image plane and its spatial coordinates , we obtain the model of the laser sensor, represented as (15).

In (15), refers to degrees of freedom. During the process of moving from 3D space (laser sensor coordinates) to 2D space (captured image coordinates), one degree of freedom is reduced, particularly depth. The intrinsic parameters focal length () and principal point are provided by the laser sensor. Subsequently, combining the above matrices to solve for the extrinsic parameters, this paper obtains by utilizing the projection information of five different calibration points. The calibration transformation relationship is shown in Figure 20.
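To illustrate how intrinsic and extrinsic parameters combine and why depth is lost, the sketch below uses the conventional pinhole projection model. It assumes an intrinsic matrix K with focal lengths `fx`, `fy` and principal point `u0`, `v0`, and extrinsics given as a rotation R and translation t. These names and all numeric values are hypothetical, and the paper's Equations (14) and (15) may be parameterized differently.

```python
def project(K, R, t, Pw):
    """Project a 3D world point Pw to pixel coordinates (u, v) and depth scale s."""
    # Extrinsics: world coordinates -> sensor coordinates.
    Pc = [sum(R[i][k] * Pw[k] for k in range(3)) + t[i] for i in range(3)]
    # Intrinsics: sensor coordinates -> homogeneous pixel coordinates.
    uvw = [sum(K[i][k] * Pc[k] for k in range(3)) for i in range(3)]
    s = uvw[2]  # scale factor; dividing by it discards the depth information
    return uvw[0] / s, uvw[1] / s, s

# Hypothetical intrinsics: focal lengths and principal point in pixels.
fx, fy, u0, v0 = 800.0, 800.0, 320.0, 240.0
K = [[fx, 0.0, u0], [0.0, fy, v0], [0.0, 0.0, 1.0]]

# Identity extrinsics: the sensor frame coincides with the world frame.
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 0.0]

u, v, s = project(K, R, t, [0.1, -0.05, 2.0])
```

Every point along the same viewing ray maps to the same (u, v), which is why additional calibration points are needed to recover the extrinsic parameters.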

Using the laser five-point calibration method, a calibration starting center point and four calibration points at different positions with coordinates on the target plane are selected, along with the pose parameters of the base coordinate system, with coordinates and angles , where . Then the offset matrix is prepared.

Calculation of is performed five times according to Equation (13) to obtain . Based on and , the rotation and translation information is extracted from their product. Then, according to the required orthogonality, the three orthogonal vectors are computed (17).

In (17), represents the pseudoinverse (Moore-Penrose inverse) of . Based on the properties of rotation matrices, we determine that the cross products of the row vectors conform to the right-hand rule, thereby obtaining the relationships among V1, V2, and V3 in (18). Through precise calculations using five calibration points, we derive the optimal approximate solution. In (19), and are variables used to record the results. Subsequently, the variables are normalized.

The calibration result matrix is constructed, which is the required .
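The orthogonalization and normalization step can be illustrated with a simple cross-product construction. This is not the paper's exact procedure from Equations (17)–(20), which uses a pseudoinverse over five calibration points. It only sketches how three mutually orthogonal unit vectors that obey the right-hand rule can be recovered from two noisy direction estimates. The function names and input values are hypothetical.

```python
import math

def cross(u, v):
    """Cross product of two 3-vectors."""
    return [u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]]

def normalize(v):
    """Scale a 3-vector to unit length."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def orthonormal_frame(v1_est, v2_est):
    """Build a right-handed orthonormal triple from two approximate axes."""
    v1 = normalize(v1_est)
    v3 = normalize(cross(v1, v2_est))  # perpendicular to both estimates
    v2 = cross(v3, v1)                 # re-derived so that v1 x v2 = v3 exactly
    return v1, v2, v3

# Slightly non-orthogonal estimates, such as a least-squares fit might return.
V1, V2, V3 = orthonormal_frame([1.0, 0.02, 0.0], [0.03, 1.0, 0.01])
```

Enforcing orthonormality in this way keeps the resulting matrix a valid rotation even when the fitted vectors are affected by measurement noise.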

For any measurement point with coordinates , it is homogenized to obtain Equation (21).

The sensor repeatedly emits and scans laser beams, measures the round-trip time difference and angle of each beam, and integrates the data into a more complete two-dimensional data image. Using Equation (21), any two-dimensional coordinate can be converted into a three-dimensional coordinate, facilitating further point cloud construction.
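A minimal sketch of such a 2D-to-3D conversion is given below. It assumes that the sensor reports each profile point as (y, z) in its own laser plane (taken here to be the sensor’s Y–Z plane, with x = 0) and that both the base-to-TCP matrix and the calibrated TCP-to-sensor matrix are available as 4 × 4 homogeneous matrices. The function names, the plane convention, and all values are assumptions for illustration, not the paper's Equation (21).

```python
def matvec(H, v):
    """Multiply a 4x4 matrix by a homogeneous 4-vector."""
    return [sum(H[i][k] * v[k] for k in range(4)) for i in range(4)]

def profile_point_to_base(H_base_tcp, H_tcp_sensor, y, z):
    """Convert a 2D profile point (y, z) in the laser plane to base coordinates."""
    p_sensor = [0.0, y, z, 1.0]              # homogenize, assuming the plane x = 0
    p_tcp = matvec(H_tcp_sensor, p_sensor)   # sensor frame -> TCP frame
    p_base = matvec(H_base_tcp, p_tcp)       # TCP frame -> base frame
    return p_base[:3]

def translation(tx, ty, tz):
    """Pure-translation homogeneous matrix (used for the illustrative values)."""
    return [[1.0, 0.0, 0.0, tx], [0.0, 1.0, 0.0, ty],
            [0.0, 0.0, 1.0, tz], [0.0, 0.0, 0.0, 1.0]]

# Illustrative values in mm: sensor mounted 100 mm along the TCP Z axis,
# TCP located at (500, 0, 300) in the base frame, no rotation anywhere.
point = profile_point_to_base(translation(500.0, 0.0, 300.0),
                              translation(0.0, 0.0, 100.0),
                              5.0, 20.0)
```

Repeating this for every point of every scan line, with the base-to-TCP matrix updated as the arm moves, yields the point cloud in the base coordinate system.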

In practical operation, the calibration object is placed under the robotic arm’s world coordinate system within the working conditions. A central point and marking points in the four quadrants at different positions within the sensor’s visual range are specified and extracted. As shown in Figure 21a, when adjusting the central point, the laser locator is activated to initiate origin position calibration. The laser beam is superimposed with the positioning beam along the Z and Y axes to obtain the central positioning point. When selecting the four quadrant marking points, refer to the visual range in Figure 21b. The sensor’s field of view divisions and values are as follows: AB is the sensor’s blind zone, BC is the sensor’s positive range, CD is the sensor’s negative range, BD is the sensor’s effective range, C is the sensor’s zero point, W1 is the near measurement end, and W is the far measurement end. All dimensions are in millimeters (mm).

During the process of selecting calibration point data, the four quadrant points should be chosen at optimal distances within the Z/Y axis data intervals specified by the laser’s visual range. Based on the above processing rules, the calibration object is sequentially scanned at the four marking points. The calibration results are then subjected to precision estimation and comprehensive evaluation, and the data are recorded. The calibration result is shown in Figure 22.


Yang Cai www.mdpi.com