1. Introduction

Refractory high-entropy alloys (RHEAs) built from high-melting-point elements, such as Mo, Nb, Hf, Ta, Cr, W, and Zr, are promising replacements for the nickel-based and cobalt-based high-temperature alloys used in shafts, turbine disks, and turbine blades of aircraft engines and of marine and gas turbines [1,2,3]. For example, NbMoTaW, NbMoTaWV [4], and TaNbHfZrTi [5] possess relatively high strengths above the limiting temperature (1473 K) of conventional nickel-based high-temperature alloys.

Nonetheless, the development of RHEAs has been limited by their high density and poor room-temperature toughness. Adding low-density elements (e.g., Ti, Al, Mg, and Li) and adjusting the ratios of the constituent elements have been suggested to alleviate these problems [6,7,8]. Accordingly, lightweight RHEAs with densities lower than 7 g/cm³, such as AlNbTiV [8], AlxNbTiVZr [9], and AlNbTiZr [10], have recently been investigated with the aim of combining excellent strength in high-temperature environments with superior room-temperature toughness.

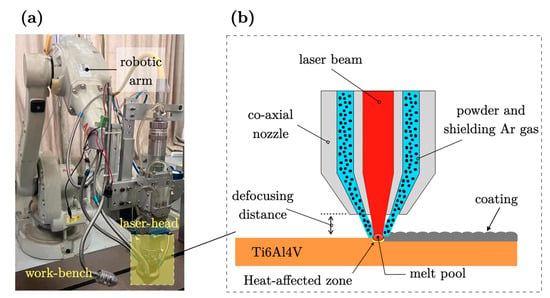

Laser cladding (LC), an advanced manufacturing technology, offers strong metallurgical bonding, a concentrated energy density, high processing precision, and a wide choice of elemental materials [11,12]. Lightweight RHEA coatings produced by LC can therefore reduce the high cost of bulk alloys, overcome size and thickness limitations, and allow many elemental materials to be selected [13,14]. However, RHEA coatings prepared by LC often suffer from high dilution and high porosity (including unmelted particles), which are attributed to the high melting points of the constituent elements and the large differences between those melting points. On the one hand, high dilution results from overmixing of the powder and the matrix, which can degrade coating properties such as microhardness, abrasion resistance, and corrosion resistance [15]. On the other hand, because the melting points of the constituent elements differ, elements with high melting points may remain unmelted, while a high energy input can cause the loss of low-melting-point elements from the melt pool [16]. In both cases, the resulting mechanical properties deteriorate. A current challenge is therefore to optimize the LC process parameters, specifically laser power (LP), scanning speed (SS), and powder feeding speed (PF), to overcome the high dilution and porosity observed in lightweight RHEA coatings [16,17].

Process-parameter optimization usually begins with a design of experiments (DoE) laid out as a matrix over the design space, with LP, SS, and PF as the primary variables. The design space is then refined through iterative experimentation, including visual and macroscopic inspection as well as mechanical testing, and empirical-regression models are fitted to the results. Such statistical methods, which relate input parameters to output parameters and support the construction of a comprehensive process map, have been applied successfully by the authors of this work [18,19] and by others [16,20]. However, they adapt poorly to complex, non-linear relationships within intricate datasets.
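As one concrete way to lay out such a design matrix, the sketch below builds a nine-run, three-level orthogonal array for LP, SS, and PF. The level values and the names `LEVELS`, `orthogonal_l9`, `is_orthogonal`, and `build_design` are illustrative assumptions, not the settings used in the cited studies.

```python
from collections import Counter
from itertools import combinations

# Hypothetical factor levels for illustration only (not taken from any study).
LEVELS = {
    "LP_kW": (1.8, 2.2, 2.6),
    "SS_mm_s": (2.0, 3.0, 4.0),
    "PF_g_min": (1.0, 1.5, 2.0),
}


def orthogonal_l9():
    """Three columns of an L9 array as level indices 0..2.

    The third column is (a + b) mod 3, which keeps every pair of
    columns balanced.
    """
    return [(a, b, (a + b) % 3) for a in range(3) for b in range(3)]


def is_orthogonal(array):
    """True if every pair of columns holds each level combination equally often."""
    n_cols = len(array[0])
    for i, j in combinations(range(n_cols), 2):
        counts = Counter((row[i], row[j]) for row in array)
        levels_i = {row[i] for row in array}
        levels_j = {row[j] for row in array}
        all_present = len(counts) == len(levels_i) * len(levels_j)
        balanced = len(set(counts.values())) == 1
        if not (all_present and balanced):
            return False
    return True


def build_design(levels):
    """Map the level indices of the array onto physical parameter values."""
    names = list(levels)
    return [
        {name: levels[name][idx] for name, idx in zip(names, row)}
        for row in orthogonal_l9()
    ]


design = build_design(LEVELS)
for run, settings in enumerate(design, start=1):
    print(run, settings)
```

With three factors at three levels, this layout needs 9 runs instead of the 27 of a full factorial design, at the cost of not resolving all interaction effects.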

Machine learning (ML) methods offer a versatile alternative: they learn patterns from data and make predictions without being explicitly programmed [21,22]. They could complement or replace traditional empirical methods for optimizing process parameters, particularly where the relationships are too complex to capture empirically. Whereas empirical-regression models depend on predefined mathematical relationships, ML algorithms can identify hidden patterns and non-linear correlations within the data, potentially giving more accurate predictions. ML techniques, including supervised and unsupervised learning [23,24], also allow complex interactions among many variables to be explored simultaneously. Previous studies, such as those by Masayuki et al. [25], Xu et al. [26], and He et al. [27], have successfully applied ML algorithms, specifically Random Forest (RF), AdaBoost, Support Vector Machines (SVM), and a hybrid Genetic Algorithm and Ant Colony Optimization approach (GA-ACO-RFR), to predict and optimize various material and process-related parameters. These successes suggest that ML algorithms could help address the high dilution and porosity of lightweight RHEA coatings produced by LC.

Therefore, the primary objective of this study is to formulate predictive models for the porosity, dilution, and microhardness of laser-cladded Ti-Al-Nb-Zr high-entropy alloy coatings, with the aim of achieving outstanding mechanical properties. First, an orthogonal experimental design is used to generate suitable data for the subsequent ML algorithms. Analysis of variance (ANOVA) is then used to quantify the contribution of the processing parameters (LP, SS, PF) to the porosity, dilution, and microhardness of the coatings. Next, the Non-dominated Sorting Genetic Algorithm II (NSGA-II) is employed to obtain the processing parameters that give minimum porosity, suitable dilution, and maximum microhardness. Finally, Random Forest (RF), Gradient Boosting Decision Tree (GBDT), and Genetic Algorithm-enhanced Gradient Boosting Decision Tree (GA-GBDT) models are compared to select the one with the best prediction accuracy. The proposed approach integrates statistical analysis with advanced ML techniques to improve understanding of how LP, SS, and PF can be optimized for better RHEA coating performance in industrial applications, thereby advancing laser cladding technology for lightweight RHEA coatings.
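To make the ANOVA step more tangible, the following minimal sketch computes the percent contribution of each factor from a balanced three-level orthogonal design, using only main-effect sums of squares. It is an assumption about how such contributions are typically obtained, not the authors' exact procedure: interaction terms are lumped into the residual, and the response values, `FACTORS`, `DESIGN`, and `percent_contribution` are hypothetical.

```python
FACTORS = ("LP", "SS", "PF")

# Nine-run orthogonal layout as level indices 0..2 (third column = (a + b) mod 3).
DESIGN = [(a, b, (a + b) % 3) for a in range(3) for b in range(3)]


def percent_contribution(design, response):
    """Share of the total sum of squares explained by each factor, in percent."""
    n = len(response)
    grand_mean = sum(response) / n
    ss_total = sum((y - grand_mean) ** 2 for y in response)
    if ss_total == 0:
        raise ValueError("response has no variation")

    shares = {}
    for col, name in enumerate(FACTORS):
        # Group the responses by the level this factor takes in each run.
        groups = {}
        for row, y in zip(design, response):
            groups.setdefault(row[col], []).append(y)
        ss_factor = sum(
            len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups.values()
        )
        shares[name] = 100.0 * ss_factor / ss_total

    # Whatever the main effects do not explain (error and interactions).
    shares["residual"] = 100.0 - sum(shares.values())
    return shares


# Made-up porosity values (%), one per run, for illustration only.
porosity = [4.1, 3.6, 3.9, 3.0, 2.7, 3.2, 2.2, 2.5, 2.0]
for name, share in percent_contribution(DESIGN, porosity).items():
    print(f"{name}: {share:.1f}%")
```

Because the design is orthogonal, the main-effect sums of squares add up without overlap, so the shares and the residual total 100%.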

4. Conclusions

In this study, a machine learning-based predictive model was developed to assess the porosity, dilution, and microhardness of Al0.5Ti2NbZr coatings prepared via laser cladding. Initially, ANOVA was used to analyze how the processing parameters (LP, SS, and PF) affect these coating properties. Subsequently, the NSGA-II algorithm optimized these parameters to achieve coatings with superior mechanical properties, aiming for minimal porosity, maximum microhardness, and a maintained dilution rate of 25%. The ANOVA results revealed direct effects of LP, SS, and PF on porosity, dilution, and microhardness, with significant interactions among these parameters. LP contributed 61.42%, SS contributed 47.05%, and PF contributed 49.69% to the variations in these properties, respectively. From the optimal solutions on the Pareto front identified by NSGA-II, we selected the following processing parameters: LP = 2.384 kW, SS = 2.52 mm/s, and PF = 1.10 g/min.
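The notion of a Pareto front used here can be illustrated with a short non-dominated filter. This is only the dominance test at the core of NSGA-II, not the full algorithm (which also involves crowding distance, selection, crossover, and mutation). The candidate values are invented, and treating "suitable dilution" as the absolute deviation from the 25% target is an assumption made for this sketch.

```python
def objectives(porosity, dilution, hardness, target_dilution=25.0):
    """Turn the three goals into a tuple where every entry is minimized."""
    return (porosity, abs(dilution - target_dilution), -hardness)


def dominates(a, b):
    """True if a is no worse than b in every objective and better in at least one."""
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def pareto_front(candidates):
    """Keep the candidates that no other candidate dominates."""
    scored = [(c, objectives(*c)) for c in candidates]
    return [
        c
        for c, obj in scored
        if not any(dominates(other, obj) for _, other in scored if other is not obj)
    ]


# Made-up (porosity %, dilution %, microhardness HV) triples for illustration.
candidates = [
    (3.0, 25.0, 550.0),
    (4.0, 30.0, 540.0),  # worse than the first candidate in all three goals
    (2.5, 35.0, 560.0),  # lower porosity and higher hardness, but off-target dilution
]
front = pareto_front(candidates)
print(front)
```

Every point on the front represents a different trade-off, so a final parameter set still has to be picked from it according to the priorities of the application.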

To enhance the robustness and effectiveness of the optimization process, AI-based predictive models were integrated into the framework. These models provide a powerful tool for forecasting the effects of varying processing parameters and for capturing complex, non-linear relationships between the parameters and the coating properties. Building on the initial analysis presented above, we therefore implemented Random Forest (RF), Gradient Boosting Decision Tree (GBDT), and Genetic Algorithm-enhanced Gradient Boosting Decision Tree (GA-GBDT) models. The orthogonal dataset previously used for ANOVA was used to train and compare them. Comparison of RF, GBDT, and GA-GBDT against experimental data demonstrated the superior predictive capability of GA-GBDT. Incorporating the genetic optimization algorithm significantly enhanced the prediction accuracy of GBDT, yielding R² values of 0.88, 0.93, and 0.94 for porosity, dilution, and microhardness, respectively, and outperforming the RF and standard GBDT models.
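The idea of letting a genetic algorithm tune a GBDT can be sketched as a search over hyperparameters. The example below is a generic genetic algorithm with tournament selection, uniform crossover, Gaussian mutation, and elitism. The operators, the search ranges in `BOUNDS`, and the `toy_fitness` function are all assumptions for illustration, since the text does not specify them. In a real setting the fitness would be a cross-validated prediction error of a GBDT (for example scikit-learn's `GradientBoostingRegressor`) trained with the candidate hyperparameters.

```python
import random

# Hypothetical search ranges for three GBDT hyperparameters (not from the study).
BOUNDS = {
    "n_estimators": (50.0, 500.0),
    "learning_rate": (0.01, 0.3),
    "max_depth": (2.0, 8.0),
}


def random_individual(rng):
    return {k: rng.uniform(lo, hi) for k, (lo, hi) in BOUNDS.items()}


def crossover(a, b, rng):
    """Uniform crossover: each gene comes from either parent with equal chance."""
    return {k: (a[k] if rng.random() < 0.5 else b[k]) for k in BOUNDS}


def mutate(ind, rng, rate=0.2):
    """Gaussian mutation, clipped so that genes stay inside their bounds."""
    child = dict(ind)
    for k, (lo, hi) in BOUNDS.items():
        if rng.random() < rate:
            step = rng.gauss(0.0, 0.1 * (hi - lo))
            child[k] = min(hi, max(lo, child[k] + step))
    return child


def tournament(pop, scores, rng, k=3):
    """Return the best of k randomly drawn individuals (lower score is better)."""
    picks = rng.sample(range(len(pop)), k)
    return pop[min(picks, key=lambda i: scores[i])]


def genetic_search(fitness, pop_size=20, generations=30, seed=0):
    rng = random.Random(seed)
    pop = [random_individual(rng) for _ in range(pop_size)]
    for _ in range(generations):
        scores = [fitness(ind) for ind in pop]
        elite = pop[min(range(pop_size), key=lambda i: scores[i])]
        children = [elite]  # elitism: the best individual always survives
        while len(children) < pop_size:
            a = tournament(pop, scores, rng)
            b = tournament(pop, scores, rng)
            children.append(mutate(crossover(a, b, rng), rng))
        pop = children
    scores = [fitness(ind) for ind in pop]
    best = min(range(pop_size), key=lambda i: scores[i])
    return pop[best], scores[best]


def toy_fitness(ind):
    """Stand-in for a cross-validated GBDT error, with a made-up optimum."""
    return (
        ((ind["n_estimators"] - 200.0) / 450.0) ** 2
        + ((ind["learning_rate"] - 0.1) / 0.29) ** 2
        + ((ind["max_depth"] - 4.0) / 6.0) ** 2
    )


best_params, best_score = genetic_search(toy_fitness)
print(best_params, best_score)
```

Integer-valued hyperparameters such as `n_estimators` and `max_depth` would need to be rounded before being passed to an actual model.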

Applying the optimized processing parameters in the GA-GBDT algorithm, we predicted the porosity (2.76%), dilution (45.27%), and microhardness (553.32 HV0.3) of the coatings, with relative errors (δ) of 13.11%, 3.35%, and 1.74%, respectively, compared with the experimental data.
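For reference, the two accuracy measures quoted in these conclusions can be computed as in the sketch below. It assumes the usual definitions, with the relative error taken with respect to the measured value and R² as the coefficient of determination. The text does not state which definitions were used, and the numbers in the usage lines are invented.

```python
def relative_error(predicted, measured):
    """Relative error in percent: |predicted - measured| / |measured| * 100."""
    if measured == 0:
        raise ValueError("measured value must be non-zero")
    return abs(predicted - measured) / abs(measured) * 100.0


def r_squared(measured, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(measured) / len(measured)
    ss_res = sum((m - p) ** 2 for m, p in zip(measured, predicted))
    ss_tot = sum((m - mean) ** 2 for m in measured)
    if ss_tot == 0:
        raise ValueError("measured values have no variation")
    return 1.0 - ss_res / ss_tot


# Made-up numbers for illustration only.
print(relative_error(predicted=105.0, measured=100.0))
print(r_squared(measured=[1.0, 2.0, 3.0], predicted=[1.1, 1.9, 3.2]))
```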

Overall, this study employed a novel approach to enhance the understanding and optimization of laser-cladded coatings. By sequentially integrating ANOVA for rigorous statistical analysis, NSGA-II for precise multi-objective optimization, and advanced machine learning models for accurate predictive modeling, we demonstrated a robust methodology for validating factors, optimizing processing parameters, and predicting coating performance.