1. Weed Management in Agriculture

In 2022, agriculture, food, and related industries contributed more than USD 1.4 trillion or 5.5% of the U.S. gross domestic product (GDP), with USD 223 billion as a direct result of farmers’ production [1]. Crop production alone contributed USD 278 billion in cash receipts in 2022 [2].

One of the main challenges in row crop production is weed control. Weed infestation accounts for approximately 39% of the total soybean yield losses caused by biotic pests in the Midwestern United States [3]. However, the losses are not just limited to yield and extend to the increased cost of production through more herbicide applications, decreased harvest efficiency, and the increased contamination of harvested grains [4]. Soltani et al. (2016) [5] estimated that the absence of weed control practices could lead to a 50% reduction in corn yield, translating to a potential loss of USD 26.7 billion in revenue for producers in the U.S. and Canada. Consequently, addressing the impacts of weeds and crop competition is paramount in agricultural management.

For several decades, growers have primarily relied on herbicides for weed management. As a result, herbicides account for the largest market share of the crop protection industry, worth USD 30.2 billion in 2024 and expected to reach USD 43.8 billion by 2030 [6]. The development of herbicide-resistant (HR) crops combined with the sole reliance on chemical weed control has increased the risk of developing HR weeds [7]. From 1990 to 2020, an average of thirteen new HR weed cases arose annually [8]. Hence, the adoption of integrated weed management programs that combine both chemical and non-chemical weed control practices is necessary to achieve more sustainable crop production systems.

2. Cover Crops in the U.S.

The use of cover crops is an example of a non-chemical weed control strategy that is becoming more widespread among U.S. growers. In Indiana, the cropland planted to cover crops grew roughly 8.5-fold, from 74.5 to 633.6 thousand hectares, between 2011 and 2023 [9]. However, while cover crop adoption has increased over the past several years, only 7.7% of agricultural hectares in Indiana were planted to cover crops in 2023 [10]. The perception of cover crops as a substantial financial investment has been identified as contributing to their limited adoption in the United States [11]. Financial incentive programs have gained prominence to counteract this barrier, offering farmers remuneration for integrating cover crops into their practices. These cover crop incentive programs are administered by diverse entities, including federal, state, and county agencies and various private and nongovernmental organizations [12].

Cover crops are usually sown in the fall following the harvest of cash crops or, in some cases, are interseeded during the summer cash crop growth. Winter-hardy cover crop species germinate and start vegetative growth in the fall, go dormant in the winter months, and resume growth in the spring. In contrast, species that are not winter-hardy, such as oats (Avena sativa L.), rapeseed (Brassica napus L.), and forage radish (Raphanus sativus L. var. longipinnatus), grow for a few months and are then killed by freezing temperatures. Winter-hardy cover crop species can produce large amounts of biomass, which is ideal for preventing soil erosion and promoting nitrogen scavenging, carbon sequestration, and weed suppression [11]. However, in regions such as the U.S. Midwest, winter-hardy cover crops that are terminated late can produce more than 10,000 kg ha−1 of biomass [13]. High biomass levels can delay soil warming [11] and deplete soil moisture [14], which may interfere with cash crop planting. Consequently, some growers might prefer winterkilled cover crops, which suppress winter annual weeds in the fall yet leave little residue on the soil the following spring [15]. These cover crops decompose rapidly with the freeze–thaw cycle, so the little residue that remains on the soil surface [15] does not suppress troublesome summer annual weeds.

Cover crops protect the soil through an extensive root system that reduces erosion caused by heavy rainfall events, snow thaw, and wind. Additionally, non-legume cover crops scavenge residual N from the soil, reducing the risks associated with N leaching into the groundwater [11,16,17,18]. Legume cover crops also fix N from the atmosphere [19] and, after termination, release N through residue mineralization. In no-till systems, all above-ground biomass produced remains on the soil surface after termination. The cover crop residue provides a physical barrier to light, creating unfavorable conditions for weed emergence and allowing for soil moisture conservation [20,21]. Cover crops may also be incorporated into the soil at termination. However, research has shown that incorporating cover crop residue reduces the benefits of soil protection (e.g., erosion control) and weed suppression during the cash crop growing season.

Cover crops have been associated with improved soil health [19]. The concept of soil health was defined by Doran and Parkin (1994) [22] as “The capacity of a soil to function within ecosystem boundaries to sustain biological productivity, maintain environmental quality, and promote plant and animal health”. Processes such as nutrient cycling, the decomposition of organic residues, and improvements in soil physical properties (e.g., water holding capacity, permeability, erodibility, the stability of soil aggregates) are heavily dependent on microbial activity [23,24]. In a meta-analysis that combined the results of 122 individual studies, McDaniel et al. (2014) [25] reported 8.5 and 12.8% increases in total carbon and nitrogen contents, respectively, as a result of cover crop inclusion in a rotational cropping system. These authors considered the greater belowground biomass of cover crops relative to cash crops to be the key element behind the increased carbon and nitrogen content. Roots produce and release labile carbon compounds such as sugars and amino acids into the soil, which are then used by microbes to promote microbial growth [26,27]. The above-ground biomass of cover crops also provides substantial amounts of C to the soil. The authors of [28] reported an average of 2814 kg C ha−1 yr−1 added to the soil when cereal rye was planted as a cover crop in a corn–cotton rotation. The conservation of cover crop residues after termination and their progressive degradation during the cash crop season provide compounds that are vital to sustain a soil’s microbiome. Enzymes released by soil microorganisms are responsible for organic matter decomposition and carbon, nitrogen, and phosphorus mineralization [29,30]. In addition, soil enzymes regulate nutrient cycles through biochemical, chemical, and physicochemical reactions [31] that result in the production of sugars and nutrients that are essential for microorganisms and plants.

The use of cover crops has been shown to increase enzymatic activity in comparison to soils under conventional cropping systems [29,32,33,34]. Bandick and Dick (1999) [29] observed 122, 41, 50, and 37% greater α-galactosidase, β-galactosidase, β-glucosidase, and urease activities, respectively, as a result of cereal rye use as a cover crop relative to winter fallow. According to the authors, the greater enzymatic activity in cover crop plots was a consequence of substantial carbon inputs to the soil by this cropping system. Similarly, Mendes et al. (1999) [34] reported an average of 46 and 35% greater β-glucosidase activity in red clover (Trifolium pratense L.) and triticale (x Triticosecale Wittmack) plots, respectively, in comparison with winter fallow. In a more recent study, Tyler et al. (2020) [35] investigated the impact of cereal rye and crimson clover (Trifolium incarnatum L.) used as cover crops on the activity of several enzymes (phosphatase, β-glucosidase, N-acetylglucosaminidase, and fluorescein diacetate [FDA] hydrolysis). These authors concluded that the higher enzyme activities observed in this 3-year study were a result of an enlarged microbial community and greater substrate availability caused by the use of cover crops.

Cover crop termination is the last cover crop management practice and can occur prior to cash crop planting, at planting, or afterwards. The choice of a termination method must take into consideration the growth stage of the plant, the objective of using the cover crop (e.g., weed suppression, nitrogen scavenging, etc.), environmental conditions, the subsequent cash crop, and the cropping system (organic or conventional). The four main methods used by producers to terminate cover crops are winterkill, herbicides, tillage, and mowing or roller crimping [36]. Chemical termination is the most common practice among producers [37]. However, growers must be very diligent when chemically terminating cover crops, as poor termination can result in negative impacts to cash crops such as delayed soil drying and warming in the spring and cover crop seed dispersal [37]. Deines et al. (2023) [38] examined the yield impacts of extensive cover cropping across the US Corn Belt, utilizing validated satellite data to analyze yield outcomes for over 90,000 fields. Using a causal forest analysis, the study estimated an average corn yield reduction of 5.5% in fields where cover crops had been used for three or more years, while soybean exhibited average yield losses of 3.5%. Thelen et al. (2004) [39] reported that cereal rye terminated after soybean planting resulted in up to 27% yield losses.

Mechanical termination reduces the environmental risks associated with herbicide use; however, it requires careful planning. For instance, the current recommendations for cereal rye termination are to use roller crimping only at the flowering stage to avoid regrowth [40]. Davis (2010) evaluated cereal rye termination with roller crimping versus herbicides prior to soybean planting. This author observed soybean yields of up to 3700 kg ha−1 following herbicide termination and up to 3200 kg ha−1 following termination with roller crimping (an average of 6366 kg ha−1 of biomass). Cover crops terminated late continue to absorb water from the soil until they are completely killed, which may cause water stress for the subsequent cash crop and reduce yields [41].

3. Cover Crop Effect on Weed Management

The impact of cover crops on weed suppression is well documented [41,42,43,44,45,46,47,48,49]. The presence of cover crop residue on the soil surface (no-tillage cropping systems) can result in an exponential reduction in the rate of weed emergence as residue biomass increases [50]. In addition, weeds may not compete against crops as strongly as they would in the absence of the residue [51] because of the physical barrier it creates, reduced light penetration to the soil surface, and changes in soil temperature and moisture. Wallace et al. (2019) [52] investigated the effect of cover crops on horseweed (Erigeron canadensis L.) suppression and reported a 56 to 82% reduction in density compared to the fallow control treatment right before a pre-plant burndown herbicide application. Similarly, Palhano et al. (2018) [47] examined the cereal rye suppression of Palmer amaranth (Amaranthus palmeri S. Watson) and reported 83% less emergence relative to the no cover crop treatment.

Most cover crop research focuses on the post-termination phase as it relates to weed suppression efficacy. However, the effect of cover crops, especially during early spring, cannot be disregarded. Cover crops compete for light, water, and nutrients, creating unfavorable growing conditions for weeds. The use of cereal rye as a cover crop reduces the growth rate of horseweed plants relative to fallow treatment, which leads to a higher frequency of smaller plants at the time of herbicide exposure [52]. With a cereal rye biomass up to 5400 kg ha−1, Wallace et al. (2019) [52] reported horseweed rosette diameters smaller than 2.5 cm at the time of pre-plant burndown application, whereas in the fallow treatment, rosette diameters reached up to 10 cm. These authors attributed this difference in horseweed growth to the higher use of resources by the cereal rye plants during spring, reducing the availability of water, nutrients, and light to the horseweed individuals. When no light is present, phytochrome-mediated germination is not activated and weed seedling growth does not occur [53]. Teasdale and Mohler (1993) [21] investigated the effect of increasing cereal rye biomass levels on the transmittance of photosynthetic photon flux density (PPFD) through the residue. When cereal rye biomass increased from 1230 to 7380 kg ha−1, the mean PPFD was reduced by more than 10-fold. Furthermore, cover crops such as cereal rye can release phytotoxic allelochemicals to the soil surface and further inhibit weed seed germination [54]. Among the allelochemicals already identified in cereal rye residues, β-phenyllactic acid and β-hydroxybutyric acid were documented to inhibit lambsquarters (Chenopodium album L.) and redroot pigweed (Amaranthus retroflexus L.) by 20 and 60%, respectively [55].
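The PPFD figures reported for [21] can be turned into a rough extinction coefficient. The sketch below assumes that light transmittance through residue declines exponentially with biomass, T = exp(−k·B); this functional form and the names `extinction_coefficient` and `transmittance` are illustrative assumptions, not the fitted model from [21]. Treating the reported "more than 10-fold" reduction as exactly 10-fold gives a lower bound for k.

```python
import math


def extinction_coefficient(biomass_low, biomass_high, fold_reduction):
    """Return k (ha kg^-1) such that exp(-k * B) falls by `fold_reduction`
    when residue biomass rises from biomass_low to biomass_high (kg ha^-1).

    Assumes an exponential transmittance curve (hypothetical model form).
    """
    return math.log(fold_reduction) / (biomass_high - biomass_low)


def transmittance(biomass, k):
    """Fraction of incident PPFD passing through `biomass` kg ha^-1 of residue."""
    return math.exp(-k * biomass)


# Biomass levels from the text; 10-fold is the lower bound of "more than 10-fold".
k_min = extinction_coefficient(1230.0, 7380.0, 10.0)
print(f"minimum k = {k_min:.2e} ha kg^-1")

for biomass in (1230.0, 7380.0):
    print(f"{biomass:6.0f} kg ha^-1 -> transmittance {transmittance(biomass, k_min):.3f}")
```

Under these assumptions k is at least about 3.7 × 10⁻⁴ ha kg⁻¹. The absolute transmittance values printed by the loop depend entirely on the assumed curve and should not be read as measured data.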

Biomass is considered the dominant factor in weed suppression by cover crops [21], a finding that is consistent throughout the literature [44,46,47,52]. Although cover crops also affect soil moisture and temperature, weed suppression as influenced by these factors is variable and can result in reduced or increased weed germination [21]. Under drought conditions, soil moisture conserved as a result of cover crop use can favor weed germination. Similarly, under excessive heat, reduced temperature under cover crop mulch could create a favorable scenario for weed germination.

5. Herbicide Interception by Cover Crop Residue

Herbicide placement is one of the factors that drives soil residual herbicide efficacy [59]. Soil residual herbicides applied within a no-till system are likely to be intercepted by either crop or cover crop residue that remains on the soil surface after cover crop termination or crop harvest. The fraction of the applied herbicide intercepted by crop residue varies between 15 and 80% [60,61,62,63] and that fraction does not contribute to weed control until a precipitation event washes the herbicide residue onto the soil. However, crop residue maintenance can increase water infiltration [64,65] and decrease water evaporation [65], which favors herbicide movement through the soil profile [66].

Rainfall is one of the key factors that affects the movement of herbicides from the crop residue to the soil surface, being responsible for washing the herbicides off the crop residue and into the soil [60,61,67,68,69]. Rainfall amount is more important than rainfall intensity in terms of washing off the herbicide from the crop residue [70,71,72]. Khalil et al. (2019) [73] assessed the influence of rainfall amounts (0, 5, 10, and 20 mm) and intensities (5, 10, and 20 mm h−1) on the retention of prosulfocarb, pyroxasulfone, and trifluralin on wheat residue. These authors reported greater amounts of prosulfocarb being washed off the residue under higher amounts of rainfall relative to pyroxasulfone. Rainfall events that occurred more than one day after trifluralin application did not result in more herbicide leaching to the soil surface [73]. Furthermore, trifluralin could not be detected in the wheat residue 14 days after application [73], which can be explained by the potential for the volatilization of this herbicide [74,75]. In addition to volatilization, some active ingredients (e.g., atrazine, S-metolachlor, pendimethalin, trifluralin [69]) may also be degraded through photodecomposition when not incorporated, for instance, when soil residual herbicides are applied at cover crop termination.

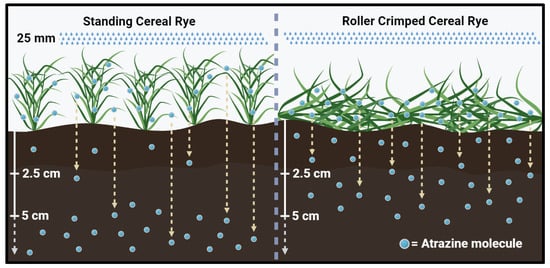

Banks and Robinson (1982) [60] investigated the interception of metribuzin by wheat straw and reported up to 99% interception of the herbicide under 8900 kg ha−1 of biomass. These authors observed that 4 days after an irrigation event of 20 mm (14 days after herbicide application), 15% of the metribuzin applied had been washed out to the soil surface. Similar results were reported by Ghadiri et al. (1984) [61], who found 60% atrazine retention by wheat straw (3400 and 3000 kg ha−1 of flat and standing straw, respectively) following application. Three weeks after application and a cumulative rainfall volume of 50 mm, the amount of atrazine initially retained by the straw was reduced by 90 and 63% in the standing and flat residues, respectively, while the amount on the soil surface increased more than 2-fold [61]. The flat residue provides greater soil coverage, intercepts more of the herbicide, and reduces the rate at which the herbicide is released onto the soil after rainfall, as represented in Figure 1. In addition, the flat residue protects the soil from the impact of the rainfall droplets, which could reduce the risks associated with herbicide leaching into the groundwater.
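The atrazine figures from [61] can be restated as shares of the applied dose. The sketch below is a minimal worked calculation, assuming that the 60% initial retention applies to both straw orientations; the helper name `straw_balance` is hypothetical. It only tracks what stays on or leaves the straw, and does not assume that everything leaving the straw reaches the soil, since volatilization and photodecomposition can also remove herbicide.

```python
def straw_balance(initial_retained_pct, reduction_pct):
    """Split the herbicide initially held by straw into what is still on the
    straw and what has left it, both as % of the applied dose.

    initial_retained_pct: % of applied herbicide intercepted by the straw
    reduction_pct: % decline of that retained amount after rainfall
    """
    still_on_straw = initial_retained_pct * (1 - reduction_pct / 100)
    left_straw = initial_retained_pct - still_on_straw
    return still_on_straw, left_straw


# Values from the text: 60% retention; 90% (standing) and 63% (flat) reductions
# after three weeks and 50 mm of cumulative rainfall.
results = {
    orientation: straw_balance(60.0, reduction)
    for orientation, reduction in (("standing", 90.0), ("flat", 63.0))
}

for orientation, (on_straw, left) in results.items():
    print(f"{orientation:8s}: {on_straw:.1f}% of applied still on straw, "
          f"{left:.1f}% no longer on straw")
```

Under that assumption, roughly 6% of the applied atrazine would remain on standing straw versus about 22% on flat straw, which matches the slower release described for flat residue.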

In addition to rainfall and straw biomass, other factors such as residue type, age, and herbicide active ingredient were also documented to affect soil residual herbicide interception and wash off from straw mulch [76]. A bioassay conducted by Khalil et al. (2018) [76] showed that regardless of the straw biomass levels tested, pyroxasulfone treatments resulted in the complete control of annual ryegrass, while trifluralin and prosulfocarb resulted in complete control only up to 1000 kg ha−1 of straw biomass. Therefore, the influence of straw biomass on herbicide wash off differs among herbicide active ingredients. In addition, residue age can also affect herbicide interception. As crop residue ages, more soil becomes exposed, and therefore, more herbicide can reach the soil at the time of application [76].

The sorption capacity of crop residues is linked to their lignin and cellulose content, with greater sorption of herbicides onto plant stubble with higher lignin content [77]. In general, aged crop residue provides greater adsorption of herbicides as cellulose decomposes and more lignin is exposed [78]. The lignin content of aged and fresh residue of broadleaf crop species can vary substantially. For example, one-year-aged canola residue can have 16% lignin (as a percentage of dry matter), while fresh residue can have 12% [76]. However, in cereals, the lignin content seems to vary less given their slower decomposition [76,79] and might follow the opposite trend. For instance, Khalil et al. (2018) [76] reported one-year-aged wheat residue as having 6.8% lignin, whereas fresh wheat residue had 9.1% (as a percentage of dry matter). Residue age also affects ground cover, with older residue providing reduced ground cover compared with fresh residue [76].

The lack of available literature on the application of soil residual herbicides at cover crop termination warrants further investigation into whether increased herbicide interception at the time of application results in reduced weed control. The amount of herbicide that reaches the soil at the time of application is inversely related to the cover crop biomass [57]. The herbicide that is intercepted by cover crop biomass will remain on the plant surface until a rainfall or irrigation event moves it to the soil, where it can provide weed control.

6. Biodegradation of Soil Residual Herbicides

Soil residual herbicides applied at cover crop termination can be intercepted by the cover crop, reach the soil, or be lost via volatilization or photodegradation. Once in the soil, the herbicide molecules can be adsorbed to clay particles, leach to the groundwater, or degrade through chemical or biological processes. Biological degradation is considered the primary mechanism of herbicide degradation and is primarily catalyzed by enzymes produced by soil microbes, roots, and animals [31]. The process of herbicide degradation by soil microbes to obtain C and N to sustain their metabolism is called mineralization and results in the complete dissipation of the herbicide and its conversion into CO2, water, and other inorganic compounds [80].

The impact of soil residual herbicides on soil microbial activity is well documented [81,82,83,84]. This impact can be negative or positive and direct or indirect (as shown in Table 1) and may eventually lead to changes in the nutrient cycles within the soil [85]. One approach to evaluate how and why changes in soil microbial activity occur is by measuring the activity of enzymes linked to the nutrient cycles.

Soil enzymes are categorized into indicators of overall microbial activity (e.g., dehydrogenase; intracellular) or specific to certain nutrient cycles (e.g., hydrolases; extracellular). Currently, the most common indicators of enzymatic activity in soils are hydrolase reactions [86,87,88]. The hydrolases are responsible for catalyzing the C, N, phosphorus (P), and sulfur (S) cycles in the soil. Within hydrolases, β-glucosidase is responsible for the decomposition of organic matter, which ultimately results in the production of glucose, a carbon energy source for soil microbes [89].

Currently, there is no consensus about the effect of herbicides on β-glucosidase activity. While some researchers have reported no effect [90,91], others have reported either negative [92] or positive impacts [93,94] of herbicides on β-glucosidase activity. The response of a soil enzyme to a given pesticide is practically unpredictable because different pesticides can increase, decrease, or have no effect on enzyme activity, and the response also varies with soil type and pesticide rate [95].

Dehydrogenase is classified within the oxidoreductases, the largest enzyme group, and is responsible for catalyzing redox reactions within the soil [96]. During redox reactions, two hydrogen atoms are transferred from an organic compound (e.g., a herbicide molecule) to cofactors (NAD+ or NADP+) and then to an acceptor molecule (e.g., molecular oxygen) [97]. Dehydrogenase functions inside the cells of all living organisms [97]. Unlike β-glucosidase, dehydrogenase generally shows reduced activity in the presence of herbicides [83,84].

Table 1.

Effect of herbicides on enzyme activity in the soil (adapted from [98]).

| Enzyme | Herbicide | Herbicide Effect (28 to 50 Days of Incubation) | Reference |
|---|---|---|---|
| β-glucosidase | Linuron | No effect | [90] |
| β-glucosidase | Metribuzin | No effect | [90] |
| β-glucosidase | Diflufenican | – | [99] |
| β-glucosidase | Glyphosate | – | [99] |
| Dehydrogenase | Atrazine | – | [82] |
| Dehydrogenase | Diuron | No effect | [100] |
| Dehydrogenase | Butachlor | ++ | [101] |
| Alkaline phosphatase | Bromoxynil | ++ | [91] |
| Alkaline phosphatase | Imazethapyr | + | [81] |
| Alkaline phosphatase | Rimsulfuron | No effect | [81] |

Lucas O. R. Maia www.mdpi.com