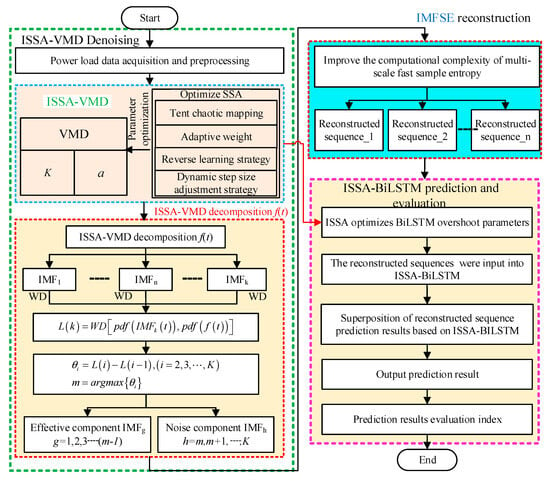

5.1. IMFSE Algorithm Model

As a classical entropy algorithm, the sample entropy algorithm requires only short data records and is robust to interference, so it is widely used in feature extraction and analysis. However, its feature extraction process contains redundant operations, which lowers the computational efficiency of entropy feature extraction.

To address the redundant operations in the sample entropy algorithm, this study introduces the idea of a symbolic variable matrix. The matrix replaces the phase space reconstruction process of the sample entropy algorithm and simplifies two steps: calculating the distances between vectors after time-series reconstruction, and counting the distances that fall below the threshold. The result is an efficient, improved sample entropy algorithm, as shown in Figure 7.

The specific calculation steps of the fast sample entropy algorithm are given below, with the relevant parameters set as follows: dimension $m = 2$ and threshold $r = 0.20\,\mathrm{std}(a)$.

Step 1: Phase space reconstruction. The first step of the fast sample entropy algorithm is to reconstruct the phase space of the time series $A = [a_i]$ (where $i = 1 \sim N$) in $m + 1$ dimensions; that is, to construct $N - m$ vectors $W_u$ from the original sequence $A$ and then stack all the vectors $W_u$ to obtain the reconstruction matrix $W$, where $u = 1 \sim N - m$.
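The reconstruction in Step 1 can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: the function name `reconstruct` and the row-per-lag layout (each column holding one vector $W_u$) are assumptions made here for clarity.

```python
def reconstruct(series, m):
    """Build an (m + 1) x (N - m) matrix W whose u-th column is W_u.

    Assumed layout: row k holds the series delayed by k samples, so
    column u is [a_u, a_(u+1), ..., a_(u+m)].
    """
    n = len(series)
    if n <= m:
        raise ValueError("series must contain more than m samples")
    n_vectors = n - m
    return [series[k:k + n_vectors] for k in range(m + 1)]


# Toy series with N = 6 and m = 2, giving N - m = 4 vectors.
A = [1.0, 2.0, 1.0, 2.0, 1.0, 2.0]
W = reconstruct(A, m=2)
for row in W:
    print(row)
```

Storing the vectors as columns keeps the later row-wise checks (first $m$ rows versus all $m + 1$ rows) simple.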

Step 2: Distance comparison. The column vectors $W_u$ of the matrix $W$ are taken in the order $u = 1 \sim N - m$. Each one is subtracted from every other vector in $W$ (excluding itself), and the absolute value of each difference is taken, which yields the distance matrix $S$ containing the distances between all vectors.
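As an illustration of Step 2, the sketch below computes the element-wise absolute differences between one column of $W$ and every other column. The helper name `abs_differences` and the choice to return one block of $S$ per reference column are assumptions; the text does not specify how $S$ is laid out in memory.

```python
def abs_differences(W, u):
    """Return |W_u - W_v| element by element for every column v != u.

    The result has the same number of rows as W and one column per
    compared vector (the reference column itself is excluded).
    """
    n_cols = len(W[0])
    others = [v for v in range(n_cols) if v != u]
    return [[abs(row[u] - row[v]) for v in others] for row in W]


# Reconstruction matrix of the toy series [1, 2, 1, 2, 1, 2] with m = 2.
W = [
    [1.0, 2.0, 1.0, 2.0],
    [2.0, 1.0, 2.0, 1.0],
    [1.0, 2.0, 1.0, 2.0],
]
S0 = abs_differences(W, 0)
for row in S0:
    print(row)
```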

Step 3: 0–1 matrix. Every element of the distance matrix $S$ is compared with the threshold $r$: if $S(i) < r$, then $Z(i) = 1$; if $S(i) > r$, then $Z(i) = 0$. The result is a 0–1 matrix $Z$.
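The thresholding in Step 3 amounts to a single comparison per element. In the sketch below, the function name `binarize` is hypothetical, the threshold value is an arbitrary illustrative number rather than one derived from the data, and an element exactly equal to $r$ is mapped to 0, which is an assumption because the text does not define that case.

```python
def binarize(S, r):
    """Map each distance to 1 if it is below the threshold r, else 0.

    Assumption: a distance exactly equal to r is treated as 0.
    """
    return [[1 if d < r else 0 for d in row] for row in S]


S = [
    [1.0, 0.0, 1.0],
    [1.0, 0.0, 1.0],
    [1.0, 0.0, 1.0],
]
Z = binarize(S, r=0.2)
for row in Z:
    print(row)
```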

Step 4: Number statistics and ratio calculation. First, the number of vectors whose distance is less than the threshold is counted. The symbolic variable matrix $Z$ has $m + 1$ rows. The number of columns of $Z$ whose first $m$ rows are all ones is counted and defined as $N_i^m$; the number of columns whose $m + 1$ rows are all ones is counted and defined as $N_i^{m+1}$. Second, the ratio of the number of vectors below the threshold to the total number of vectors is calculated: the $m$-dimensional count $N_i^m$ and the $(m + 1)$-dimensional count $N_i^{m+1}$ are each divided by the total number of vectors $N - m$ of the symbolic variable matrix $Z$, which gives the proportions $c_i^m(r)$ and $c_i^{m+1}(r)$, respectively.
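The counting in Step 4 can be sketched as a column scan of the 0–1 matrix. The sketch assumes that a "unit vector" means a column whose relevant rows are all ones; the function name `match_ratios` and the example matrix are hypothetical.

```python
def match_ratios(Z, m, n_vectors):
    """Return (c_m, c_m1) for one reference vector.

    A column counts toward N_i^m when its first m rows are all ones and
    toward N_i^(m+1) when all m + 1 rows are ones. Both counts are divided
    by n_vectors, the N - m of the text.
    """
    columns = list(zip(*Z))  # iterate over Z column by column
    n_m = sum(1 for col in columns if all(col[:m]))
    n_m1 = sum(1 for col in columns if all(col[:m + 1]))
    return n_m / n_vectors, n_m1 / n_vectors


# Hypothetical 0-1 matrix with m + 1 = 3 rows and 3 compared vectors.
Z = [
    [1, 1, 0],
    [1, 1, 1],
    [0, 1, 0],
]
c_m, c_m1 = match_ratios(Z, m=2, n_vectors=4)
print(c_m, c_m1)
```

Here two columns match in the first two rows and only one matches in all three, so the $m$-dimensional proportion is larger than the $(m + 1)$-dimensional one.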

Step 5: Entropy calculation. First, the average values of all $c_i^m(r)$ and $c_i^{m+1}(r)$ are calculated to obtain $B^m(r)$ and $B^{m+1}(r)$, respectively. Then, the entropy-value calculation principle is applied to these averages to solve for the fast sample entropy $\mathrm{FSampEn}(m, r, N)$.
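Putting the five steps together, a compact end-to-end sketch might look as follows. Several points are assumptions rather than statements from the text: the final formula is taken to be the classical sample entropy form $-\ln\left(B^{m+1}(r) / B^m(r)\right)$, `std` is taken as the population standard deviation (`statistics.pstdev`), ties at the threshold count as non-matches, and the function returns infinity when no matches are found. The name `fast_sample_entropy` is hypothetical.

```python
import math
import statistics


def fast_sample_entropy(series, m=2, r_factor=0.20):
    """Sketch of the five steps using a 0-1 comparison per vector pair."""
    n = len(series)
    if n <= m + 1:
        raise ValueError("series must contain more than m + 1 samples")
    r = r_factor * statistics.pstdev(series)  # assumed meaning of std(a)
    n_vectors = n - m
    # Step 1: (m + 1) x (N - m) reconstruction matrix, one vector per column.
    W = [series[k:k + n_vectors] for k in range(m + 1)]
    total_m = 0   # sum over i of N_i^m
    total_m1 = 0  # sum over i of N_i^(m+1)
    for u in range(n_vectors):
        for v in range(n_vectors):
            if v == u:
                continue  # a vector is not compared with itself
            # Steps 2 and 3: absolute differences turned into 0-1 flags.
            flags = [abs(row[u] - row[v]) < r for row in W]
            # Step 4: all ones in the first m rows, then in all m + 1 rows.
            if all(flags[:m]):
                total_m += 1
                if flags[m]:
                    total_m1 += 1
    if total_m == 0 or total_m1 == 0:
        return math.inf  # the logarithm is undefined without matches
    # Step 5: averages of c_i^m(r) and c_i^(m+1)(r), then the log ratio.
    b_m = total_m / (n_vectors * n_vectors)
    b_m1 = total_m1 / (n_vectors * n_vectors)
    return -math.log(b_m1 / b_m)


periodic = [1.0, 2.0] * 10
print(fast_sample_entropy(periodic))
```

A strictly periodic series gives an entropy of zero here, because every $m$-dimensional match is also an $(m + 1)$-dimensional match; less regular series give larger values.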

The entropy results of the fast sample entropy algorithm are related only to parameters such as $m$, $r$, and $N$. The calculation process of the fast sample entropy algorithm based on the symbolic variable matrix is shown in Figure 8.