1. Introduction

Optimization is a fundamental aspect of scientific research and engineering that aims to identify the most effective solution among many feasible options under given constraints [1, 2, 3, 4]. It plays a pivotal role in decision-making across various domains, including logistics, manufacturing, finance, and artificial intelligence [5]. Most problems in science and engineering can be formulated as optimization problems [6].

Optimization problems are classified according to the nature of their objective function, their constraints, and the variables involved [7, 8, 9]. Among optimization techniques, exact methods, which are designed to find the optimal solution with precision guarantees for certain problem types, represent a critical category [2]. Exact methods are particularly powerful where the requirements for accuracy and certainty outweigh the need for computational efficiency. However, these methods are generally ineffective for complex optimization problems because they depend on preconditions such as continuity, convexity, and differentiability of the objective function, which are rarely met in real-world optimization problems [7, 8, 10, 11].

The quest for a balance between computational efficiency and solution quality has spurred significant interest in heuristic and metaheuristic methods [7, 8, 12]. These algorithms have proven effective at navigating complex search spaces to efficiently deliver near-optimal solutions [9, 13, 14, 15]. In recent years, significant advancements in metaheuristic algorithms have been reported. Notable developments include the marine predator algorithm (MPA) [16], which models hunting strategies in marine ecosystems, and the equilibrium optimizer (EO) [17], which is based on mass balance principles. Henry gas solubility optimization (HGSO) [18] draws inspiration from gas solubility dynamics, whereas the modified artificial gorilla troops optimizer (MAGTO) [19] enhances primate behavior modeling. Tuna swarm optimization (TSO) [20] mimics tuna hunting patterns, and the rain optimization algorithm (ROA) [21] simulates precipitation processes. The modified Harris hawk optimization (MHHO) [22] improves upon cooperative hunting strategies. The Brown-Bear Optimization Algorithm (BBOA) [23] simulates the hunting and foraging behavior of brown bears, whereas the Energy Valley Optimizer (EVO) [24] draws inspiration from energy landscapes in physical systems. Fick’s law algorithm (FLA) [25] leverages principles of molecular diffusion, and the Honey Badger Algorithm (HBA) [26] models the aggressive foraging strategies of honey badgers. The Hunger games search (HGS) [27] implements competitive survival dynamics. Other significant contributions include the arithmetic optimization algorithm (AOA) [28], the modified whale optimization algorithm (MWOA) [29], and the enhanced leader particle swarm optimization (ELPSO). This proliferation of algorithms highlights both the field’s dynamism and the ongoing challenge of developing truly novel optimization mechanisms.

Despite the success of metaheuristics in addressing a broad spectrum of optimization challenges, the demand for more efficient, robust, and versatile algorithms persists, driven by increasingly complex optimization problems. As the field evolved through the late 20th and early 21st centuries, the number of metaheuristic algorithms grew to over 500 by the end of 2023, each designed to address specific complex issues [30]. Research suggests that many of the newly developed algorithms are enhancements or variations of existing methods [7, 31, 32]. This trend reflects the iterative nature of science but has also drawn several criticisms. A significant concern is the lack of novelty, with many new algorithms being minor modifications rather than genuine innovations [33]. Additionally, biases such as center bias skew the performance results by favoring solutions near the center of the search space [34]. Another issue is the inconsistency between the proposed metaphors, mathematical models, and algorithm implementations. Often, the metaphors do not translate into the algorithm’s framework, leading to a disconnect between theory and practice [35]. Furthermore, the lack of consistent and transparent benchmarking complicates the assessment of algorithm efficacy [36]. Selectively chosen datasets and metrics can lead to biased conclusions that do not hold in broader applications.

In this context, we introduce the electrical storm optimization (ESO) algorithm, a novel mathematical approach inspired by the dynamic behavior of electrical storms. This algorithm draws from the observation that lightning follows the path of least resistance to the ground, analogous to finding the optimal path through a complex search space. The ESO algorithm uses three dynamically adjusted parameters (field resistance, field conductivity, and field intensity) to adapt the search process in real time. This allows the algorithm to transition seamlessly between the exploration and exploitation phases on the basis of the immediate needs of the optimization landscape. Unlike algorithms that rely on interagent communication or fixed update mechanisms, the ESO approach is driven autonomously by environmental responses, increasing its adaptability across both discrete and continuous problem spaces. This study delineates the conceptual foundation of the ESO, its algorithmic structure, and potential implications for advancing the field of optimization.

The main objective of this study is to develop a robust and efficient optimization algorithm that can effectively handle both unimodal and multimodal optimization problems while maintaining consistent performance across varying dimensionalities. This work offers several key scientific contributions: (1) the introduction of a novel dynamic adaptation mechanism that automatically adjusts search parameters on the basis of real-time feedback from the optimization landscape, enabling seamless transitions between exploration and exploitation phases; (2) a self-regulating framework that reduces the need for parameter tuning across different problem types, enhancing the algorithm’s practical applicability; (3) a mathematically rigorous approach to distance-based resistance calculation that provides more accurate guidance for the search process; and (4) a computationally efficient implementation that scales well with problem dimensionality while maintaining competitive performance. These contributions address current limitations in the field of optimization algorithms, particularly in terms of adaptation capabilities and computational efficiency.

2. Materials and Methods

Thunderstorms are among the most potent phenomena in nature and are characterized by lightning occurring within cumulonimbus clouds. These clouds experience strong updrafts and downdrafts that move water particles across different altitudes. Collisions between droplets and ice particles, combined with temperature and pressure differences, lead to charge separation: positive charges accumulate at the cloud tops and negative charges at the bases. This results in a strong electric field within the clouds and between the clouds and the surface of the Earth [37, 38]. When the potential difference exceeds the dielectric strength of air, an electrical discharge, known as lightning, occurs. This discharge, influenced by wind, humidity, and particulates, can occur within a cloud, between clouds, or from clouds to the ground and is subject to environmental obstacles such as terrain or structures, which can alter the path of lightning [39].

The ESO algorithm draws inspiration from these dynamics, particularly the way in which lightning seeks the path of least resistance. This natural process is applied to solve optimization problems, where the algorithm seeks optimal solutions by navigating through problem spaces in a manner analogous to lightning navigating atmospheric obstacles.

Field resistance (R): In thunderstorms, electrical resistance determines the ease with which lightning can travel through the different regions of the atmosphere [40, 41]. In the ESO algorithm, this parameter measures the dispersion of solutions within the search space, guiding the algorithm’s adjustments on the basis of the problem’s topology.

Field intensity (I): The intensity of the electric field in a thunderstorm governs the strength and frequency of lightning discharges [42]. Analogously, this parameter controls the algorithmic phases, facilitating strategic shifts between exploration and exploitation.

Field conductivity: Electrical conductivity measures the ability of a material or system to conduct electric current and is the reciprocal of resistance. Reflecting the adaptability required by lightning to navigate varying atmospheric conditions, this parameter dynamically modulates the algorithm’s response to changes in field resistance, enhancing its ability to fine-tune the search process.

Storm power (P): Storm power is inspired by the cumulative and finite potential energy present in a thunderstorm. In the ESO algorithm, P measures the remaining energy and potential for exploration during the search process. This ensures that the algorithm’s efforts are proportional to the current state of variability and intensity in the search space, maintaining an adaptive balance between exploration and exploitation.

Ionized areas: In thunderstorms, ionized areas are regions where the air has become electrically conductive due to ionization, allowing lightning to travel through these paths of least resistance [40, 42]. In the ESO algorithm, the ionized areas represent regions in the search space where the probability of finding optimal solutions is higher. By identifying and targeting these ionized areas, the algorithm can navigate the search space more effectively, enhancing its efficiency in locating optimal solutions.

By leveraging nature-inspired dynamics, the ESO offers a structured and adaptive approach to complex optimization challenges, effectively translating these natural phenomena into computational strategies.

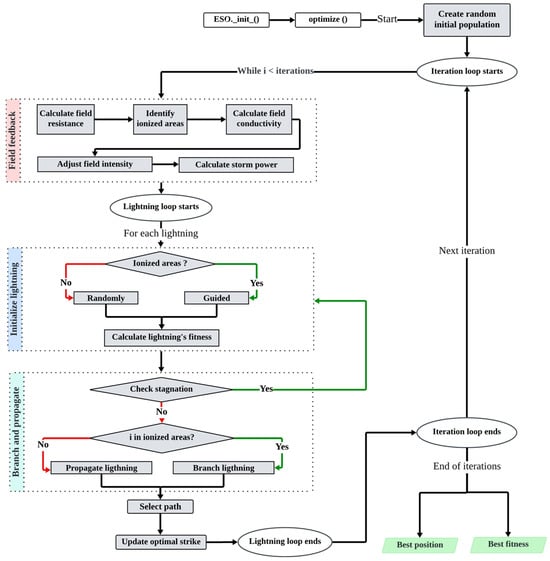

Figure 1 illustrates how these dynamics are operationalized within the algorithm.

During the first iteration of the optimization process, the lightning agents are positioned randomly within the defined lower and upper bounds of the search space in each dimension. Each agent is represented as a vector with both an origin and a direction that can explore the search space. Once these initial positions are established, the fitness of each lightning agent is calculated by evaluating the objective function at the agent’s position. This initial assessment is crucial, as it sets the baseline for the algorithm’s iterative process of refining positions to find optimal solutions.
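The first iteration described above can be sketched as follows. This is a minimal illustration rather than the authors’ implementation: the function name `init_lightning`, the sphere objective, the seed, and the bounds are all assumptions chosen for the example.

```python
import random
from typing import List, Sequence

Vector = List[float]


def init_lightning(n_agents: int, lower: Sequence[float],
                   upper: Sequence[float], rng: random.Random) -> List[Vector]:
    """Draw each coordinate uniformly between its lower and upper bound."""
    return [[rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
            for _ in range(n_agents)]


def sphere(x: Vector) -> float:
    """Placeholder objective (assumption): sum of squares, minimum at the origin."""
    return sum(v * v for v in x)


rng = random.Random(0)                      # fixed seed for repeatability
lower, upper = [-5.0] * 3, [5.0] * 3        # a 3-dimensional box (assumption)
positions = init_lightning(50, lower, upper, rng)
fitness = [sphere(p) for p in positions]    # baseline fitness of every agent
best_index = min(range(len(fitness)), key=fitness.__getitem__)
```

The baseline `fitness` list is what later iterations would try to improve upon.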

2.1. Field Resistance

The concept of field resistance (R) in the ESO algorithm is crucial for effectively managing the optimization process. It serves as a real-time feedback mechanism that reflects the landscape of the optimization problem, enabling the algorithm to dynamically control its operational phases. In each iteration (j), the field resistance is calculated as the ratio between the standard deviation and the peak-to-peak difference of the positions of all lightning agents, whose total number is an argument of the algorithm. The standard deviation is computed from the deviation of each position from the mean position, that is, the average of all the positions. The mathematical representation of this calculation is provided in Equations (1)–(3). A higher R indicates a greater need for exploration, suggesting that the current solutions are widely spread and possibly distant from any optimum. Conversely, a lower R indicates a denser clustering of solutions, suggesting proximity to potential optima and thus a greater need for exploitation to fine-tune the solutions.
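As a rough illustration of the ratio described in this section, the sketch below computes a field resistance value from a set of positions. It assumes that the standard deviation and the peak-to-peak difference are taken over all coordinates of all agents at once and that the population (not sample) standard deviation is used. The text does not state either choice, and the function name is hypothetical.

```python
import random
import statistics
from typing import List


def field_resistance(positions: List[List[float]]) -> float:
    """Standard deviation divided by peak-to-peak range of all coordinates."""
    coords = [v for p in positions for v in p]   # flatten (assumption)
    spread = max(coords) - min(coords)           # peak-to-peak difference
    if spread == 0.0:                            # all agents coincide
        return 0.0
    return statistics.pstdev(coords) / spread


rng = random.Random(0)
population = [[rng.uniform(-5.0, 5.0) for _ in range(2)] for _ in range(50)]
r_value = field_resistance(population)
```

Under this reading the value is dimensionless and lies between 0 and 0.5, because a population standard deviation can never exceed half of the range.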

2.2. Ionized Areas

The ionized areas are identified as the zones within the search space where the solutions are superior, indicating lower resistance. This involves selecting a subset of the best solutions on the basis of their fitness. First, a percentile of the fitness values of all the solutions is calculated via Equation (4). Then, the solutions whose fitness values are better than this percentile (lower for minimization, higher for maximization) are selected, as expressed in Equation (5). This process ensures that the algorithm can identify and focus on the most promising areas of the search space, facilitating a more directed and potentially more efficient search.
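The percentile-based selection can be sketched as below for a minimization problem. The percentile level is not given in the text, so the default of 25 is purely an assumption, as are the nearest-rank percentile rule and the function name.

```python
import math
from typing import List


def ionized_areas(positions: List[List[float]], fitness: List[float],
                  percentile: float = 25.0) -> List[List[float]]:
    """Keep the positions whose fitness is at or below the given percentile."""
    ranked = sorted(fitness)
    # Nearest-rank percentile (assumption): index of the cut-off value.
    k = max(1, math.ceil(percentile / 100.0 * len(ranked)))
    threshold = ranked[k - 1]
    return [p for p, f in zip(positions, fitness) if f <= threshold]


positions = [[0.0], [1.0], [2.0], [3.0]]
fitness = [4.0, 1.0, 3.0, 2.0]
best_half = ionized_areas(positions, fitness, percentile=50.0)
```

For a maximization problem the comparison would be reversed and the cut-off taken from the upper end of the ranking.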

2.3. Field Conductivity

The field conductivity dynamically adjusts the search strategy in response to changes in the field resistance (R) in each iteration (j), allowing the ESO algorithm to modulate exploration and exploitation on the basis of the current environmental resistance. This parameter indicates how easily lightning can progress toward the optimum. It is computed via a logistic function, as expressed in Equations (6) and (7). The nonlinear nature of the logistic function makes the field conductivity highly reactive to small changes in R, allowing for quick and effective adjustments to the search strategy. This reactivity is essential for handling the variability and complexity of search spaces, as a less responsive adjustment results in slower convergence. A high field resistance results in a low field conductivity, promoting exploration. Conversely, a low R increases the field conductivity, favoring exploitation.
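The qualitative behavior described here (a logistic response that falls as the field resistance rises) can be illustrated with a small sketch. The steepness and midpoint values are placeholders, not the constants of Equations (6) and (7), which are not reproduced in this text, and the function name is hypothetical.

```python
import math


def field_conductivity(resistance: float, steepness: float = 20.0,
                       midpoint: float = 0.25) -> float:
    """Decreasing logistic curve: high resistance gives low conductivity."""
    # steepness and midpoint are illustrative assumptions.
    return 1.0 / (1.0 + math.exp(steepness * (resistance - midpoint)))


low_r = field_conductivity(0.05)    # clustered solutions, favors exploitation
high_r = field_conductivity(0.45)   # dispersed solutions, favors exploration
```

A larger `steepness` makes the response to small changes in resistance sharper, which is the reactivity that the section emphasizes.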

2.4. Field Intensity

The field intensity (I) dynamically adjusts the storm stages during the optimization process through a logistic function. This provides a nonlinear response to variations in field resistance (R), with an imposed decreasing trend governed by the current iteration (j) relative to the total number of iterations (iter), allowing the algorithm to adapt its behavior over time, as in Equation (8). This adjustment models three distinct stages of storm development: an initial exploration phase, a transitional phase, and a final exploitation phase. The field intensity is then calculated as the product of this logistic term and the field conductivity, as in Equation (9).

Figure 2 shows the conceptual behavior of I. The slope of the curve (m) and the value of the sigmoid’s midpoint are adjusted on the basis of the value of R. Consequently, when R is high, the field intensity is increased to promote greater exploration of the search space. Conversely, when R is low, the field intensity is decreased to encourage the exploitation of promising areas near the current best solution. This dynamic adjustment of the field intensity ensures a balance between exploration and exploitation, maintaining the effectiveness and efficiency of the search process throughout the optimization.
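A hedged sketch of this behavior is given below: a logistic stage term that decays as the iteration count approaches the budget, with slope and midpoint that both depend on the field resistance, multiplied by the field conductivity. The specific dependence on resistance (`base_slope * (1 + R)` and `0.5 + R`) is invented for illustration and should not be read as Equation (8).

```python
import math


def field_intensity(resistance: float, conductivity: float,
                    iteration: int, max_iter: int,
                    base_slope: float = 10.0) -> float:
    """Logistic stage term (decaying with progress) times conductivity."""
    progress = iteration / max_iter              # fraction of the budget used
    slope = base_slope * (1.0 + resistance)      # assumption: steeper when R is high
    midpoint = 0.5 + resistance                  # assumption: decay delayed when R is high
    stage = 1.0 / (1.0 + math.exp(slope * (progress - midpoint)))
    return stage * conductivity


early = field_intensity(0.3, 1.0, iteration=0, max_iter=100)
late = field_intensity(0.3, 1.0, iteration=100, max_iter=100)
```

With the conductivity held fixed, the stage term in this sketch falls over the run and rises with resistance, matching the trend described for Figure 2.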

2.5. Storm Power

Storm power (P) plays a crucial role in quantifying the cumulative potential of the search mechanism at any given point during the optimization process. It serves as an adjustment to the intensity of the perturbation introduced to the lightning positions and to the step size for the local search. It is calculated as the product of two dynamically varying factors, as in Equation (10). This definition is inspired by the physical concept of electrical loss, where power loss occurs due to resistance within a system. In the context of the ESO algorithm, this concept is adapted to calculate the remaining power of the storm during the exploration process. This adaptive mechanism ensures that the algorithm remains responsive to the topology of the search space, enabling it to dynamically balance exploration and exploitation on the basis of real-time feedback from the optimization environment.

2.6. Lightning Initialization

The initialization process involves strategically positioning new agents either within previously identified promising regions (ionized areas) or randomly within the search space. If ionized areas are present, the position of a new lightning event is determined by selecting a position from the ionized areas and applying a perturbation equal to the storm power (P) value. If no ionized area is present, the position is given by a uniform random distribution within the lower and upper bounds of each dimension, as in Equation (11).
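The two branches of this initialization rule can be sketched as follows. The form of the perturbation (uniform noise in the range ±P added to every coordinate) and the clipping to the bounds are assumptions, as is the function name; Equation (11) itself is not reproduced in this text.

```python
import random
from typing import List, Sequence


def new_lightning(ionized: List[List[float]], lower: Sequence[float],
                  upper: Sequence[float], power: float,
                  rng: random.Random) -> List[float]:
    """Start near an ionized position if any exist, else anywhere in the box."""
    if not ionized:
        # No promising region known yet: uniform draw within the bounds.
        return [rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
    base = rng.choice(ionized)
    # Assumption: perturb each coordinate by uniform noise in [-power, power].
    moved = [x + rng.uniform(-power, power) for x in base]
    # Assumption: keep the new agent inside the search box.
    return [min(max(x, lo), hi) for x, lo, hi in zip(moved, lower, upper)]


rng = random.Random(1)
lower, upper = [-5.0, -5.0], [5.0, 5.0]
near_ionized = new_lightning([[1.0, 1.0]], lower, upper, 0.1, rng)
anywhere = new_lightning([], lower, upper, 0.1, rng)
```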

2.7. Branching and Propagation

The ESO algorithm simulates the branching and propagation of lightning via interactions with ionized areas within the search space. The new position of an active lightning agent is determined on the basis of whether its current position is within an ionized area or not. For lightning inside an ionized area, the new position is adjusted by the storm power (P), aiming to explore nearby promising areas. For lightning outside of the ionized areas, the new position is influenced by the average position of the ionized areas, scaled by a random uniform perturbation and the storm power (P), facilitating the exploration of areas with known high-quality solutions, as shown in Equation (12).
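One possible reading of this update rule is sketched below. Agents that belong to the ionized set take a small random step of size at most P, and all other agents are relocated around the centroid of the ionized set with the same noise. The exact form of Equation (12) is not reproduced in this text, so the noise model, the exact-match membership test, and the function name are assumptions.

```python
import random
from typing import List


def propagate(position: List[float], ionized: List[List[float]],
              power: float, rng: random.Random) -> List[float]:
    """Move an agent locally if ionized, else toward the ionized centroid."""
    if not ionized:
        return list(position)                    # nothing to steer toward
    if position in ionized:
        # Local branching: small step around the current position.
        return [x + rng.uniform(-power, power) for x in position]
    dims = len(position)
    centroid = [sum(p[d] for p in ionized) / len(ionized) for d in range(dims)]
    # Assumption: land near the average ionized position.
    return [c + rng.uniform(-power, power) for c in centroid]


rng = random.Random(2)
ionized = [[1.0, 1.0], [3.0, 1.0]]
inside = propagate([1.0, 1.0], ionized, 0.2, rng)
outside = propagate([-4.0, 4.0], ionized, 0.2, rng)
```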

2.8. Selection and Evaluation of New Positions

The optimization process seeks the optimal solution within complex and vast search spaces. Evaluating improvements is therefore a critical component of the algorithm, which focuses on the iterative refinement of agent (lightning) positions within the search space. The primary objective of this process is to evaluate new potential positions for each agent and determine whether moving to these new positions would improve the fitness of the objective function. This decision-making process is central to the algorithm’s ability to converge on optimal or near-optimal solutions. By systematically comparing the fitness at the new position with the fitness at the best position found thus far, the algorithm decides whether adopting the new path would bring the search closer to the optimization goal, as in Equations (13) and (14). The fitness is obtained by evaluating the objective function at the current coordinates of each lightning strike. Algorithm 1 presents the pseudocode of the main processes in the algorithm.
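The comparison step amounts to a greedy acceptance rule, sketched here for minimization. The function and variable names are illustrative and the quadratic objective is a placeholder.

```python
from typing import Callable, List, Tuple


def select(best: List[float], best_fit: float, candidate: List[float],
           objective: Callable[[List[float]], float]) -> Tuple[List[float], float]:
    """Adopt the candidate only if it improves on the best fitness so far."""
    cand_fit = objective(candidate)
    if cand_fit < best_fit:                      # strict improvement (minimization)
        return candidate, cand_fit
    return best, best_fit


def quadratic(x: List[float]) -> float:
    """Placeholder objective (assumption)."""
    return sum(v * v for v in x)


best, best_fit = [2.0, 2.0], quadratic([2.0, 2.0])
best, best_fit = select(best, best_fit, [1.0, 0.0], quadratic)   # accepted
best, best_fit = select(best, best_fit, [3.0, 3.0], quadratic)   # rejected
```

Because a worse candidate is never accepted, the best fitness is non-increasing over the iterations.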

| Algorithm 1. Pseudocode of the main processes of the electrical storm optimization (ESO) algorithm |


3. Results

The effectiveness of the ESO algorithm was assessed through a series of diverse optimization challenges. The evaluation began with 25 primitive unimodal and multimodal problems, testing the efficiency of the ESO in escaping from local optima and converging to the global optimum. It then proceeded to 20 shifted and rotated problems to explore its ability to navigate multiple local optima in complicated landscapes. Additionally, the ESO faced the IEEE Congress on Evolutionary Computation (CEC) 2022 Single Objective Bound Constrained (SOBC) benchmark set, which includes rotated, shifted, hybrid, and composite functions, showcasing its adaptability to intricate and computationally intensive tasks [43]. The real-world applicability of the algorithm was further validated by its performance on six engineering problems.

The ESO algorithm was benchmarked against 15 of the most cited and utilized metaheuristic algorithms and 5 recently published algorithms, as shown in Table 1 [2, 7, 10, 12, 32, 44, 45, 46]. Moreover, the comparison set includes the LSHADE algorithm, which won numerous editions of the IEEE CEC competition [47, 48, 49, 50]. The implementations of the algorithms were retrieved from the Mealpy v3.0.1 Python library, which is known for its robust and standardized implementations of optimization algorithms, providing a consistent platform for comparative evaluations [8]. To ensure fairness, standard hyperparameters were used, with a population size of 50 and a maximum of 50,000 objective function evaluations. Fifty independent runs were conducted per problem, with no parameter tuning between problems. The default parameters for each algorithm were retrieved from their corresponding papers, as shown in Table 1. The comparison focuses on the results of the top ten performing algorithms; the results for the ten lower-performing algorithms are detailed in Appendix A (Tables A1, A2, and A3).

3.1. Primitive Benchmark Functions

Unimodal benchmark functions are crucial for evaluating the performance of metaheuristic algorithms, particularly their exploitation capabilities within optimization landscapes. With a single global optimum and no local optimum, they effectively assess an algorithm’s precision in converging to the global solution. The primary challenge lies in exploiting the search space while navigating environments where gradient information is absent or misleading, limiting direct feedback about the optimum location. This lack of gradients requires algorithms to rely on indirect methods to infer direction and distance to the global optimum. Consequently, algorithms need efficient strategies that transition smoothly from exploration to exploitation, even without explicit gradient information.

Multimodal benchmark functions are essential for assessing the performance of metaheuristic algorithms designed for complex optimization challenges. These functions, characterized by numerous local optima, require sophisticated navigation to distinguish between local and global optima. Functions such as Damavandi (F16), Cross Leg Table (F14), and Modified Rosenbrock (F25) have intricate landscapes that mislead optimization efforts with deceptive gradients and rugged terrains, often leading algorithms to converge on local optima instead of the global optimum. These problems evaluate the robustness, adaptability, and intelligence of algorithms, challenging the ability of algorithms to dynamically adjust search strategies in response to the changing topography of the problem space. Success depends on balancing search intensity and diversity, diving deeply into promising regions while maintaining broad sweeps to avoid pitfalls.

Table 2 shows the test set of primitive benchmark problems, comprising unimodal and multimodal problems with a wide range of characteristics and complexities.

Table 3 presents the performances of the algorithms across 25 primitive problems. ESO and LSHADE consistently achieved optimal or near-optimal means and low standard deviations, reaching success rates of 92% and 86%, respectively, for a tolerance of 10E-08. The DE and GSKA algorithms also yielded strong results, although they did not match the performance of the ESO and LSHADE algorithms. Their performance was particularly notable in unimodal functions, although they showed some variability in more complex multimodal scenarios. MFO and QSA demonstrated comparable competence, especially for functions F1, F2, and F5, where they achieved near-optimal solutions. Conversely, the FLA and FPA showed the poorest performance among the tested algorithms, exhibiting greater variability in their solutions and larger distances from the optimum. This was particularly evident in functions F3 and F16, where they showed significant deviations from the optimal solutions. PSO also demonstrated inconsistent performance, struggling notably with functions F3 and F4, where it showed some of the largest deviations from the optimal solutions in the test set.
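A success rate of this kind can be computed as the fraction of results that land within the tolerance of the known optimum. The sketch below assumes the tolerance is 1e-8 and that each entry in `results` is the final objective value of one run. The text does not say whether the rate is taken over runs or over problems, and the sample values are invented for illustration.

```python
from typing import Sequence


def success_rate(results: Sequence[float], optimum: float,
                 tol: float = 1e-8) -> float:
    """Fraction of results whose distance to the optimum is within tol."""
    hits = sum(1 for r in results if abs(r - optimum) <= tol)
    return hits / len(results)


# Invented final values of four runs on a problem whose optimum is 0.
rate = success_rate([0.0, 1e-9, 1e-3, 5e-9], optimum=0.0)
```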

Figure 3 presents the convergence curves of the algorithms across primitive problems. The ESO algorithm demonstrates effective convergence in most of the problems and maintains the lowest function fitness, highlighting its efficiency in exploring and exploiting the solution space. GSKA, LSHADE, and QSA also show strong performance. However, MFO and DE exhibit significant performance variability across different functions.

Benchmark functions in their basic form can lead to misleading performance assessments, particularly due to center bias, which is a common issue where algorithms tend to concentrate their search near the coordinate origin.

Table 4 presents transformed benchmark functions, incorporating rotations and shifts to address this limitation. These transformations move optima away from the center and introduce variable interdependencies through orthogonal matrix operations, creating more challenging and realistic test scenarios that better reflect real-world optimization problems. Several studies have shown that algorithms that perform well on basic functions often struggle with these transformed variants, revealing hidden weaknesses in their search mechanisms and potential center bias issues that could impact their practical applications.
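The shift and rotation transformations mentioned above are commonly written as g(x) = f(M(x − o)), where o is the new optimum location and M is an orthogonal matrix. The sketch below applies this to a two-dimensional ill-conditioned quadratic. The base function, the shift vector, and the rotation angle are illustrative choices and are not taken from Table 4.

```python
import math
from typing import Callable, List

Vector = List[float]


def rotation_2d(theta: float) -> List[Vector]:
    """Orthogonal 2 x 2 rotation matrix for the angle theta (radians)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]


def shifted_rotated(f: Callable[[Vector], float], shift: Vector,
                    rotation: List[Vector]) -> Callable[[Vector], float]:
    """Return g(x) = f(M (x - o)) for shift o and orthogonal matrix M."""
    def g(x: Vector) -> float:
        z = [xi - oi for xi, oi in zip(x, shift)]            # move the optimum
        y = [sum(m * zk for m, zk in zip(row, z)) for row in rotation]
        return f(y)                                          # couple the variables
    return g


def elliptic(x: Vector) -> float:
    """Separable base function (assumption): x0^2 + 10 * x1^2."""
    return x[0] ** 2 + 10.0 * x[1] ** 2


shift = [1.0, -2.0]
g = shifted_rotated(elliptic, shift, rotation_2d(math.pi / 4))
value_at_origin = g([0.0, 0.0])      # the origin is no longer optimal
value_at_shift = g(shift)            # the optimum now sits at the shift
```

After the rotation the two variables interact, so a coordinate-wise search no longer sees a separable problem, and an algorithm that drifts toward the origin gains no advantage.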

The results in Table 5 reveal differential performance patterns across the shifted and rotated functions (F26–F45). ESO and LSHADE maintained their optimization capabilities, with ESO achieving exceptional precision in functions such as F26, F31, F35, F39, and F40. However, several algorithms exhibited significant performance degradation when confronted with these transformed variants. The FLA and PSO struggled considerably, as evidenced by substantial deviations in F31 (1.1 × 10^3) and F40 (1.8 × 10^2), respectively. DE and ALO showed intermediate performance but lacked consistency, particularly in challenging cases such as F44, where DE reached 2.2 × 10^2. GSKA emerged as a notably resilient algorithm, maintaining strong performance across several transformed functions, especially F30 and F39 (distances of 3.2 × 10^−65 and 1.8 × 10^−21). These results underscore the significant impact of rotational and shift transformations on optimization algorithm performance, highlighting the importance of developing algorithms with invariance to such transformations. This pattern suggests that rotation and shift transformations substantially increase the complexity of the optimization landscape, challenging algorithms that rely heavily on coordinate-wise optimization strategies and possibly exposing design biases, as reported previously [18].

Similarly, Figure 4 shows the convergence curves of the algorithms for shifted and rotated problems. ESO and LSHADE consistently demonstrated rapid convergence to the lowest function fitness, indicating efficiency in finding optimal solutions. Conversely, DE, MFO, and PSO displayed significant performance variability across different functions, showing generally slower or poorer convergence.

Internal Parameter Behavior

Analysis across benchmark functions (F5, F9, F16, F26, F31, and F42) reveals distinct trends in the behaviors of the three main internal parameters of the ESO algorithm, namely field resistance (R), field conductivity, and field intensity (I), as shown in Figure 5. The behavior of R changes with the evolution of the problem’s landscape, describing the diversity of solutions at each iteration. Conversely, the field conductivity is complementary and reactive to R, controlling the transition from exploration to exploitation. As R decreases, the field conductivity adjusts to favor exploitation, focusing on the promising regions of the search space. The field intensity generally follows a decreasing pattern over iterations but can also increase when sudden changes in R and the field conductivity occur. This keeps the search agents responsive to the landscape, allowing for both steady convergence and rapid adjustment to new promising areas. The progression in the global best solution is a direct result of the interplay between R, the field conductivity, and I. This adaptability enables the ESO algorithm to effectively navigate complex search spaces, achieving consistent improvements toward the global optimum.

3.2. CEC 2022 SOBC Benchmark Problems

The CEC SOBC 2022 suite, detailed in Table 6, is designed to rigorously test optimization algorithms in demanding scenarios using transformations such as rotations and shifts in the optimum [27]. These elements are critical for examining an algorithm’s robustness and adaptability in nonlinear high-dimensional search spaces. Additionally, the suite features hybrid and composite functions that amalgamate various problem types into a single test, increasing computational demands and complexity. This thorough evaluation not only tests the algorithm’s ability to manage intricate optimization tasks but also provides deep insights into its applicability and limitations under challenging conditions.

In Table 7, the results for optimizing the CEC SOBC 2022 competition problems reveal distinct performance patterns across different dimensionalities. For D = 10, ESO and LSHADE demonstrated superior performance, with ESO achieving optimal or near-optimal results in several functions, particularly F46, F48, F54, and F56 (distances of 0.0 × 10^0).

For D = 20, while most algorithms experienced performance degradation, ESO and LSHADE maintained relative stability. ESO particularly excelled in F46, F48, and F56 (distance of 0.0 × 10^0), although it showed increased sensitivity in F51, where the distance rose from 2.5 × 10^4 at D = 10 to 6.7 × 10^4 at D = 20. LSHADE demonstrated robust scalability, maintaining consistent performance across dimensions, notably for F46, F48, and F56 (distances of 0.0 × 10^0).

The remaining algorithms showed significant performance deterioration with increased dimensionality. This was particularly evident in the results of GSKA for F51, where the distance increased dramatically from 4.5 × 10^4 at D = 10 to 2.7 × 10^7 at D = 20. Similar degradation patterns were observed in FLA and PSO, indicating their limited ability to handle higher-dimensional search spaces effectively. Notably, specific functions such as F49 and F55 proved to be challenging across all algorithms and dimensions, suggesting inherent complexity in these problem landscapes that warrants further investigation for algorithm improvement.

3.3. Real-World Bound Constrained Optimization Problems

While artificial benchmark functions are commonly employed to assess the performance and accuracy of metaheuristic algorithms, such synthetic problems often embed unrealistic properties that may not be representative of real-world scenarios. As a result, evaluations can lead to overestimations or underestimations of the true potential of an algorithm when it is applied outside of controlled test conditions. To address this gap and provide a more precise assessment, we submit the ESO algorithm and its comparative metaheuristics to the challenge of solving four diverse and complex problems from real-world engineering and industrial domains.

3.3.1. Lennard–Jones Potential Problem

The Lennard–Jones potential problem is a fundamental challenge in molecular physics, which focuses on determining the optimal spatial configuration of atoms that minimizes the total potential energy of the system. For a 15-molecule system, this represents a 45-dimensional optimization problem (3 spatial coordinates per molecule), with a theoretical minimum potential that corresponds to the most stable molecular configuration.

The objective function sums the pairwise interaction potentials between all molecules as $V(r_{ij}) = 4\epsilon\left[\left(\sigma/r_{ij}\right)^{12} - \left(\sigma/r_{ij}\right)^{6}\right]$, where $r_{ij}$ is the distance between molecules $i$ and $j$, $\epsilon$ is the depth of the potential well, and $\sigma$ is the distance at which the potential becomes zero. The optimization aims to minimize the total potential energy $E = \sum_{i<j} V(r_{ij})$, which is subject to geometric constraints. The problem’s complexity stems from its highly multimodal nature, featuring numerous local minima corresponding to different stable configurations. The global minimum represents the most energetically favorable molecular arrangement, making this a valuable benchmark for testing the ability of optimization algorithms to navigate complex energy landscapes. The complete implementation of the problem can be found in [68,69].
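As an illustration of the objective described above, the following minimal sketch evaluates a pairwise Lennard–Jones energy for a flat coordinate vector (three coordinates per particle). It assumes the standard 12-6 form with well depth `epsilon` and zero-crossing distance `sigma`; the function name `lennard_jones_energy` and the default values of 1.0 are illustrative choices and are not taken from the referenced implementation.

```python
import math
from itertools import combinations


def lennard_jones_energy(coords, epsilon=1.0, sigma=1.0):
    """Total pairwise 12-6 Lennard-Jones energy.

    coords is a flat sequence [x1, y1, z1, x2, y2, z2, ...].
    epsilon and sigma are assumed (illustrative) potential parameters.
    """
    if len(coords) % 3 != 0:
        raise ValueError("coords must hold three values per particle")
    points = [coords[i:i + 3] for i in range(0, len(coords), 3)]
    total = 0.0
    for p, q in combinations(points, 2):
        r = math.dist(p, q)          # Euclidean distance between two particles
        sr6 = (sigma / r) ** 6       # (sigma / r)^6, reused for the 12th power
        total += 4.0 * epsilon * (sr6 * sr6 - sr6)
    return total


# Two particles at the pair equilibrium distance 2^(1/6) * sigma:
# the energy is approximately -epsilon.
r_min = 2.0 ** (1.0 / 6.0)
print(lennard_jones_energy([0.0, 0.0, 0.0, r_min, 0.0, 0.0]))
```

Coincident particles (zero distance) would cause a division by zero in this sketch; a practical objective would need to guard against that case or penalize it.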

In Table 8, the results for the Lennard–Jones potential optimization problem with 15 particles reveal distinct performance patterns among the tested algorithms. ESO, HS, MFO, PSO, and QSA converged to the known optimal value of −9.103852. This agreement across multiple algorithms supports the robustness of these methods for this molecular configuration problem.

FPA, FLA, and LSHADE demonstrated near-optimal performance, achieving values of −9.091091, −9.094731, and −9.098313, respectively, with deviations of less than 0.15% from the global optimum. However, other algorithms struggled significantly, with ALO showing the poorest performance (−5.837872) followed by GSKA (−6.671860) and DE (−8.219488), suggesting that these methods may require modifications to better handle this specific type of molecular optimization problem.

3.3.2. Tersoff Potential Problem—Si(B) Model

The Tersoff Potential Problem for silicon, specifically the Si(B) parameterization, focuses on determining the minimum energy configuration of silicon atoms in a molecular cluster. This problem models covalent bonding interactions with a sophisticated three-body potential function that accounts for bond order and angular dependencies.

The optimization objective represents the total potential energy, where each atomic contribution includes the following:

Two-body repulsive and attractive terms.

Bond-order term incorporating angular effects.

Cutoff function for limiting the interaction range.

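The total potential is the sum of the individual potentials of atoms, $E = \sum_{i} E_i$ with $E_i = \frac{1}{2}\sum_{j \neq i} f_c(r_{ij})\left[V_R(r_{ij}) - B_{ij} V_A(r_{ij})\right]$, where $r_{ij}$ is the distance between atoms $i$ and $j$, $V_R$ is a repulsive term, $V_A$ is an attractive term, $f_c$ is a switching function, and $B_{ij}$ is a many-body term that depends on the position of the atoms $i$ and $j$. The complete implementation of the problem can be found in [69].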

In Table 9, the results for the Tersoff potential Si(B) model optimization reveal that MFO achieves the best performance, with a minimum value of −44.074326, followed by PSO (−43.129095) and the FPA (−42.888543). LSHADE, ESO, and QSA also showed competitive performance, with values of −42.698791 and −42.192469 reported for this group, demonstrating strong capabilities in handling this complex molecular potential optimization. FLA and HS produced slightly higher values of −41.701543 and −40.802206, respectively. Other algorithms struggled significantly with this problem, particularly DE and GSKA, which only reached values of −30.258289 and −32.093009, respectively, indicating substantial difficulty in navigating this specific potential energy landscape. The spread is large, with nearly 14 energy units between the best and worst results.

3.3.3. Spread Spectrum Radar Polyphase Code Design Problem

The Spread Spectrum Radar Polyphase Code Design Problem represents a critical optimization challenge in radar signal processing. The fundamental objective is to design optimal polyphase codes that enable effective radar pulse compression while minimizing undesirable side lobes in the compressed signal output. The problem is structured as a global minimization task of a maximum function. The objective function seeks to minimize the maximum side-lobe level across all possible phase combinations as

, where

. Here,

represents the vector of phase variables

,

is the phase accumulation index, and

is the normalized autocorrelation. The optimization space consists of 20 phase variables, each constrained within the interval [0, 2π]. This creates a continuous search space with complex periodic behaviors. The objective function exhibits multiple local minima, making it particularly challenging for optimization algorithms to locate the global optimum. The complete implementation of the problem can be found in [

69].

In Table 10, the results for the Spread Spectrum Radar Polyphase Code Design Problem demonstrate that the ESO achieves the best performance, with a minimum cost value of 1.980246, followed by LSHADE (2.433395) and ALO (2.448449). These results indicate that the ESO is particularly capable of optimizing the phase sequences for radar signal design.

The FLA and FPA showed similar performance levels, reaching cost values of 2.609190 and 2.623724, respectively, demonstrating moderate effectiveness in this specialized optimization task. However, the GSKA encountered significant challenges, producing the highest cost value of 3.562859, followed by DE with 3.398476 and HS with 3.279291, suggesting that these algorithms struggle with the specific constraints and complexity of radar code design.

3.3.4. Circular Antenna Array Optimization Problem

The circular antenna array optimization problem addresses the design of antenna arrays arranged in a circular configuration to achieve optimal radiation patterns. This problem has significant applications in radar systems, mobile communications, and satellite technology. The array factor ($AF$) for a circular antenna array with $N$ elements is expressed as $AF(\phi) = \sum_{n=1}^{N} I_n \exp\left(j\left[kr\left(\cos(\phi - \phi_{ang}^{n}) - \cos(\phi_0 - \phi_{ang}^{n})\right) + \beta_n\right]\right)$, where $\phi$ represents the azimuth angle in the x–y plane, $\phi_{ang}^{n} = 2\pi(n-1)/N$ is the angular position of the n-th element, $I_n$ denotes the amplitude excitation of the n-th element, $k$ is the wavenumber, $r$ defines the radius of the circle, $\phi_0$ indicates the direction of maximum radiation, $\beta_n$ is the phase excitation of the n-th element, and $N$ is the total number of array elements. The optimization aims to minimize a composite objective $OF = |AF(\phi_{sll})| / |AF_{max}| + 1/DIR + |\phi_0 - \phi_{des}| + \sum_{k=1}^{num} |AF(\phi_k)|$, where $\phi_{sll}$ represents the angle at which the maximum side lobe occurs, $AF_{max}$ is the peak value of the array factor, $DIR$ denotes the directivity of the array pattern, $\phi_{des}$ is the desired maximum radiation direction, and $\phi_k$ specifies the k-th of $num$ null control directions. The optimization is constrained to current amplitudes ($I_n$) [0.2, 1.0] and phase excitation ($\beta_n$) [

]. The complete implementation of the problem can be found in [

69].
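To make the role of the amplitude and phase excitations more concrete, the sketch below evaluates an array factor for a uniform circular array. It is an illustration under stated assumptions rather than the referenced implementation: the elements are assumed to sit at angles 2π(n−1)/N on the circle, the product of wavenumber and radius is passed as a single hypothetical parameter `kr`, and the function name `array_factor` is illustrative.

```python
import cmath
import math


def array_factor(phi, amplitudes, phases, kr, phi0=0.0):
    """Complex array factor of a uniform circular array at azimuth phi.

    amplitudes and phases are the per-element excitations; kr is the
    (assumed) product of wavenumber and circle radius; phi0 is the
    direction of maximum radiation. All angles are in radians.
    """
    n_elem = len(amplitudes)
    total = 0j
    for n, (amp, beta) in enumerate(zip(amplitudes, phases)):
        phi_n = 2.0 * math.pi * n / n_elem   # assumed element position on the circle
        arg = kr * (math.cos(phi - phi_n) - math.cos(phi0 - phi_n)) + beta
        total += amp * cmath.exp(1j * arg)
    return total


amps = [0.2, 0.5, 1.0, 0.7]
betas = [0.0, 0.0, 0.0, 0.0]
# With zero phase excitation, all elements add in phase at phi = phi0,
# so the magnitude equals the sum of the amplitudes.
print(abs(array_factor(0.3, amps, betas, kr=4.0, phi0=0.3)))
```

An optimizer would treat the amplitudes and phases as decision variables and score each candidate from samples of this pattern, for example its side-lobe level and null depths.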

In Table 11, the results for the circular antenna array optimization problem demonstrate that the ESO achieved the best performance by a wide margin, with a cost value of −11.479936. HS was the second-best performer, with a cost of −7.211993, followed by LSHADE at −6.009528.

DE and the GSKA achieved moderate performance levels, with costs of −5.199967 and −4.743778, respectively, whereas the remaining algorithms clustered around similar performance levels between −4.3 and −4.4. The FPA and PSO showed the least effective performance, both reaching a cost of −3.974214, indicating difficulty in optimizing the antenna array parameters.

4. Discussion

4.1. Statistical Performance Evaluation

Two statistical tests were used to evaluate the optimization results. A Bayesian signed-rank test was applied to identify differences in mean fitness between populations, providing a more robust and comprehensive analysis than traditional nonparametric tests do [70]. Furthermore, a critical differences (CD) analysis via Friedman’s test with the Nemenyi post hoc test was conducted to compute the individual rankings and the critical differences [71]. The statistical analysis was conducted on 11 populations comprising 40,700 paired samples divided into 3 groups of problems (Figure 6A–C) and the total set of problems (Figure 6D). The familywise significance level for the tests was set to alpha = 0.05. The null hypothesis, which posited that all populations were normally distributed, was rejected (p < 0.001) for every population and set of problems. Because normality could not be assumed, the median (MD) and median absolute deviation (MAD) were reported for each population. The populations were assessed pairwise to determine whether they were smaller, equal, or larger. A decision was made in favor of one of these outcomes if the estimated posterior probability was at least 1 − alpha = 0.95. The effect size was used to define the region of practical equivalence (ROPE), dynamically calculated around the median as

, where

is the effect size (Akinshin’s gamma) [

71].
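The ranking step behind a critical difference analysis can be sketched as follows. This is a minimal illustration, not the analysis pipeline used in this study: the helper names `average_ranks` and `nemenyi_cd` are hypothetical, the score matrix is made-up data, and the critical value `q_alpha` must be supplied by the user from a studentized-range table (2.343 is the commonly tabulated value for three algorithms at alpha = 0.05).

```python
import math


def average_ranks(scores):
    """Mean rank of each algorithm; scores[p][a] is the result of
    algorithm a on problem p (lower is better). Ties share the mean rank."""
    n_alg = len(scores[0])
    rank_sums = [0.0] * n_alg
    for row in scores:
        order = sorted(range(n_alg), key=lambda a: row[a])
        i = 0
        while i < n_alg:
            j = i
            # Extend j over the run of algorithms tied with position i.
            while j + 1 < n_alg and row[order[j + 1]] == row[order[i]]:
                j += 1
            shared = (i + j) / 2.0 + 1.0   # mean of the 1-based ranks i+1 .. j+1
            for t in range(i, j + 1):
                rank_sums[order[t]] += shared
            i = j + 1
    return [s / len(scores) for s in rank_sums]


def nemenyi_cd(n_alg, n_problems, q_alpha):
    """Critical difference between mean ranks for the Nemenyi post hoc test."""
    return q_alpha * math.sqrt(n_alg * (n_alg + 1) / (6.0 * n_problems))


# Illustrative data only: 4 problems (rows) x 3 algorithms (columns).
scores = [
    [1.0, 2.0, 3.0],
    [1.0, 3.0, 2.0],
    [2.0, 2.0, 5.0],
    [0.5, 1.5, 1.0],
]
print(average_ranks(scores))                 # [1.125, 2.375, 2.5]
print(nemenyi_cd(3, len(scores), q_alpha=2.343))
```

Two algorithms are declared critically different when their mean ranks differ by more than the returned critical difference; with so few problems in this toy example the threshold is wide, which is why a large problem set is needed for the test to discriminate.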

Based on the results presented, the following conclusions can be drawn about the performance of the ESO algorithm compared to that of the other algorithms:

- (a)

For the primitive problems (

Figure 6A,A1), ESO and LSHADE exhibited the best performances, with minimal differences between them. The pairwise comparison in

Figure 6A clearly shows that the ESO has superior performance.

- (b)

For the shifted and rotated problems (

Figure 6B,B1), the ESO and LSHADE algorithms again achieved the best performances, with a more noticeable difference between their results and those of the other algorithms. Critical differences were found between the performances of the PSO, FLA, DE, and MFO algorithms and those of the other methods. The pairwise comparison in

Figure 6B also reveals that the ESO has superior performance.

- (c)

In the CEC SOBC 2022 problems (Figure 6C,C1), LSHADE leads, followed by ESO. The pairwise comparison revealed that LSHADE performed slightly better.

- (d)

Across the three sets of problems combined, the ESO (4.06) ranks first, followed by LSHADE (4.33) and GSKA (5.75) (Figure 6D,D1).

These outcomes emphasize the ability of the ESO to adapt and excel across a broad range of optimization challenges, demonstrating its potential as a versatile and effective tool for solving complex problems under various conditions.

4.2. Computational Overhead

The computational cost of the ESO algorithm can be analyzed via ‘Big O’ notation, a well-established notation in computer science, to provide a theoretical estimate of its complexity. This analysis helps in understanding how the algorithm scales with respect to various parameters under typical usage scenarios. The asymptotic complexity of the ESO algorithm is heavily influenced by the number of iterations, the number of repetitions, and the complexity of the objective function. The dimensionality of the problem also plays a significant role, especially in how vector and matrix calculations are handled within the algorithm. Thus, the computational load of the algorithm presented herein can be theoretically estimated as follows:

- (a)

Lightning initialization: Initializing each lightning event involves at least one evaluation of the objective function and operations over a vector of size $D$. The complexity of this part is $O(N \cdot (D + f))$, where $N$ is the number of lightning events, $D$ is the problem dimensionality, and $f$ represents the complexity of evaluating the objective function.

- (b)

Main loop:

Identifying ionized areas: Sorting lightning events based on their fitness has a complexity of $O(N \log N)$.

Field intensity adjustment and resistance calculation: These calculations have a complexity of $O(N \cdot D)$ since they involve computing the standard deviation and the peak-to-peak difference across the lightning positions.

Lightning propagation: Each lightning event may adjust its position based on the ionized areas, potentially requiring another evaluation of the objective function, $O(N \cdot f)$.

- (c)

Total function evaluations: Each lightning strike evaluates the objective function at least once per iteration, $O(T \cdot N \cdot f)$, where $T$ is the number of iterations.

Combining all these components, the overall computational complexity of the ESO algorithm can be estimated as in Equation (15):

$O\left(T \cdot N \cdot (\log N + D + f)\right)$ (15)

Additionally, to evaluate the computational complexity of the algorithm in terms of execution time, we applied the CEC SOBC 2022 evaluation procedure [

43]. The problem in Equation (16) executes a loop with 200,000 iterations, where, in each step, the value of the variable x is modified through a series of mathematical operations.
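The timing loop described above can be sketched as follows. The sequence of operations and the starting value 0.55 are those commonly given in CEC technical reports for measuring the baseline time, and they are assumed here to correspond to Equation (16). The function names `time_baseline_loop` and `overhead_ratio` are hypothetical, and the ratio (T2 − T1)/T0 is the usual CEC convention for reporting overhead rather than something stated in this section.

```python
import math
import time


def time_baseline_loop(iterations=200_000):
    """Run the assumed CEC-style arithmetic loop; return (seconds, final x)."""
    x = 0.55
    start = time.perf_counter()
    for _ in range(iterations):
        x = x + x
        x = x / 2
        x = x * x
        x = math.sqrt(x)
        # x shrinks towards 0.0 and eventually underflows; math.log(0.0)
        # raises in Python, so reproduce the IEEE result (-inf) explicitly.
        x = math.log(x) if x > 0.0 else float("-inf")
        x = math.exp(x)
        x = x / (x + 2)
    return time.perf_counter() - start, x


def overhead_ratio(t0, t1, t2):
    """Assumed CEC-style overhead: extra algorithm time relative to the
    baseline loop, where t1 times the bare function evaluations and t2
    times the same number of evaluations inside the algorithm."""
    return (t2 - t1) / t0


t0, final_x = time_baseline_loop()
print(f"baseline time: {t0:.4f} s, final x: {final_x}")
```

Normalizing by the baseline time makes the reported overhead less dependent on the speed of the machine on which the experiments were run.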

T0 represents the time calculated by running the test problem in Equation (16). Meanwhile, T1 represents the time required to execute 200,000 independent evaluations of the benchmark function F51 in D dimensions. T2, on the other hand, refers to the average time taken to execute the same number of evaluations of F51 within the framework of the algorithms in five runs, also in D dimensions. The analysis of the computational complexity across various optimization algorithms shown in Table 12 reveals significant differences in performance between algorithms and dimensions. As the dimension increases, all algorithms show longer execution times, underscoring the added cost of higher dimensions. Notably, the ESO demonstrates lower overheads, suggesting greater efficiency in handling higher-dimensional tasks. All the experiments were carried out in the following computational environment:

4.3. Comparison with Gradient-Based Methods

While gradient-based optimization methods remain fundamental in mathematical programming, particularly for problems with well-defined structures, the ESO algorithm offers distinct characteristics that make it suitable for specific optimization scenarios. A careful examination of both approaches reveals their complementary nature and specific domains of applicability.

Gradient-based methods excel in problems with smooth, differentiable objective functions, offering rapid convergence and mathematical guarantees of optimality when applied to convex optimization problems. These methods are particularly efficient in low- to medium-dimensional problems where gradient information is analytically available. Furthermore, for problems with clear mathematical formulations, such as linear programming or convex quadratic programming, gradient-based methods provide robust theoretical convergence properties.

In contrast, the ESO demonstrates advantages in several challenging scenarios where gradient-based methods may struggle. First, the field resistance mechanism of the ESO enables the effective navigation of multimodal landscapes, allowing it to escape from local optima through its dynamic exploration–exploitation balance. Second, since the ESO operates solely on function evaluations, it can handle nondifferentiable objectives and discontinuous functions without modification. Third, the algorithm’s field intensity and conductivity parameters provide adaptive search capabilities that maintain optimization momentum in regions where gradient information becomes unreliable or vanishes, such as plateau regions or near-flat landscapes.

This advantage becomes particularly evident in high-dimensional problems where gradient computation through numerical differentiation becomes computationally expensive or impractical. While gradient-based methods that rely on finite differences need on the order of D additional objective evaluations to approximate the D partial derivatives at every step, the number of objective evaluations per ESO iteration is determined by the number of lightning events rather than by the dimensionality, making it potentially more efficient for high-dimensional optimization tasks.
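The evaluation cost of numerical differentiation can be illustrated with a small sketch. It uses a plain forward-difference scheme on a sphere function; the names `CountingObjective` and `forward_difference_gradient` are hypothetical helpers introduced only for this example, and the step size is an arbitrary choice.

```python
class CountingObjective:
    """Wraps an objective function and counts how often it is evaluated."""

    def __init__(self, func):
        self.func = func
        self.calls = 0

    def __call__(self, x):
        self.calls += 1
        return self.func(x)


def forward_difference_gradient(func, x, step=1e-6):
    """Approximate the gradient with one extra evaluation per dimension."""
    base = func(x)
    grad = []
    for i in range(len(x)):
        shifted = list(x)
        shifted[i] += step
        grad.append((func(shifted) - base) / step)
    return grad


def sphere(x):
    return sum(v * v for v in x)


objective = CountingObjective(sphere)
gradient = forward_difference_gradient(objective, [1.0, 2.0, 3.0])
print(gradient)          # close to [2, 4, 6]
print(objective.calls)   # 4 evaluations: one base point plus one per dimension
```

For a D-dimensional problem this scheme costs D + 1 evaluations per gradient, so a single gradient step in 100 dimensions already uses about as many evaluations as one iteration of a population of 100 candidate solutions.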

These considerations suggest that the choice between the ESO and gradient-based methods should be guided by problem characteristics rather than treating either approach as universally superior. The ESO is particularly suited for complex optimization problems with limited mathematical structure, multimodal landscapes, high dimensionality, or where gradient information is unreliable or computationally expensive to obtain. Conversely, gradient-based methods remain the preferred choice for well-structured low-dimensional convex optimization problems where analytical derivatives are available.

5. Conclusions

In this research, the electrical storm optimization (ESO) algorithm is presented as a novel population-based metaheuristic inspired by the dynamic behavior observed in electrical storms. The algorithm operates primarily through three parameters that are dynamically adjusted according to the optimized landscape: field resistance, field conductivity, and field intensity. These parameters are crucial because they allow the algorithm to transition between exploration and exploitation phases effectively. Field resistance is utilized to measure the dispersion of solutions within the search space, providing insights that guide the algorithm’s adjustments based on the problem’s topology. Field conductivity dynamically modulates the algorithm’s adaptability to the optimization landscape, enabling it to respond sensitively to changes in field resistance and facilitating the fine-tuning of the search process. Field intensity controls the phases of the algorithmic process, facilitating strategic shifts between exploration and exploitation.

The performance of the ESO algorithm was thoroughly evaluated across various sets of problems. It was particularly effective in addressing 25 primitive problems characterized by complex landscapes, where it demonstrated high precision and stability. The algorithm also displayed high capabilities in resolving 20 shifted and rotated problems. It was further evaluated on 12 advanced problems from the CEC 2022 suite. Statistical analysis, including the Bayesian signed-rank test and critical difference (CD) analysis via Friedman’s test with the Nemenyi post hoc test, indicated that the performance of the ESO was significantly better than that of several established methods, ranking first overall, closely followed by LSHADE. However, it was observed that the precision of the ESO slightly diminished in monotonic optimization landscapes, indicating a potential area for further refinement.

In practical applications, the ESO demonstrated effectiveness in solving four complex engineering problems involving molecular potentials, radar code design, and antenna array design, particularly those characterized by nonconvex search spaces and multiple local optima. While classical optimization methods such as gradient-based approaches remain superior for problems with clear mathematical formulations and convex landscapes, the performance of the ESO in these four case studies suggests its utility for specific engineering scenarios where traditional methods may face limitations, such as problems with nondifferentiable objectives or deceptive local optima. These real-world implementations highlight the potential of the ESO as a complementary tool in the optimization toolbox, which is particularly suitable for complex problems where the objective function landscape is not well understood or where classical optimization methods may struggle to find global solutions.

Limitations and Future Works

The current implementation of the ESO presents several limitations that should be acknowledged. The basic constraint-handling mechanism using penalty functions may not be optimal for problems with complex feasibility landscapes. The algorithm’s formulation assumes continuous search spaces, which limits its effectiveness in discrete optimization problems. Experimental validation, while being comprehensive for static optimization, does not include dynamic optimization problems where the optimum changes over time, leaving this capability unexplored. The algorithm’s applicability to multiobjective problems currently relies on aggregating multiple objectives into a single function, which may limit the accuracy of the Pareto front approximation. Additionally, the dynamic parameter adaptation strategy might require more iterations to achieve convergence in extremely deceptive or monotonic landscapes.

Future research directions could address these limitations through several key developments. The advancement of constraint-handling mechanisms based on adaptive penalty functions or feasibility rules would enhance the algorithm’s ability to handle complex constraints. The algorithm could be extended to discrete optimization through appropriate modifications of the field parameters and branching mechanisms, while adaptation for dynamic optimization problems could be achieved by incorporating time-varying field properties. The framework could be reformulated for true multiobjective optimization without requiring objective aggregation. Additionally, enhancing the parameter adaptation mechanism would enable faster convergence in deceptive landscapes. The integration of local search strategies would improve performance in monotonic search spaces, and the development of hybrid versions would combine the ESO’s global exploration capabilities with problem-specific local search methods. These developments would significantly expand the algorithm’s applicability while addressing its current limitations.