Appendix A. Features of the Test-Data

The main data file used by the CustomGPT in our study is a specially crafted dataset designed to demonstrate the potential of sequence analysis in an educational context for teachers. It has been created based on the real log-data gathered during the DigiEfekt project in the 2021/2022 study year. Since the original data had some limitations (inhomogeneity, lack of the information about the context of interaction, and some technical issues), a new dataset has been artificially created to produce clear and understandable results that can effectively illustrate to teachers how sequence analysis can be applied in different dimensions and what insights they can potentially obtain from it.

The key components of this file include:

Student ID: A unique identifier for each student, allowing for the tracking of individual interaction patterns over time;

TimeCode: A timestamp indicating the exact date and time when each interaction occurred. This column is crucial for analyzing temporal patterns in Opiq usage;

Action: Describes the type of activity the student engaged in, such as watching videos, reading materials, completing exercises, or taking tests. This information is important for deriving typical sessions based on dominant activities and their order;

Score: The results of assessments, representing the correctness of students’ answers. Scores range from 0 to 100 as it is in the Opiq environment.

Three types of information of the data file have been used for sequence analysis:

Temporal patterns: The TimeCode column enables the examination of when students interact with the platform. By converting these timestamps into monthly or weekly periods, sequence analysis can be applied to identify patterns of engagement over time. In our case, the first sequence analysis script uses this information to track student activity over months, highlighting interaction trends and helping to identify periods of high or low activity.

Session patterns: the Action column provides detailed information on the types of interactions students have with the platform. By categorizing these actions and analyzing their sequences, teachers can see which activities are commonly performed together and identify typical sessions of interaction.

Test-taking patterns: the Score column is used to track student performance tests. By mapping these scores onto categorical labels (e.g., ‘Incorrect’, ‘Partly correct’, ‘Almost correct’, ‘Correct’), sequence analysis can reveal typical strategies that students use while taking tests.

Appendix B. Features of the Scripts for Sequence Analysis

The first step is translating the sequence data-processing workflow. In R, the sequence data is often being read, cleaned, and transformed using specific libraries like TraMineR. We adapted this process in Python using the pandas library, which offers comparable data manipulation capabilities to make it viable in the ChatGPT environment. Specifically, the CustomGPT read the sequence data from an original CSV file (that teachers potentially might obtain directly from the Opiq environment), ensured the data was in the correct format, and mapped categorical states to numeric values. The next step was replicating the optimal matching (OM) algorithm, a key feature of TraMineR used for measuring the dissimilarity between sequences. We implemented the OM distance calculation in Python, utilizing dynamic programming techniques as analogues of ones employed by TraMineR. This involved defining a substitution matrix to determine the cost of transforming one event into another and incorporating insertion and deletion costs. The scipy library’s functions were used in calculating pairwise distances between sequences and performing hierarchical clustering, akin to the functions provided by TraMineR’s clustering library.

Finally, we adapted the visualization provided by the ggplot2 package of TraMineR into Python using matplotlib. This involved creating histograms of the typical sequences. After adapting each component of the R script into Python, we ensured that the results gained using TraMineR package were replicated, making them accessible within a Python-based workflow for their application in a ChatGPT environment.

The following Python libraries were used to adapt the sequence analysis:

pandas: for data manipulation and analysis;

numpy: for numerical operations;

matplotlib.pyplot: for plotting and visualization;

scipy.cluster.hierarchy: for performing hierarchical clustering;

scipy.spatial.distance: for calculating pairwise distances between sequences.

The process of preparing the data for sequence analysis consists of several steps. The first step is to generate specific types of sequence files in a .csv format for further analysis. Initially, the main data file is read using the pandas library to extract and format necessary columns. For the first sequence file, the chatbot is focusing on capturing student interaction patterns over a series of months. This involved converting the ‘TimeCode’ column to a string format, extracting the month (6 months were available in the data-set as an example of a semester), and identifying unique student IDs. For each student, monthly interactions were recorded as either ‘I’ for interaction or ‘S’ for skip, resulting in a DataFrame that reflected each student’s sequence over time. This DataFrame was saved to the chatbot environment as sequence1.csv.

In creating the second sequence file, the emphasis was on session sequences. The process involved reading the same main data file and generating sequences of actions during each session. Each session consists of five actions—this limitation was implemented since the problem of analyzing sequences of different length is still open in an educational context. The possible actions are: ‘Reading’, ‘Gallery’, ‘Video’, ‘Exercise’ and ‘Test’. These sequences were converted to a numeric format using custom mapping for different actions and they were saved as sequence2.csv.

The third sequence file focused on analyzing test-taking patterns from the data. The data were read from the main file, and performance scores were mapped to categories like ‘Incorrect’ (score 0–49), ‘Partly correct (score 50–79)’, ‘Almost correct (score 80–99)’, and ‘Correct (score 100)’, accordingly. These labeled sequences were then transformed into a numeric form for optimal matching distance calculations. These processed data were saved as sequence3.csv.

Here is an overview of the three sequence analysis scripts currently available in the CustomGPT:

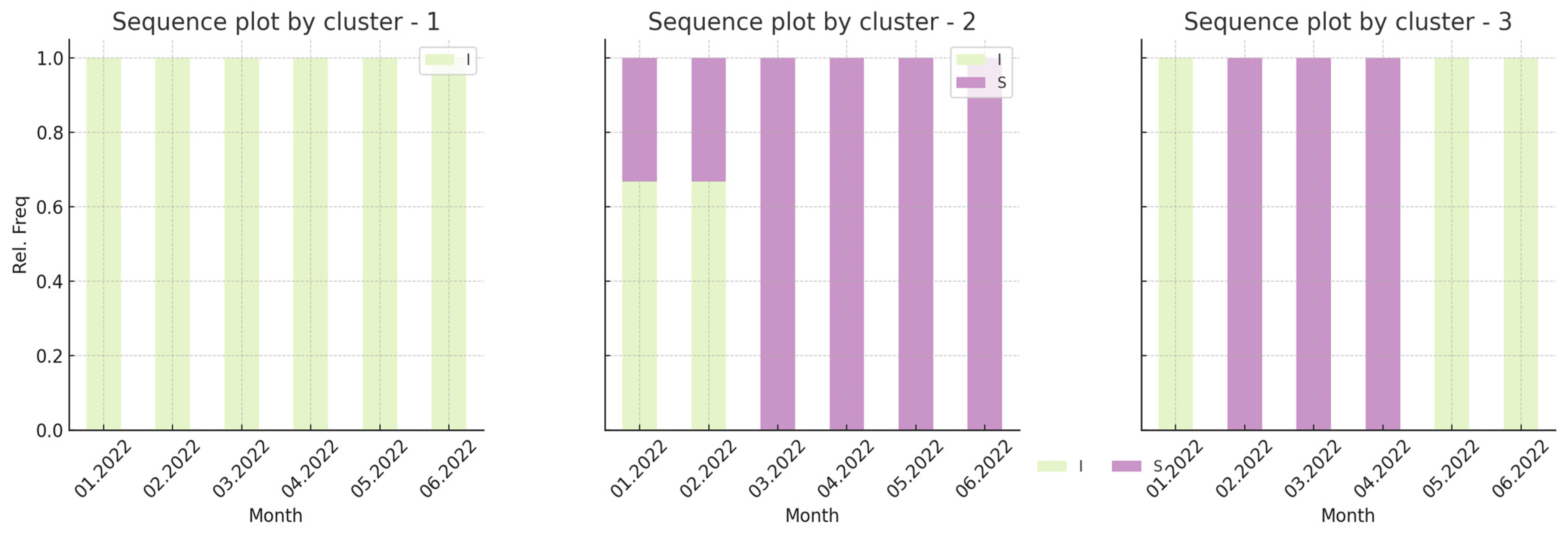

The first script is designed to analyze interaction patterns from the log data, focusing on sequences of student activities over time. The primary aim is to identify and visualize distinct patterns of student engagement throughout a semester. The script begins by reading a CSV file containing sequences of monthly interactions (marked as ‘I’ for interaction and ‘S’ for skip). These sequences are then converted into a numeric format for the computation of OM distances. Once the distances are computed, hierarchical clustering is applied to group students based on their interaction patterns. The resulting clusters are then visualized through state frequency histograms. Each cluster’s relative frequency of interaction is plotted by month, with the green color representing interaction and purple representing a skip. The educational goal of this analysis is in identifying potential dropouts, gaps in interaction, or periods of increased activity.

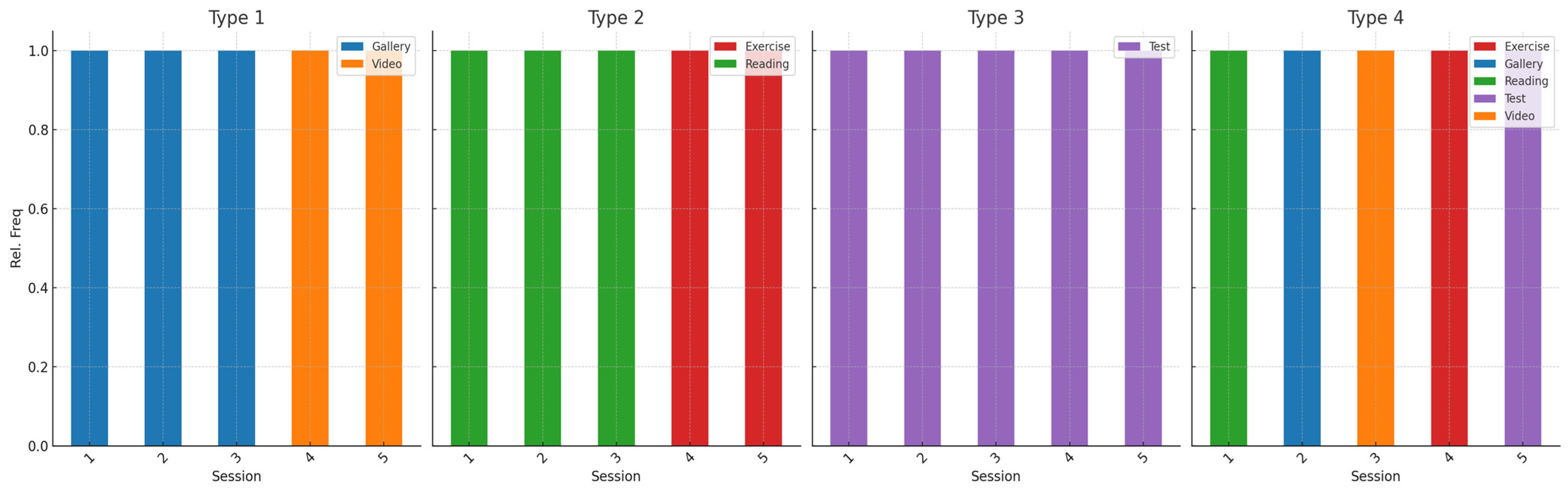

The second script takes a similar approach to analyzing educational log data but focuses on sequences of specific actions rather than general interactions. This script aims to provide a more granular view of student behavior by examining the types of actions performed during study sessions. In this script, the sequences of actions (e.g., watching videos, reading, and performing exercises) are read from a CSV file and converted into numeric format using an action mapping. The OM distance is again used to calculate the similarity between sequences, and hierarchical clustering is applied to group similar sequences together. A key difference in this script is the emphasis on visualizing the sequences with custom colors assigned to each type of action. This allows for a detailed representation of student behavior, highlighting which actions are most prevalent in each cluster. The visualizations include a separate legend for each cluster to make it more easily readable for teachers. The aim of this analysis is to provide teachers with an understanding of how typical sessions of interaction with Opiq look.

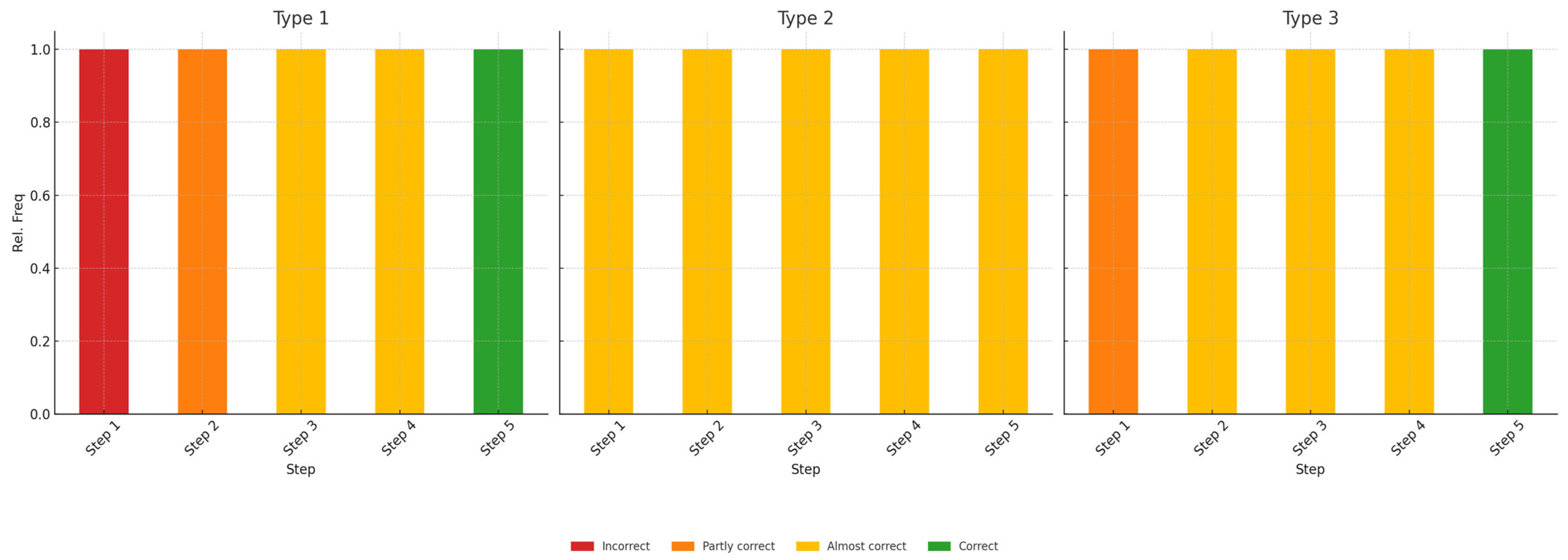

The third script extends the analysis further by focusing on sequences of test-scores, categorizing them into different levels of correctness. The script starts by reading sequences of scores from a CSV file and mapping these scores onto categorical labels, such as ‘Incorrect’, ‘Partly correct’, ‘Almost correct’, and ‘Correct’. These labeled sequences are then used to compute OM distances, followed by hierarchical clustering to group students based on their performance patterns. The resulting histograms display the relative frequency of each performance category within the clusters, providing an overview of trends of improvement of the result.

Script for deriving and analyzing sequences from an original log data file.

Figure A1.

Script for deriving and analyzing sequences from an original log data file.

Appendix C. Description of Various Analysis Options Available in the CustomGPT and Examples of Interpretation and Tips Provided by the CustomGPT

Since the main goal of the CustomGPT is to make data more accessible for teachers, visualizations of different patterns were provided along with the description and tips, both generated by AI. Below are the visualizations and an example of interpretation/tips provided by Custom GPT.

Each graph represents a sequence plot for one of three distinct clusters of students, showing how frequently students were interacting with the educational platform (“I” for interaction) versus being inactive (“S” for skip) in each month from January 2022 to June 2022 (taken as an example based on the original data). While the first and the third graphs show that all the students in these clusters interacted in every month (in case of the first cluster) or in January, May, and June (in case of the third cluster), the second cluster shows that the relative frequency of interacting during first two month is about 0.7.

Visualization of temporal patterns.

Figure A2.

Visualization of temporal patterns.

Interpretation and tips generated by the Custom GPT:

“In the first script, the graphs represent student interactions over several months, with colors indicating activity (green) and inactivity (purple). The legend helps readers understand that each bar corresponds to a month, and the height of the colored sections shows the relative frequency of interactions or inactivity. To interpret these graphs, we look for patterns such as consistent engagement, early drop-offs, or sporadic interactions. By identifying these patterns, we can provide targeted tips for each group of students based on their engagement trends.

Each graph represents a sequence plot for one of four distinct types of students, illustrating how frequently students engaged in different activities during each session. The relative frequency of each activity is represented on the y-axis, while the order of actions is shown on the x-axis. Each session conditionally consists of only five actions with relative frequency = 1.0 to avoid problems with sequences of unequal length and feedback received from teachers. In this case, the states of sequences represent different types of student activities during each session: Gallery viewing (blue), Video watching (orange), Reading (green), Exercise (red), and Taking Tests (purple). The data for this type of analysis were created to reproduce some distinguish patterns of interaction during the session: combining two activities in a particular order (in case of the first and the second clusters), focusing only on a specific activity during the whole session (the third cluster), and combining different types of interaction (the fourth cluster).

Visualization of sessions’ patterns.

Figure A3.

Visualization of sessions’ patterns.

Interpretation and tips generated by the CustomGPT:

“The second script focuses on the variety of activities students engage in during each session. The graphs use a distinct color for each activity (e.g., blue for “Gallery”, orange for “Video”, green for “Reading”, red for “Exercise”, and purple for “Test”). Each bar represents a session, and the height of each colored section indicates the frequency of the corresponding activity. To interpret these graphs, we analyze the sequence and frequency of activities within each session. This helps us understand student preferences and engagement levels with different types of activities.

The sequence plots in the third analysis illustrate distinct patterns of how students performed across five test attempts. The relative frequency of each performance category is represented on the y-axis, while the order of test attempts (steps) is shown on the x-axis. In this case, the states of the sequences represent different levels of the result: Incorrect (red), Partly correct (orange), Almost correct (yellow), and Correct (green). The data for this analysis were designed to reflect distinguishable patterns of performance improvement: moving progressively from incorrect to correct results over the five attempts (Type 1), consistently maintaining an “Almost correct” performance with no significant improvement (Type 2), and showing a steady improvement from partly correct to fully correct by the final attempt (Type 3).

Test-taking patterns.

Figure A4.

Test-taking patterns.

Interpretation and tips generated by the CustomGPT:

“The third script examines student performance over multiple test attempts. The graphs use colors to represent different performance categories (e.g., red for “Incorrect”, orange for “Partly correct”, yellow for “Almost correct”, and green for “Correct”). Each bar corresponds to a step in the sequence of test attempts, showing the progression of student performance. To interpret these graphs, we look for trends in performance improvement or consistency. This helps us identify students’ learning trajectories and provide appropriate support.

Appendix D. Requirements, Evaluation Criteria and Possibilities for CustomGPT Development Process

Table A1.

Stages and recommendations for CustomGPT development process.

Table A1.

Stages and recommendations for CustomGPT development process.

| Stage | Requirements | Evaluation Criteria | Possibilities |

|---|---|---|---|

| Educational log-data gathering and processing | Define the information to collect (e.g., actions and timestamps that may be of interest), determine granularity for adequate representation of the learning process | Are all important actions and timestamps, including omissions or subtle events, considered? Can the level of detail in the data be adjusted after collection for specific analytical tasks? Are there artifacts in the data, and if so, can they be neutralized during further analysis? | Ability to track micro-behaviors of students, including actions tied to specific content (e.g., reading, completing exercises, testing). Flexibility in log-file granularity—allowing a single log file to be used for analyses at different levels. |

| Ensure data protection and anonymity during collection, processing, and usage in CustomGPT | Does the system eliminate the possibility of data leakage at various stages of analysis? Are well-defined access levels provided for different users (teachers, administrators, etc.)? | A flexible anonymity system allows for sharing aggregated data for research or product improvements while maintaining participant anonymity. It also enables personalized information for a limited audience (e.g., teachers and parents). | |

| Define directions for analysis (e.g., activity trends, interaction strategies, test completion patterns), write analysis code, and test it in Python and R | Do the analysis results reflect trends that are easy to interpret and of interest to teachers? Do Python script results match those obtained in R, confirming accuracy? | Wide variability in analysis allows teachers to focus on what matters to them. The analysis can target students (class trends) or content (trends in studying a specific textbook) and can also be generalized or personalized. | |

| Visualizations | Define appropriate visualization methods for sequences based on analysis goals (state plot, distribution plot, etc.) | Do the graphs represent real data without distortion, ensuring accurate understanding of patterns? | Develop a dynamic visualization system that automatically selects the most appropriate type of graph (e.g., state plot for sequence analysis, distribution plot for frequency evaluation) based on input data and analysis goals. |

| Ensure intuitive visualizations—a color palette that allows clear differentiation of patterns; include a legend to define key visualization elements | Are the colors contrasting and easily distinguishable for different categories (e.g., actions, states, or test results)? Can teachers or analysts without technical training understand the visualization results? | Create a visualization interface where teachers or analysts can choose color schemes and customize legends to simplify pattern understanding, allowing individualization of data representation for various audiences (e.g., teachers, parents, researchers). | |

| Interpretation | Provide CustomGPT instructions to train the model for understanding results, highlighting key trends (e.g., high relative frequency of a specific action during a session) and presenting them in interpretations | Are the interpretations consistently aligned with the highlighted trends across multiple scenarios or datasets? Can the model adapt to variations in input data (e.g., different formats, levels of detail) without losing interpretation quality? | Interpretation might be based not only on developers’ instructions, but also on users’ interaction, which means the focus of interpretation might be more personalized |

| Ensure practice-oriented interpretation of results for teachers, where numerical metrics are translated into insights useful for understanding the learning process | Do the insights align with teachers’ needs and can teachers implement changes or interventions based on the insights provided? Is there a feedback mechanism for teachers to refine the interpretation process and improve the relevance of insights over time? | Further training of the chatbot could focus on highlighting key metrics that are of practical interest to teachers. For example, attention could be drawn to the absence of specific actions in patterns or, conversely, the dominance of a certain action at the beginning, middle, or end of a session. | |

| Tips | Generated tips should be based on results in each particular case and their interpretation, so Interpretation + Tips become related to each other | Are the tips closely aligned with the specific results and interpretations for each case? | Subsequent outputs can also build upon previous ones, forming a sort of portfolio for each student or class. This creates an opportunity for comparative analysis of interaction dynamics over time. |

| DLE specifications and interaction context should be taken into account while generating tips | Are the tips adapted to the context of the student’s learning behavior? | The system could provide aggregated action plans for educators or administrators, summarizing the tips for multiple students or groups. This feature would allow educators to implement data-driven interventions at scale. |

Appendix E. Interview Analysis

Table A2.

Interview protocol.

Table A2.

Interview protocol.

| Informative Potential | Question in the Interview |

|---|---|

| Background | What are the main strengths of Opiq? |

| What disadvantages of Opiq have you noted? | |

| RQ1 | Do you think that the process of students’ interaction with Opiq may vary? For example, students may use different strategies when learning material. |

| Do you think that different activities in Opiq (reading, video, gallery, practice, etc.) may vary depending on the context (task, age, subject, etc.)? Share your observations and thoughts on this matter. | |

| Do you think certain combinations of interactions (e.g., reading + watching videos, or practicing in preparation for obligatory tests) can be effective in the learning process? Share your observations and thoughts on this matter. | |

| Do you think that the order of studying content in Opiq matters? | |

| Would you as a teacher benefit from more detailed information about student interaction with Opiq? | |

| RQ2 | What information can you extract from graphs without explanation? |

| Is the textual interpretation of the graphs clear? Did it expand your understanding? | |

| Do you find the tips the bot gives you based on current information useful? | |

| RQ3 | What is your overall impression of the custom GPT? |

| What other statistics on your students’ use of Opiq would you like to know? |

Table A3.

Categories and code names resulting from the analysis of interviews.

Table A3.

Categories and code names resulting from the analysis of interviews.

| Dimension | Category | Code |

|---|---|---|

| Opinions on the Opiq environment | Positive features | Using material from Opiq to create own content (tests) |

| Showing some content from Opiq | ||

| Showing different strategies for studying material | ||

| Collaboration with students | ||

| Saving time | ||

| Automatic assessment | ||

| Sustainability | ||

| Negative features | Limited access | |

| Less effectiveness in comparison to classic books | ||

| Technical issues | ||

| Outdated information | ||

| Opinions on the process of students’ interaction | Variety of students’ interaction process | Test-taking strategies |

| General learning strategies | ||

| Order of interactions | ||

| Certain combinations | ||

| Factors influencing students’ strategies | Subject | |

| Quality of material | ||

| Motivation | ||

| Grade | ||

| Personal preferences | ||

| Platform flexibility | ||

| Information of interaction and ways of its application | Process of preparation for tests | |

| How much time students spend on different activities | ||

| Process of test-taking | ||

| Using the information for additional support | ||

| Using the information to plan the workload | ||

| Check if some content is avoided | ||

| Opinions on the design and functionality of the Custom GPT | Visualizations | Pictures are clear |

| Legend ambiguity | ||

| Additional info needed | ||

| Related frequency concept | ||

| Pictures aren’t clear | ||

| Descriptions | Descriptions are needed | |

| Descriptions can expand the understanding | ||

| Descriptions aren’t needed | ||

| Tips | Can be useful | |

| Different from teacher’s opinion on the case | ||

| Repeating description | ||

| Too general | ||

| Overall thoughts and ideas about the chatbot | Uncertainty about application | |

| Group analysis | ||

| Individual analysis | ||

| Flexibility to expand the analysis | ||

| Using a tool for diagnostic at some specific moments | ||

| Privacy concerns | ||

| Wider application | ||

| Other ways of visualization |

Source link

Yaroslav Opanasenko www.mdpi.com